- Google DeepMind has released Gemini, its most capable multimodal AI model, which comes in three sizes: Ultra, Pro, and Nano.

- According to Google's benchmarks, Gemini Ultra is on par with OpenAI's GPT-4 and beats GPT-4 Vision in multimodal tests.

- Gemini Pro is already live in Google Bard, and Pixel phones are getting features powered by Gemini Nano. Gemini Ultra will launch early next year.

At the Google I/O 2023 conference in May, the company gave us a glimpse of Gemini, its most capable AI model. Finally, before the end of 2023, Google released the Gemini AI models to the public. Google is calling this “the Gemini era,” as it’s a significant milestone for the company. But what exactly is Google Gemini AI, and can it dethrone the long-reigning king, GPT-4? To find out, let’s go through our detailed explainer on the Gemini AI models.

What is Google Gemini AI?

Gemini is the latest and most capable large language model (LLM) developed by Google DeepMind, a Google subsidiary headquartered in London. It succeeds the PaLM 2 model, which was developed by Google’s in-house Google AI division. This is the first time we’re seeing a full-fledged AI system released to the public by the DeepMind team.

It’s important to note that Google merged its Google Brain division with the DeepMind team in April 2023 to build a powerful model that can compete with OpenAI’s best models. Gemini is the culmination of that joint effort.

Now to the vital question: what sets Gemini AI apart from OpenAI’s GPT-4 or Google’s own PaLM 2 model? To begin with, Gemini is truly a multimodal model. Although PaLM 2 supported image analysis, it relied on Google Lens and semantic analysis to infer data points from an uploaded image. Basically, it was a stopgap arrangement by Google to bring image support to Bard.

GPT-4, which is also a multimodal model, differs from Gemini AI too. In our detailed article on the upcoming GPT-5 model, we explained that GPT-4 is reportedly not one dense model. Instead, according to leaked and unconfirmed reports, it uses a “Mixture of Experts” (MoE) architecture with 16 expert sub-networks inside one model. On top of that, varied tasks like image analysis, image generation, and voice processing are handled by separate models such as GPT-4 Vision, DALL-E, and Whisper.
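
To make the “Mixture of Experts” idea concrete: in an MoE layer, a small gating network scores every expert for each input, only the top-k experts actually run, and their outputs are mixed using the renormalized gate weights. The Python sketch below illustrates that routing step in general. OpenAI has not published GPT-4’s architecture, so the four toy scalar “experts”, the gate logits, and the helper names (`softmax`, `moe_forward`, `EXPERTS`) are hypothetical stand-ins, not GPT-4’s actual design.

```python
import math
from typing import Callable, List

def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of gate logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(
    x: float,
    gate_logits: List[float],
    experts: List[Callable[[float], float]],
    k: int = 2,
) -> float:
    """Hypothetical top-k MoE routing: run only the k highest-scoring experts
    and combine their outputs, weighted by renormalized gate probabilities."""
    probs = softmax(gate_logits)
    # Indices of the k experts with the highest gate probability
    top_k = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    weight_sum = sum(probs[i] for i in top_k)
    return sum(probs[i] / weight_sum * experts[i](x) for i in top_k)

# Toy experts: each one just scales its input differently (illustrative only)
EXPERTS = [lambda v: 1 * v, lambda v: 2 * v, lambda v: 3 * v, lambda v: 4 * v]

if __name__ == "__main__":
    # The gate strongly prefers experts 2 and 3, so experts 0 and 1 never run
    print(moe_forward(2.0, [0.0, 0.0, 10.0, 10.0], EXPERTS, k=2))  # 7.0
```

Because only k experts run per input, an MoE model can hold many parameters while spending compute on a fraction of them. That is a different idea from handing whole tasks to separate models like DALL-E or Whisper.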

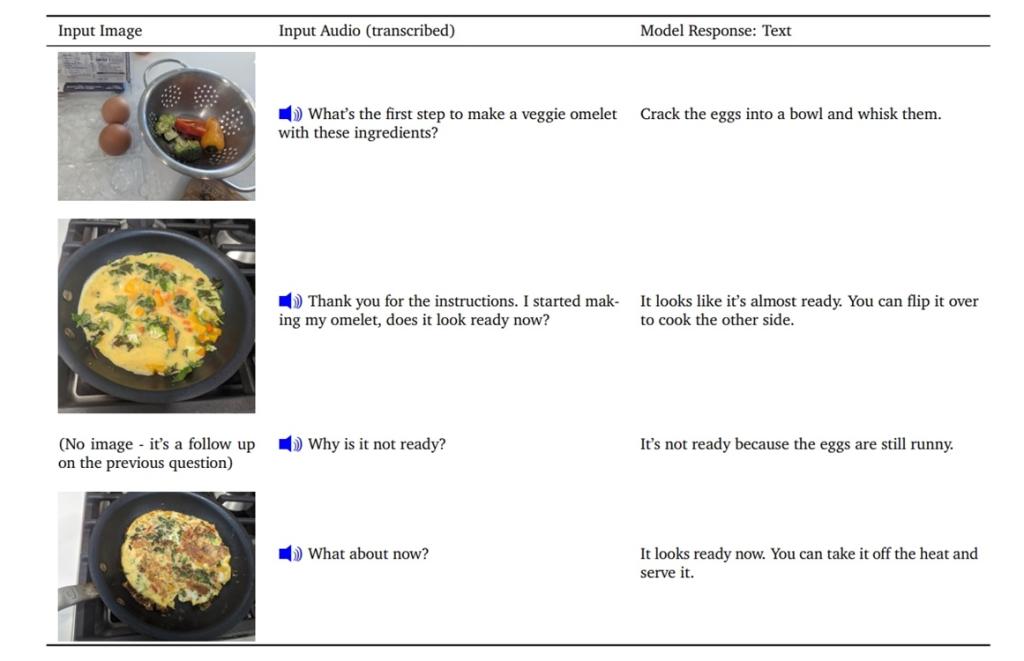

And that’s where Google Gemini is distinct from other multimodal models. Gemini is a “natively multimodal” AI model: it was designed from the ground up to be multimodal, with text, images, audio, video, and code all trained together to form one powerful AI system.

Thanks to this native multimodality, Gemini can seamlessly process information across different modalities at the same time.

If you are wondering what difference that makes for an end user like you, there are plenty of advantages to having a native multimodal AI system, and we discuss them in detail below. But before that, let’s dive into Gemini’s multimodal capabilities.

Gemini AI is Truly Multimodal

To understand how Gemini AI differs from other multimodal models, let’s take audio processing as an example. One of the most popular speech recognition models today is OpenAI’s Whisper v3. It can recognize multilingual speech, identify the language, transcribe the speech, and translate it as well. However, it can’t pick up the tone, tenor, and subtle nuances of the audio, such as pronunciation.

Someone might be sad or happy while saying “hello,” but Whisper can’t decipher the speaker’s mood because it only transcribes the audio. Gemini, on the other hand, processes the raw audio signal end to end, so it can capture nuance and mood as well. Google’s AI model can differentiate pronunciations in different languages and transcribe them with proper annotation. This makes Gemini AI a more capable multimodal system.

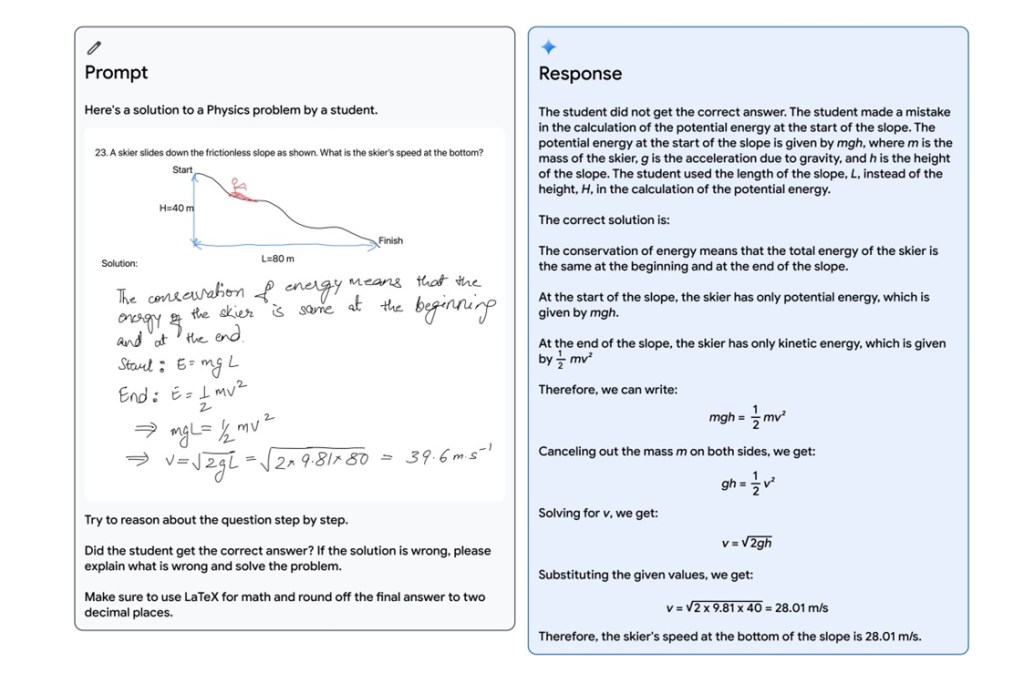

Apart from that, Gemini can both analyze and generate images (likely with Imagen 2 built in). In visual analysis, Gemini is outstanding. It can find connections between images, guess movies from stills, turn images into code, understand the environment around you, evaluate handwritten text, explain the reasoning in math and physics problems, and much more. These capabilities likely hold up even though Google’s hands-on Gemini demo video was edited and did not show real-time interaction with the model.

Not to forget, it can process and understand videos as well. Coming to coding, Gemini AI supports most programming languages, including popular ones like Python, Java, C++, and Go. It’s much better than PaLM 2 at solving complex coding problems: according to Google, Gemini solves about 75% of the Python functions in its test on the first try, whereas PaLM 2 solved only 45%. And if the user follows up with debugging input, the solve rate rises above 90%.
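
“Solved on the first try” corresponds to what code benchmarks such as HumanEval call pass@1. A common way to compute pass@k is the unbiased estimator from OpenAI’s Codex paper (Chen et al., 2021): generate n samples per problem, count the c samples that pass the unit tests, and estimate the chance that at least one of k drawn samples passes. The sketch below assumes that convention. The article doesn’t say exactly how Google measured its 75% and 45% figures, and the per-problem results in the usage line are made up.

```python
from math import comb
from typing import List, Tuple

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples generated, c of them correct."""
    if n - c < k:
        # Every possible draw of k samples contains at least one correct sample
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates over a benchmark of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

if __name__ == "__main__":
    # Hypothetical results for three problems, 10 samples each
    results = [(10, 10), (10, 5), (10, 0)]
    print(round(benchmark_pass_at_k(results, k=1), 3))  # 0.5
```

For k = 1 the estimate reduces to c/n per problem, which is why pass@1 reads naturally as “solved on the first try.”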

Besides that, Google has created a specialized version of Gemini for advanced code generation, dubbed AlphaCode 2. It excels at competitive programming and can solve incredibly tough problems involving complex math and theoretical computer science. Compared with human competitors, AlphaCode 2 performs better than an estimated 85% of participants in competitive programming contests.

Overall, Google Gemini is a remarkable multimodal AI system for several use cases, including text generation and reasoning, image analysis, code generation, audio processing, and video understanding.

Gemini AI Comes in Three Flavors

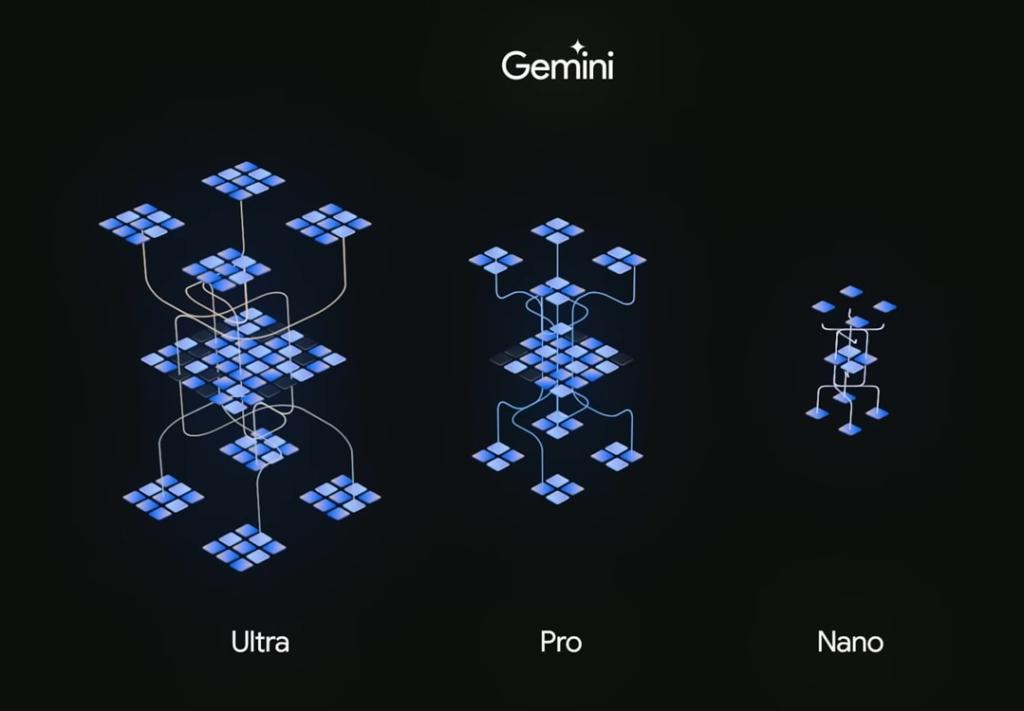

Google has announced Gemini AI in three variants (Ultra, Pro, and Nano) but has not disclosed the parameter counts of Ultra and Pro. Its technical report lists Nano at 1.8 billion (Nano-1) and 3.25 billion (Nano-2) parameters. Gemini Ultra, the closest rival to GPT-4, is Google’s largest and most capable model, with a full suite of multimodal capabilities. According to the company, Ultra is best suited for highly complex and challenging tasks.

That said, Gemini Ultra has not been released yet. The company says Ultra will go through rigorous trust and safety checks before launching early next year for developers and enterprise customers.

In addition, Google will launch Bard Advanced early next year so consumers can experience Gemini Ultra with its full multimodal capabilities. Users are likely to get access to AlphaCode 2 as well.

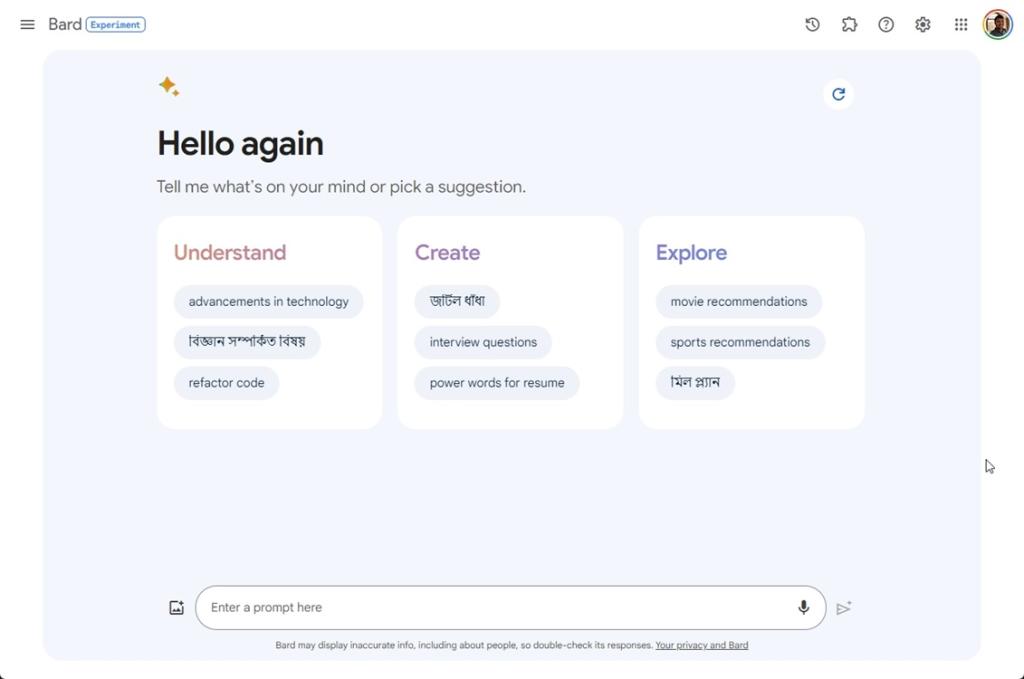

Coming to Gemini Pro, it is already live in Google Bard, Google’s ChatGPT alternative, and the transition from PaLM 2 to Gemini Pro will be completed by the end of December.

The Pro model is designed for a broad range of tasks, and according to Google, it beats OpenAI’s GPT-3.5 model on several benchmarks. Google has also released APIs for the Gemini Pro model, covering both text and vision.
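
For developers, calling Gemini Pro comes down to an HTTPS request. The sketch below builds a request with Python’s standard library but does not send it. It assumes the v1beta `generateContent` REST endpoint of the Gemini API and the `gemini-pro` / `gemini-pro-vision` model names available at launch. Check Google’s API documentation before relying on them, because endpoints and model names change. `YOUR_API_KEY` is a placeholder, and `build_generate_request` is a hypothetical helper.

```python
import base64
import json
import urllib.request
from typing import Optional

# Assumed REST base URL for the Gemini API (v1beta, as documented at launch)
API_BASE = "https://generativelanguage.googleapis.com/v1beta/models"

def build_generate_request(
    prompt: str,
    api_key: str,
    image_bytes: Optional[bytes] = None,
    mime_type: str = "image/jpeg",
) -> urllib.request.Request:
    """Build (but don't send) a generateContent request for text or text+image input."""
    parts = [{"text": prompt}]
    model = "gemini-pro"
    if image_bytes is not None:
        # Images are sent inline as base64 and need the vision model
        parts.append({
            "inline_data": {
                "mime_type": mime_type,
                "data": base64.b64encode(image_bytes).decode("ascii"),
            }
        })
        model = "gemini-pro-vision"
    body = {"contents": [{"parts": parts}]}
    return urllib.request.Request(
        f"{API_BASE}/{model}:generateContent?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

if __name__ == "__main__":
    req = build_generate_request("Explain Gemini Nano in one sentence.", "YOUR_API_KEY")
    print(req.get_method(), req.full_url)
    # To actually send it (requires a real key and network access):
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["candidates"][0]["content"]["parts"][0]["text"])
```

Text and image travel as “parts” of a single request, which mirrors how the vision model takes mixed inputs in one call.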

Currently, Gemini Pro in Bard is available only in English, in over 170 countries around the world. Multimodal support and new languages will be added to Bard shortly. Google also says Gemini will be integrated into more of its products in the coming months, including Search, Chrome, Ads, and Duet AI.

Finally, the smallest model, Gemini Nano, has already arrived on the Pixel 8 Pro and will come to other Pixel devices as well. Nano has been designed for on-device, private, and personalized AI experiences on smartphones.

It powers features like Summarize in the Recorder app and Smart Reply in Gboard, starting with WhatsApp, Line, and KakaoTalk. Support for other messaging apps will be added early next year.

Google Gemini AI is Efficient to Run

Now, coming to the advantages of a native multimodal AI system: first off, it’s much faster and more efficient to run the model and scale the product to millions of users. We already know that OpenAI’s GPT-4 is relatively slow, and recently the company paused new ChatGPT Plus sign-ups because demand outstripped its compute capacity. Running separate text-only, vision-only, and audio-only models and stitching them together sub-optimally raises the cost of the overall infrastructure. In the end, it hampers the user experience.

In its blog post, Google says Gemini was trained on its TPU v4 and v5e accelerators and runs significantly faster on TPUs than earlier, smaller, and less capable models. That should make Gemini faster and cheaper to serve than the older PaLM 2 model. In short, a native multimodal model lets Google serve millions of users while keeping compute costs low.

Gemini Ultra vs GPT-4: Benchmarks

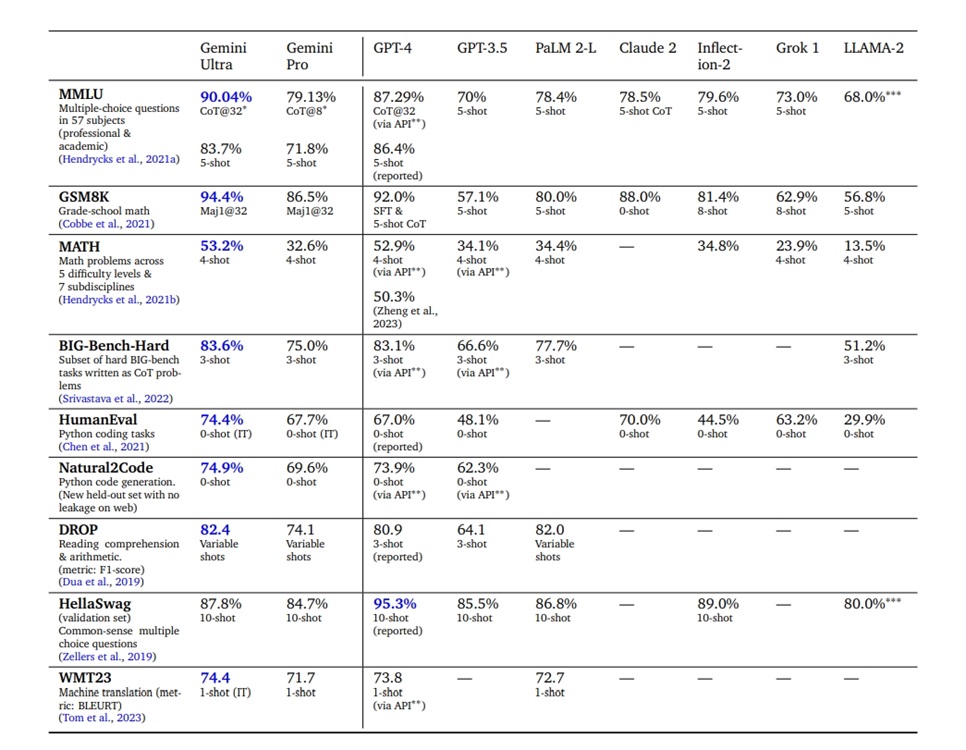

Now, let’s look at some benchmark numbers to see whether Google has managed to outrank OpenAI with Gemini. According to Google, Gemini Ultra beats GPT-4 on 30 of the 32 benchmarks widely used to evaluate LLM performance. Google is touting Gemini Ultra’s score of 90.04% on the popular MMLU benchmark, on which GPT-4 scored 86.4%. It even outperforms human experts (89.8%) on MMLU.

Gemini Ultra’s MMLU number, however, has drawn criticism from many quarters. Google obtained the 90.04% score with CoT@32, a chain-of-thought (CoT) prompting setup that samples 32 reasoning chains per question. With standard 5-shot prompting, Gemini Ultra’s score drops to 83.7%, while GPT-4’s stands at 86.4%, which keeps GPT-4 the highest scorer on MMLU in that setting.

This doesn’t diminish Gemini Ultra’s capability, but it does mean better prompting is required to elicit accurate responses from the model.
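
Google’s technical report describes its CoT@32 setup as “uncertainty-routed” chain-of-thought. The model generates 32 chain-of-thought samples per question. If enough of those samples agree, it takes the majority answer; otherwise, it falls back to its greedy (single, non-sampled) answer. The sketch below shows only that final routing step. It assumes the 32 answers have already been extracted from the model’s reasoning chains. The 0.6 and 0.7 thresholds in the usage lines are made-up values, since Google tuned its threshold on a validation split.

```python
from collections import Counter
from typing import List

def uncertainty_routed_answer(
    sampled_answers: List[str], greedy_answer: str, threshold: float
) -> str:
    """Return the majority-vote answer if consensus among the sampled
    chain-of-thought answers meets the threshold, else the greedy answer."""
    top_answer, votes = Counter(sampled_answers).most_common(1)[0]
    consensus = votes / len(sampled_answers)
    return top_answer if consensus >= threshold else greedy_answer

if __name__ == "__main__":
    # Hypothetical multiple-choice question: 32 sampled answers, 20 say "B", 12 say "C"
    samples = ["B"] * 20 + ["C"] * 12
    print(uncertainty_routed_answer(samples, greedy_answer="A", threshold=0.6))  # B
    print(uncertainty_routed_answer(samples, greedy_answer="A", threshold=0.7))  # A
```

A 5-shot score comes from one answer per question, whereas CoT@32 spends 32 generations per question. The two numbers therefore aren’t directly comparable.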

Moving to other benchmarks: on HumanEval (Python code generation), Gemini Ultra scores 74.4%, whereas GPT-4 scores 67.0%. On HellaSwag, which evaluates commonsense reasoning, Gemini Ultra (87.8%) loses to GPT-4 (95.3%). On Big-Bench Hard, which tests challenging multi-step reasoning, Gemini Ultra (83.6%) edges out GPT-4 (83.1%).

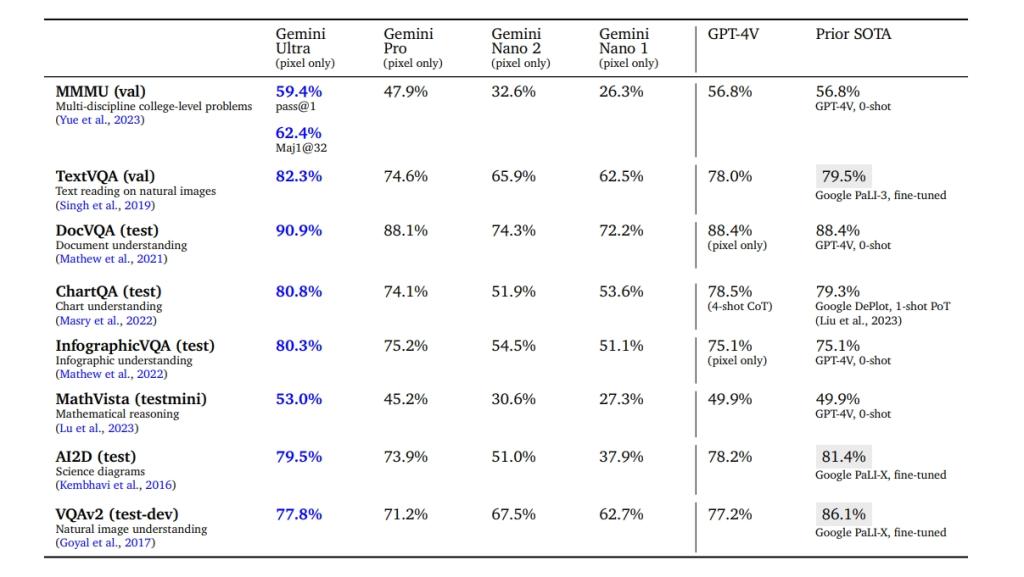

Moving to multimodal tests, Gemini Ultra wins against GPT-4V (Vision) on almost all counts. On MMMU, Gemini Ultra scores 59.4% and GPT-4V scores 56.8%. On natural image understanding (VQAv2), Gemini Ultra scores 77.8% and GPT-4V scores 77.2%. On OCR over natural images (TextVQA), Gemini Ultra scores 82.3% and GPT-4V scores 78.0%. On document understanding (DocVQA), Gemini Ultra scores 90.9% and GPT-4V scores 88.4%. Finally, on infographic understanding (InfographicVQA), Gemini Ultra scores 80.3% and GPT-4V scores 75.1%.
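
To see how the headline comparison looks under matched settings, the short script below tabulates the nine Gemini Ultra vs GPT-4/GPT-4V scores quoted in this article. It uses the 5-shot MMLU figures so that both models are compared under the same prompting. The numbers come straight from the text. The script only does the arithmetic and is not Google’s methodology.

```python
from typing import Dict, List, Tuple

# (benchmark, Gemini Ultra %, GPT-4 or GPT-4V %) as quoted in this article
SCORES: List[Tuple[str, float, float]] = [
    ("MMLU (5-shot)", 83.7, 86.4),
    ("HumanEval", 74.4, 67.0),
    ("HellaSwag", 87.8, 95.3),
    ("Big-Bench Hard", 83.6, 83.1),
    ("MMMU", 59.4, 56.8),
    ("VQAv2", 77.8, 77.2),
    ("TextVQA", 82.3, 78.0),
    ("DocVQA", 90.9, 88.4),
    ("InfographicVQA", 80.3, 75.1),
]

def margins(scores: List[Tuple[str, float, float]]) -> Dict[str, float]:
    """Gemini Ultra's lead (positive) or deficit (negative) in percentage points."""
    return {name: round(gemini - gpt4, 1) for name, gemini, gpt4 in scores}

def gemini_wins(scores: List[Tuple[str, float, float]]) -> int:
    """Count the benchmarks where Gemini Ultra scores strictly higher."""
    return sum(1 for _, gemini, gpt4 in scores if gemini > gpt4)

if __name__ == "__main__":
    for name, diff in margins(SCORES).items():
        print(f"{name:<16} {diff:+.1f} pts")
    print(f"Gemini Ultra leads on {gemini_wins(SCORES)} of {len(SCORES)}")
```

Several margins, such as Big-Bench Hard and VQAv2, are under one point. Gaps that small can easily be swamped by prompting choices like the CoT@32 vs 5-shot difference in the MMLU numbers.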

You can find more in-depth comparisons between Gemini Ultra and GPT-4 in the research paper released by Google DeepMind. The key takeaway from the benchmark numbers is that Google has indeed come up with a capable model that can compete with the best LLMs out there, including GPT-4. And in terms of multimodal capability, Google seems to be back in business.

Gemini AI: Safety Checks in Place

When it comes to AI safety, Google always espouses its “bold and responsible” adage, and the Google DeepMind team is following the same principle. Google says it has done both internal and external testing of the models before releasing them to the public.

Google has set proactive policies around the Gemini models to check user inputs and model responses for bias and toxicity. The Gemini models can still hallucinate, though Google says they do so to a lesser degree.
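
Developers get a configurable version of this filtering through the Gemini API, which lets each request specify how aggressively to block content in harm categories such as harassment and hate speech. The sketch below builds a `safetySettings` list for a request body. The category and threshold names are the ones we understand the Gemini API documented at launch, so treat them as assumptions to verify against Google’s current docs. `build_safety_settings` is a hypothetical helper.

```python
from typing import Dict, List

# Assumed harm categories and block thresholds for the Gemini API (verify against current docs)
HARM_CATEGORIES = [
    "HARM_CATEGORY_HARASSMENT",
    "HARM_CATEGORY_HATE_SPEECH",
    "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "HARM_CATEGORY_DANGEROUS_CONTENT",
]
THRESHOLDS = {"BLOCK_NONE", "BLOCK_ONLY_HIGH", "BLOCK_MEDIUM_AND_ABOVE", "BLOCK_LOW_AND_ABOVE"}

def build_safety_settings(threshold: str = "BLOCK_MEDIUM_AND_ABOVE") -> List[Dict[str, str]]:
    """Apply one blocking threshold to every harm category."""
    if threshold not in THRESHOLDS:
        raise ValueError(f"unknown threshold: {threshold}")
    return [{"category": c, "threshold": threshold} for c in HARM_CATEGORIES]

if __name__ == "__main__":
    body = {
        "contents": [{"parts": [{"text": "Hello"}]}],
        "safetySettings": build_safety_settings("BLOCK_ONLY_HIGH"),
    }
    print(len(body["safetySettings"]))  # 4
```

These filters act on prompts and responses at serving time. They are separate from the bias and toxicity evaluations Google says it ran before release.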

Google also says it is working with external groups such as MLCommons on evaluating AI systems, and its Secure AI Framework (SAIF) is meant to help the industry mitigate risks associated with AI systems. The company is currently running safety checks on its most powerful model, Gemini Ultra, which will be released early next year once all the checks are done.

Verdict: The Gemini AI Era is Here

Although Google was caught off guard when ChatGPT was released a year ago, it seems Google has finally caught up with OpenAI with the Gemini models. The Ultra model, in particular, is impressive, and we can’t wait to test it out, sketchy benchmark numbers notwithstanding. From what we can see in the research paper, its multimodal visual capability is remarkable and its coding performance is top-notch.

The Gemini models are quite different from what we have seen from Google so far. They feel more like AI systems built from scratch. That said, OpenAI might come out with GPT-5 around the time Google releases Gemini Ultra early next year, which would once again put Google in a race against time. So, what do you think about Google’s new Gemini AI models? Share your thoughts in the comment section below.