- Despite being a much smaller model, Llama 3 70B performs impressively against the top-tier GPT-4 model.

- It excels in most of our advanced reasoning tests and does better than GPT-4 at following user instructions.

- Llama 3 70B has a shorter context window of 8K tokens but retrieves information accurately within it.

Meta recently introduced its Llama 3 model in two sizes, 8B and 70B parameters, and open-sourced both for the AI community. Despite being a relatively small 70B model, Llama 3 has shown impressive capability, as is evident from the LMSYS leaderboard. So we compared Llama 3 with OpenAI’s flagship GPT-4 model across a range of tests. Let’s go through the results.

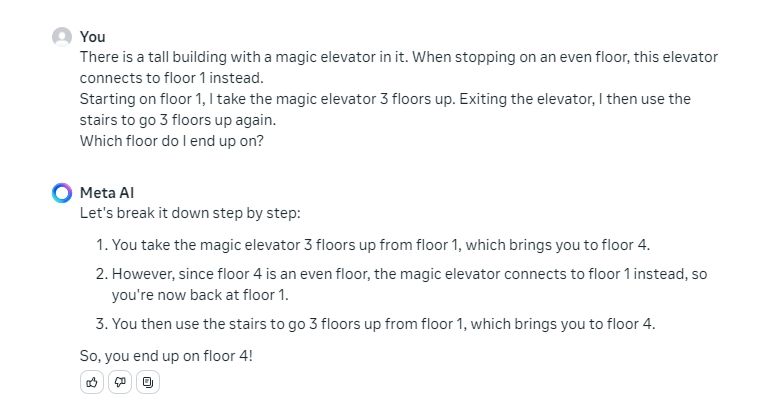

1. Magic Elevator Test

Let’s first run the magic elevator test to evaluate the logical reasoning capability of Llama 3 in comparison to GPT-4. And guess what? Llama 3 passes the test, whereas GPT-4 fails to provide the correct answer. This is pretty surprising, since Llama 3 has only 70 billion parameters, while GPT-4 reportedly has around 1.7 trillion (OpenAI has not disclosed the figure).

Keep in mind that we ran the test on the GPT-4 model hosted on ChatGPT (available to paid ChatGPT Plus users), which seems to be using the older GPT-4 Turbo model. When we ran the same test on the recently released GPT-4 model (gpt-4-turbo-2024-04-09) via the OpenAI Playground, it passed. OpenAI says it is rolling out the latest model to ChatGPT, but perhaps it’s not available on our account yet.

There is a tall building with a magic elevator in it. When stopping on an even floor, this elevator connects to floor 1 instead.

Starting on floor 1, I take the magic elevator 3 floors up. Exiting the elevator, I then use the stairs to go 3 floors up again.

Which floor do I end up on?
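For reference, the expected answer can be traced step by step. The sketch below is our own Python model of the riddle, assuming the elevator first travels to the requested floor and, if that floor is even, delivers you to floor 1 instead; the function names are hypothetical.

```python
def ride_magic_elevator(start: int, floors_up: int) -> int:
    """Go up by elevator; if it stops on an even floor, you end up on floor 1."""
    stop = start + floors_up
    return 1 if stop % 2 == 0 else stop


def take_stairs(start: int, floors_up: int) -> int:
    """The stairs behave normally."""
    return start + floors_up


floor = ride_magic_elevator(1, 3)  # 1 + 3 = 4 is even, so the elevator delivers you to floor 1
floor = take_stairs(floor, 3)      # 1 + 3 = 4
print(floor)  # 4
```

So the correct answer is floor 4.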

Winner: Llama 3 70B, and gpt-4-turbo-2024-04-09

Note: GPT-4 loses on ChatGPT Plus

2. Calculate Drying Time

Next, we ran a classic reasoning question to test the intelligence of both models. Both Llama 3 70B and GPT-4 gave the correct answer without delving into unnecessary math. Good job, Meta!

If it takes 1 hour to dry 15 towels under the Sun, how long will it take to dry 20 towels?
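The trap here is treating drying time as proportional to the number of towels. Below is a minimal Python sketch of the intended reasoning, assuming the towels dry in parallel; the `capacity` parameter is a hypothetical limit we added only to show when the answer would change.

```python
import math
from typing import Optional


def drying_time_hours(towels: int, hours_per_batch: float = 1.0,
                      capacity: Optional[int] = None) -> float:
    """Towels dry in parallel, so time depends on the number of batches, not towels.

    capacity is a hypothetical limit on how many towels fit in the sun at once;
    None means there is room for all of them.
    """
    if capacity is None:
        return hours_per_batch
    batches = math.ceil(towels / capacity)
    return batches * hours_per_batch


naive = 20 / 15 * 1.0  # the proportional (wrong) answer: about 1.33 hours
print(drying_time_hours(20))               # 1.0
print(drying_time_hours(20, capacity=15))  # 2.0 if only 15 towels fit at once
```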

Winner: Llama 3 70B, and GPT-4 via ChatGPT Plus

3. Find the Apple

After that, I asked another question to compare the reasoning capability of Llama 3 and GPT-4. Here, Llama 3 70B comes close to the right answer but fails to mention the box. GPT-4, on the other hand, correctly answers that “the apples are still on the ground inside the box”. I am giving this round to GPT-4.

There is a basket without a bottom in a box, which is on the ground. I put three apples into the basket and move the basket onto a table. Where are the apples?

Winner: GPT-4 via ChatGPT Plus

4. Which is Heavier?

The question seems simple, yet many AI models get it wrong. In this test, however, both Llama 3 70B and GPT-4 gave the correct answer. That said, Llama 3 sometimes answers it incorrectly, so keep that in mind.

What's heavier, a kilo of feathers or a pound of steel?
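The trick is that the units differ, so a single unit conversion settles it. This sketch uses 0.45359237 kg per pound, the exact international definition of the pound:

```python
KG_PER_POUND = 0.45359237  # exact by definition (international avoirdupois pound)

feathers_kg = 1.0               # one kilogram of feathers
steel_kg = 1.0 * KG_PER_POUND   # one pound of steel, converted to kilograms

heavier = "feathers" if feathers_kg > steel_kg else "steel"
print(heavier)                               # feathers
print(round(feathers_kg / KG_PER_POUND, 2))  # 2.2 (a kilo is about 2.2 lb)
```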

Winner: Llama 3 70B, and GPT-4 via ChatGPT Plus

5. Find the Position

Next, I asked a simple logical question and both models gave a correct response. It’s interesting to see a much smaller Llama 3 70B model rivaling the top-tier GPT-4 model.

I am in a race and I am overtaken by the second person. What is my new position?
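One way to check the answer is to simulate the race order. This sketch reads “the second person” as the runner in second place, which means I must have been leading just before; the `overtake` helper and the runner names are hypothetical.

```python
def overtake(order: list[str], overtaker: str) -> list[str]:
    """Return a new race order in which `overtaker` passes the runner directly ahead."""
    i = order.index(overtaker)
    if i == 0:
        raise ValueError(f"{overtaker} is already leading")
    new_order = order.copy()
    new_order[i - 1], new_order[i] = new_order[i], new_order[i - 1]
    return new_order


# If the runner in second place overtakes me, I must have been directly ahead: first.
order = ["me", "second_place", "third_place"]
order = overtake(order, "second_place")
print(order.index("me") + 1)  # 2
```

Either way, the new position is second place.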

Winner: Llama 3 70B, and GPT-4 via ChatGPT Plus

6. Solve a Math Problem

Next, we gave both Llama 3 and GPT-4 a complex math problem. GPT-4 passes the test with flying colors, while Llama 3 fails to arrive at the right answer. This is not surprising, since GPT-4 scores highly on the MATH benchmark. Keep in mind that I explicitly asked ChatGPT not to use Code Interpreter for the calculations.

Determine the sum of the y-coordinates of the four points of intersection of y = x^4 - 5x^2 - x + 4 and y = x^2 - 3x.
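For reference, here is one way to reach the expected answer by hand using Vieta’s formulas. This is our own worked solution, not either model’s output:

```latex
% Set the two curves equal and collect terms:
x^4 - 5x^2 - x + 4 = x^2 - 3x \;\Longrightarrow\; x^4 - 6x^2 + 2x + 4 = 0

% Vieta's formulas for the four roots x_1, \dots, x_4 (there is no x^3 term):
\sum_{i} x_i = 0, \qquad \sum_{i<j} x_i x_j = -6

% Sum of squares of the roots:
\sum_{i} x_i^2 = \Big(\sum_{i} x_i\Big)^2 - 2\sum_{i<j} x_i x_j = 0 - 2(-6) = 12

% Each intersection lies on y = x^2 - 3x, so
\sum_{i} y_i = \sum_{i} \left(x_i^2 - 3x_i\right) = 12 - 3 \cdot 0 = 12
```

As a sanity check, x = 2 is one root (giving the point (2, -2)), and the remaining factor x^3 + 2x^2 - 2x - 2 changes sign on (-3, -1), (-1, 0), and (1, 2), so all four intersection points are real and the expected answer is 12.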

Winner: GPT-4 via ChatGPT Plus

7. Follow User Instructions

Following user instructions is very important for an AI model, and Meta’s Llama 3 70B excels at it. It generated all 10 sentences ending with the word “mango”, while GPT-4 managed only eight.

Generate 10 sentences that end with the word "mango"
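Counting compliant sentences by eye is easy to get wrong, so a small scorer helps. The sketch below is a hypothetical helper, not necessarily how the sentences above were counted; it treats a sentence as compliant only if its last word is exactly “mango”, ignoring case, quotes, and trailing punctuation.

```python
import re


def ends_with_word(sentence: str, word: str) -> bool:
    """True if the last word of `sentence` is exactly `word` (case-insensitive).

    Trailing punctuation and quotes are ignored; plurals such as "mangoes" do not count.
    """
    words = re.findall(r"[A-Za-z']+", sentence)
    return bool(words) and words[-1].strip("'").lower() == word.lower()


def count_compliant(sentences: list[str], word: str = "mango") -> int:
    """Count how many sentences end with the required word."""
    return sum(ends_with_word(s, word) for s in sentences)


sample = [
    "For dessert, I chose a ripe mango.",
    "She sliced the mango into cubes.",
    'He asked, "Is that a mango?"',
]
print(count_compliant(sample))  # 2
```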

Winner: Llama 3 70B

8. NIAH Test

Although Llama 3 doesn’t currently have a long context window, we still ran the Needle in a Haystack (NIAH) test to check its retrieval capability. Llama 3 70B supports a context length of up to 8K tokens, so I placed a needle (a random statement) inside a roughly 35K-character text (about 8K tokens) and asked the model to find it. Surprisingly, Llama 3 70B found the needle in no time, and GPT-4 also had no trouble finding it.

Of course, 8K tokens is a small context; when Meta releases a Llama 3 model with a much larger context window, I will test it again. For now, Llama 3 shows great retrieval capability.
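A NIAH prompt is straightforward to build. The article does not share its exact haystack or needle, so the filler sentences, needle, question, and `build_haystack` helper below are hypothetical; the sketch only illustrates burying one fact at a chosen depth inside roughly 35K characters of text.

```python
import random


def build_haystack(filler: list[str], needle: str, target_chars: int = 35_000,
                   depth: float = 0.5, seed: int = 0) -> str:
    """Pad random filler sentences to at least `target_chars` and bury `needle` inside.

    depth is the needle's relative position: 0.0 = start, 1.0 = end.
    """
    rng = random.Random(seed)
    parts: list[str] = []
    total = 0
    while total < target_chars:
        sentence = rng.choice(filler)
        parts.append(sentence)
        total += len(sentence) + 1  # +1 for the joining space
    parts.insert(int(len(parts) * depth), needle)
    return " ".join(parts)


filler = [
    "The harbour was quiet that morning.",
    "A train rattled past the old mill.",
    "Rain was forecast for the afternoon.",
]
needle = "The secret ingredient in the soup is saffron."  # hypothetical needle
haystack = build_haystack(filler, needle)
prompt = f"{haystack}\n\nWhat is the secret ingredient in the soup?"

# ~4 characters per token is a rough rule of thumb for English, not Llama 3's tokenizer.
print(len(haystack), "chars, roughly", len(haystack) // 4, "tokens")
```

Varying `depth` across runs shows whether retrieval holds up when the needle sits near the start, middle, or end of the context.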

Winner: Llama 3 70B, and GPT-4 via ChatGPT Plus

Llama 3 vs GPT-4: The Verdict

In almost all of the tests, Llama 3 70B has shown impressive capabilities, be it advanced reasoning, following user instructions, or retrieval. It clearly lags behind GPT-4 only in mathematical calculation, and it narrowly lost the apple-in-the-box reasoning test. Meta says Llama 3 was trained on a larger code dataset, so its coding performance should also be strong, though we did not test coding here.

Bear in mind that we are comparing a much smaller model with GPT-4. Also, Llama 3 is a dense model, whereas GPT-4 is reportedly built on a Mixture-of-Experts (MoE) architecture of 8 experts with about 222B parameters each (again, unconfirmed by OpenAI). It goes to show that Meta has done a remarkable job with the Llama 3 family of models. When Meta’s larger 400B+ Llama 3 model drops, it should perform even better and may rival the best AI models out there.

It’s safe to say that Llama 3 has upped the game, and by open-sourcing the model, Meta has significantly narrowed the gap between proprietary and open-source models. We ran all these tests on the Instruct model; models fine-tuned from Llama 3 70B could perform even better. Alongside OpenAI, Anthropic, and Google, Meta is now firmly in the AI race.
