- We got access to Gemini 1.5 Pro via Google AI Studio, and the model performed remarkably well in our tests, a far cry from Google's earlier models.

- The 1 million token context length is a game changer. It can handle large swathes of data, something that even GPT-4 fails to process.

- The native multimodal capability allows the model to process videos (we tested it), images, and various file formats seamlessly.

Google announced the next generation of the Gemini model, Gemini 1.5 Pro, two weeks ago, and we finally got access to a 1 million token context window on the highly anticipated model this morning. So, I dropped all my work for the day, texted my editor that I was testing the new Gemini model, and got to work.

Before I show my comparison results for Gemini 1.5 Pro vs GPT-4 and Gemini 1.0 Ultra, let’s go over the basics of the new Gemini 1.5 Pro model.

What Is the Gemini 1.5 Pro AI Model?

The Gemini 1.5 Pro model appears to be a remarkable multimodal LLM from Google’s stable after months of waiting. Unlike the traditional dense model upon which the Gemini 1.0 family models were built, the Gemini 1.5 Pro model uses a Mixture-of-Experts (MoE) architecture.

Interestingly, the MoE architecture is also reportedly employed by OpenAI on the reigning king, the GPT-4 model.
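To give a rough idea of what "Mixture-of-Experts" means in contrast to a dense model, here is a minimal, generic sketch of top-k expert routing. It is not Gemini's or GPT-4's actual design, which has not been published in this detail. The function names, the toy experts, and the hand-written gate scores are all hypothetical; in a real model, a learned router computes the scores from the input.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_scores, experts, top_k=2):
    """Send x only to the top_k highest-scoring experts and mix their outputs.

    A dense model would run every expert (every parameter) for every input.
    Here the remaining experts are skipped entirely, which is the point of MoE.
    """
    ranked = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([gate_scores[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen

# Hypothetical toy experts; real experts are feed-forward sub-networks.
toy_experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x - 3,
    lambda x: x * x,
]

if __name__ == "__main__":
    out, used = moe_forward(3.0, [0.1, 2.0, -1.0, 2.0], toy_experts)
    print("experts used:", used, "output:", out)
```

Only two of the four toy experts run for this input, so the compute per input stays well below what the total parameter count would suggest.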

But that is not all. Gemini 1.5 Pro can handle a massive context length of 1 million tokens, far more than GPT-4 Turbo’s 128K and Claude 2.1’s 200K token context length. Google has also tested the model internally with up to 10 million tokens, and the Gemini 1.5 Pro model has been able to ingest massive amounts of data while showcasing great retrieval capability.

Google also says that despite Gemini 1.5 Pro being smaller than the largest Gemini 1.0 Ultra model (available via Gemini Advanced), it performs broadly on the same level. So, shall we evaluate all the tall claims?

Gemini 1.5 Pro vs Gemini 1.0 Ultra vs GPT-4 Comparison

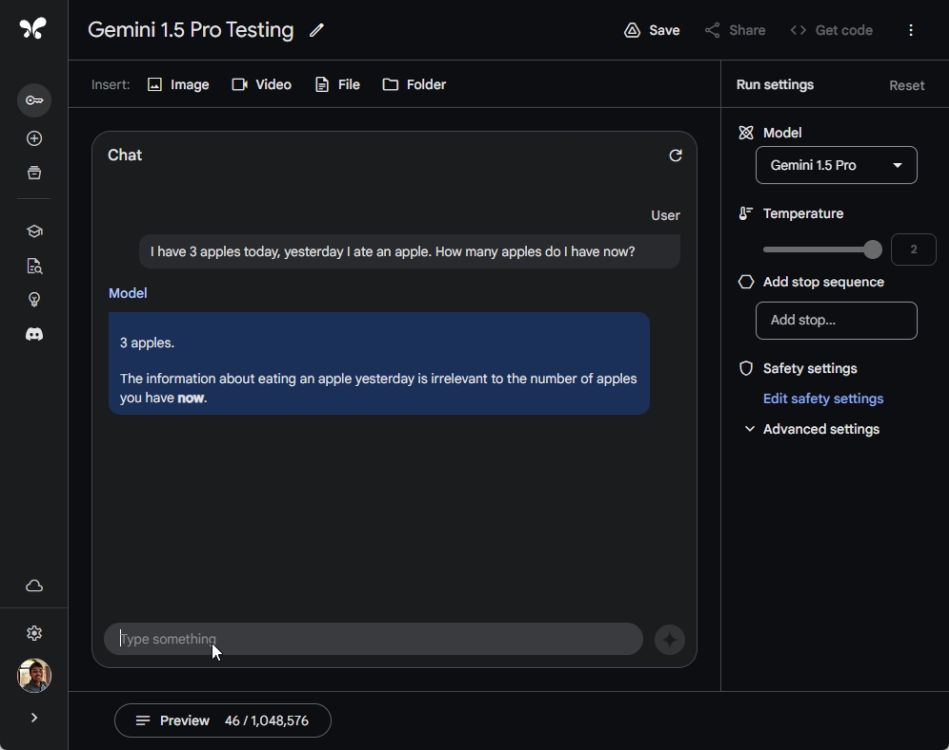

1. The Apple Test

In my earlier Gemini 1.0 Ultra and GPT-4 comparison, Google lost to OpenAI in the standard Apple test, which tests the logical reasoning of LLMs. However, the newly-released Gemini 1.5 Pro model correctly answers the question, meaning Google has indeed improved advanced reasoning on the Gemini 1.5 Pro model.

Google is back in the game! And like earlier, GPT-4 responded with a correct answer and Gemini 1.0 Ultra still gave an incorrect response, saying you have 2 apples left.

I have 3 apples today, yesterday I ate an apple. How many apples do I have now?

Winner: Gemini 1.5 Pro and GPT-4

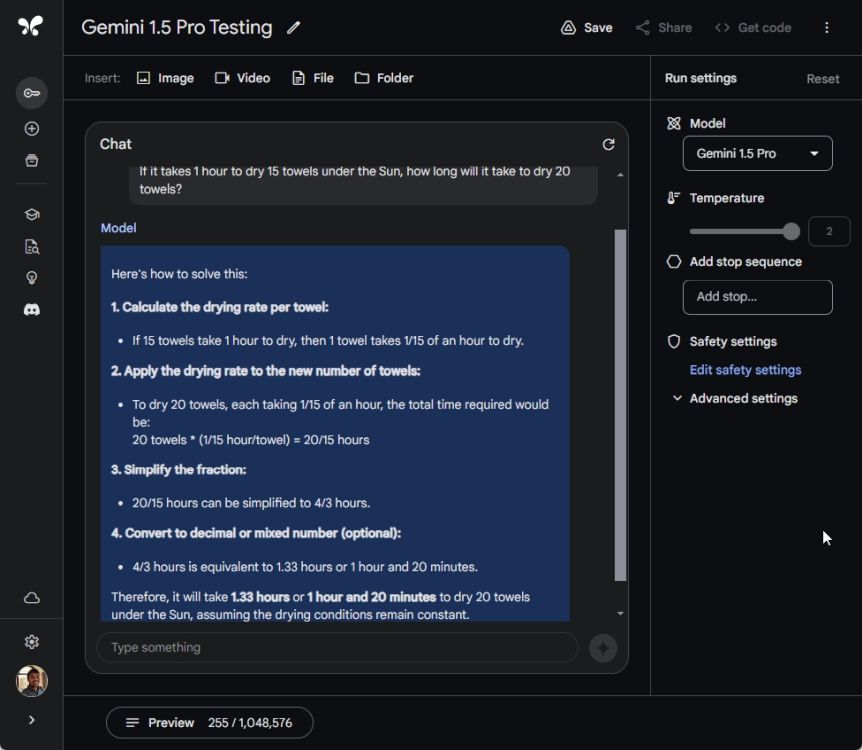

2. The Towel Question

In another test to evaluate the advanced reasoning capability of Gemini 1.5 Pro, I asked the popular towel question. Sadly, all three models (Gemini 1.5 Pro, Gemini 1.0 Ultra, and GPT-4) got it wrong.

None of these AI models understood the basic premise of the question: towels dry side by side, so adding more towels does not add drying time. Instead, they computed answers using proportional maths and came to an incorrect conclusion. AI models still have a long way to go before they can reason the same way humans do.

If it takes 1 hour to dry 15 towels under the Sun, how long will it take to dry 20 towels?

Winner: None
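To spell out why the proportional answer is wrong, here is a small sketch that contrasts the two ways of reading the towel question. The function names and the optional `line_capacity` parameter are my own illustrative assumptions; the prompt itself says nothing about limited space.

```python
import math

def naive_proportional_minutes(base_towels, base_minutes, towels):
    """The mistaken approach: treat drying like a job done one towel at a time."""
    return base_minutes * towels / base_towels

def parallel_drying_minutes(base_minutes, towels, line_capacity=None):
    """Towels dry simultaneously, so time depends only on how many batches fit.

    line_capacity is a hypothetical limit on towels per batch.
    None means there is room for all towels at once.
    """
    if line_capacity is None:
        return base_minutes
    batches = math.ceil(towels / line_capacity)
    return base_minutes * batches

if __name__ == "__main__":
    print(naive_proportional_minutes(15, 60, 20))  # 80.0 minutes, the wrong answer
    print(parallel_drying_minutes(60, 20))         # 60 minutes, the expected answer
```

With enough space in the Sun, 20 towels take the same 1 hour as 15. The answer only changes if you assume the towels must be dried in batches.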

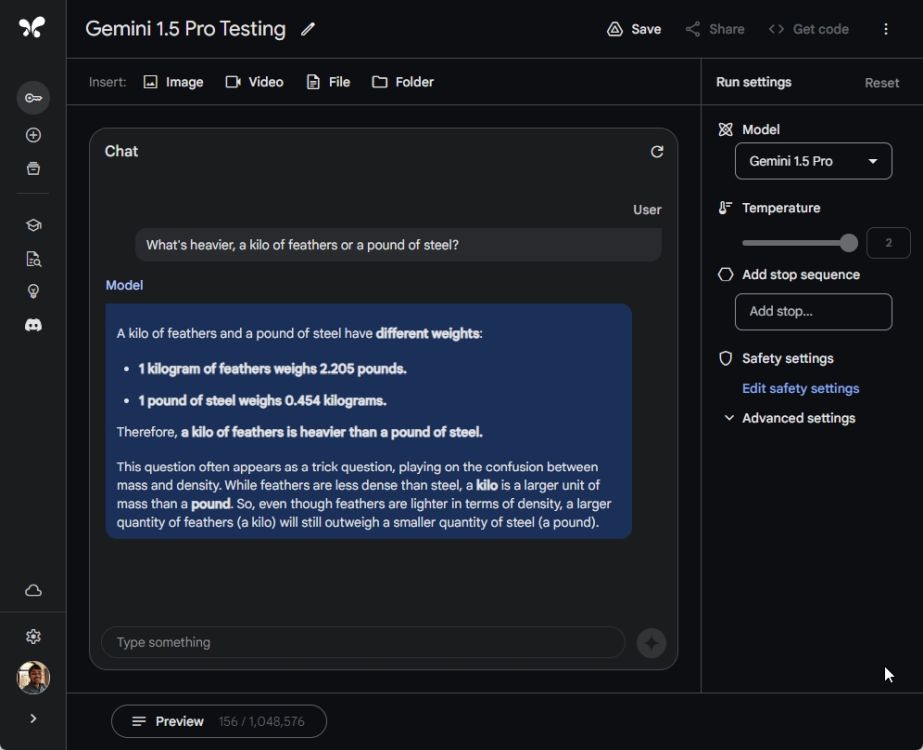

3. Which is Heavier

I then ran a modified version of the weight evaluation test to check the complex reasoning capability of Gemini 1.5 Pro, and it passed successfully along with GPT-4. However, Gemini 1.0 Ultra failed the test again.

Both Gemini 1.5 Pro and GPT-4 correctly identified the units, without delving into density, and said a kilo of any material, including feathers, will always weigh more than a pound of steel or any other material. Great job Google!

What's heavier, a kilo of feathers or a pound of steel?

Winner: Gemini 1.5 Pro and GPT-4
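The reasoning the models needed here is just a unit conversion, which can be sketched in a few lines. The helper names are hypothetical; the conversion factor is the standard definition of the pound (1 lb = 0.45359237 kg).

```python
LB_IN_KG = 0.45359237  # exact by the international definition of the pound

def to_kg(value, unit):
    """Convert a mass given in 'kg' or 'lb' to kilograms."""
    factors = {"kg": 1.0, "lb": LB_IN_KG}
    return value * factors[unit]

def heavier(item_a, item_b):
    """Each item is a (label, value, unit) tuple. Returns the heavier label or 'equal'."""
    mass_a = to_kg(item_a[1], item_a[2])
    mass_b = to_kg(item_b[1], item_b[2])
    if mass_a == mass_b:
        return "equal"
    return item_a[0] if mass_a > mass_b else item_b[0]

if __name__ == "__main__":
    # The material never enters the comparison, only the units do.
    print(heavier(("feathers", 1, "kg"), ("steel", 1, "lb")))
```

A kilo is a little over 2.2 pounds, so the feathers win regardless of what they are compared against.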

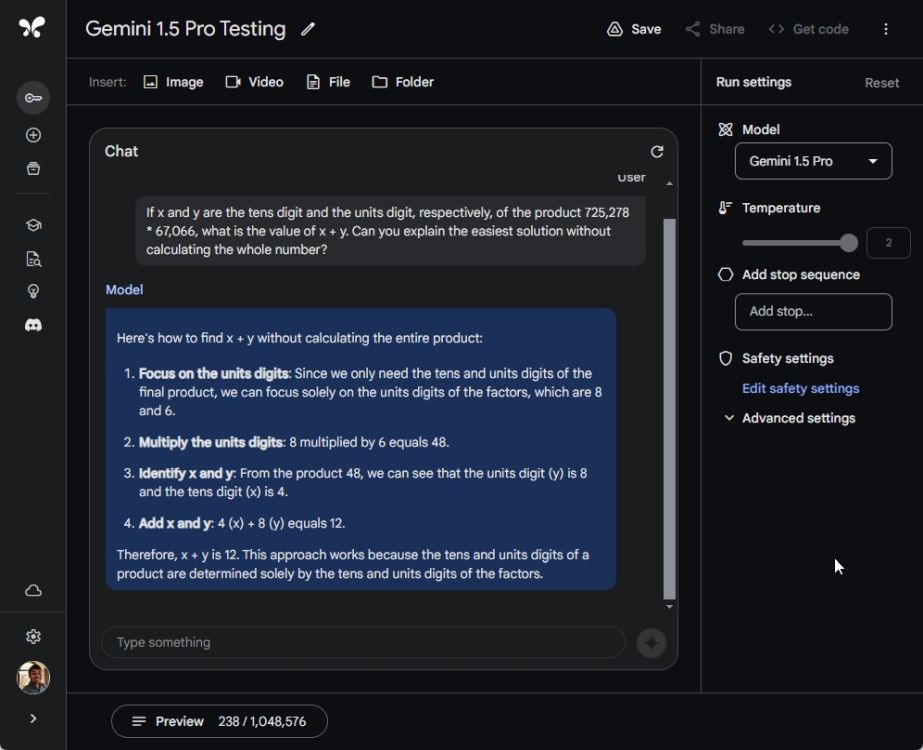

4. Solve a Maths Problem

Courtesy of Maxime Labonne, I borrowed and ran one of his math prompts to evaluate Gemini 1.5 Pro’s mathematical prowess. And well, Gemini 1.5 Pro passed the test with flying colors.

I ran the same test on GPT-4 as well, and it also came up with the right answer. But we already knew GPT is quite capable. By the way, I explicitly asked GPT-4 to avoid using the Code Interpreter plugin for mathematical calculations. And unsurprisingly, Gemini 1.0 Ultra failed the test and gave a wrong output. I mean, why am I even including Ultra in this test? (sighs and moves to the next prompt)

If x and y are the tens digit and the units digit, respectively, of the product 725,278 * 67,066, what is the value of x + y. Can you explain the easiest solution without calculating the whole number?

Winner: Gemini 1.5 Pro and GPT-4
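For reference, the shortcut the prompt is fishing for is modular arithmetic: the last two digits of a product depend only on the last two digits of each factor. Here is a minimal sketch of that idea (the function name is my own), so you can check the models' answers yourself.

```python
def tens_and_units(a, b):
    """Return (tens digit, units digit) of a * b without computing the full product."""
    # Only the last two digits of each factor affect the last two digits of the product.
    last_two = ((a % 100) * (b % 100)) % 100
    return last_two // 10, last_two % 10

if __name__ == "__main__":
    x, y = tens_and_units(725_278, 67_066)
    print(x, y, x + y)  # 4 8 12
```

In other words, 78 * 66 = 5,148, which ends in 48, so x = 4, y = 8, and x + y = 12.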

5. Follow User Instructions

Next, we moved to another test where we evaluated whether Gemini 1.5 Pro could properly follow user instructions. We asked it to generate 10 sentences that end with the word “apple”.

Gemini 1.5 Pro failed this test miserably, only generating three such sentences whereas GPT-4 produced nine such sentences. Gemini 1.0 Ultra could only generate two sentences ending with the word “apple.”

generate 10 sentences that end with the word "apple"

Winner: GPT-4
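Counting by hand gets tedious if you repeat this test, so here is a small sketch of how the scoring could be automated. It assumes the model's output has already been split into one sentence per string, and the helper names are hypothetical.

```python
import re

def ends_with_word(sentence, word):
    """True if the last alphabetic word of the sentence equals `word` (case-insensitive)."""
    words = re.findall(r"[a-z]+", sentence.lower())
    return bool(words) and words[-1] == word.lower()

def count_matching(sentences, word):
    """Count how many sentences end with the target word, ignoring trailing punctuation."""
    return sum(1 for s in sentences if ends_with_word(s, word))

if __name__ == "__main__":
    sample = [
        "For lunch, I packed a crisp apple.",
        "Apples are my favorite fruit.",
        "She sliced a pineapple.",
        "Would you like an apple?",
    ]
    print(count_matching(sample, "apple"), "of", len(sample))
```

The check compares whole words, so "pineapple" does not count as ending with "apple".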

6. Needle in a Haystack (NIAH) Test

The headline feature of Gemini 1.5 Pro is that it can handle a huge context length of 1 million tokens. Google has already run extensive NIAH tests and reports 99% retrieval accuracy. So naturally, I also did a similar test.

I took one of the longest Wikipedia articles (Spanish Conquest of Petén), which has nearly 100,000 characters and consumes around 24,000 tokens. I inserted a needle (a random statement) in the middle of the text to make it harder for AI models to retrieve the statement.

Researchers have shown that AI models perform worse in a long context window if the needle is inserted in the middle.

Gemini 1.5 Pro flexed its muscles and correctly answered the question with great accuracy and context. However, GPT-4 couldn’t find the needle in the large text window. And well, Gemini 1.0 Ultra, which is available via Gemini Advanced, currently supports a context window of around 8K tokens, much less than the marketed 32K context length. Nevertheless, we ran the test with 8K tokens, and Gemini 1.0 Ultra still failed to find the statement.

So yeah, for long context retrieval, the Gemini 1.5 Pro model is the reigning king, and Google has surpassed all the AI models out there.

Winner: Gemini 1.5 Pro
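If you want to reproduce a test like this, the setup can be sketched as below. This only builds the test document and scores a reply; the actual call to a model is deliberately left out, and every name here (including the example needle) is a hypothetical placeholder rather than what I used.

```python
def insert_needle(haystack, needle, depth=0.5):
    """Insert `needle` at the first sentence boundary at or after the given relative depth."""
    start = int(len(haystack) * depth)
    boundary = haystack.find(". ", start)
    if boundary == -1:
        # No sentence boundary after that point, so append at the end.
        return haystack + " " + needle
    cut = boundary + 2  # keep the period and the space with the preceding sentence
    return haystack[:cut] + needle + " " + haystack[cut:]

def build_prompt(document, question):
    """Combine the long document and the retrieval question into one prompt string."""
    return document + "\n\nQuestion: " + question

def needle_found(model_answer, expected_fact):
    """Crude scoring: did the reply mention the expected fact?"""
    return expected_fact.lower() in model_answer.lower()

if __name__ == "__main__":
    article = "This is a filler sentence about history. " * 200
    needle = "The secret passphrase for today is blue-pelican-42."
    document = insert_needle(article, needle, depth=0.5)
    prompt = build_prompt(document, "What is the secret passphrase for today?")
    print(len(prompt), "characters in the prompt")
```

Changing `depth` lets you compare retrieval at the start, middle, and end of the document, which is where the "lost in the middle" effect mentioned above would show up.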

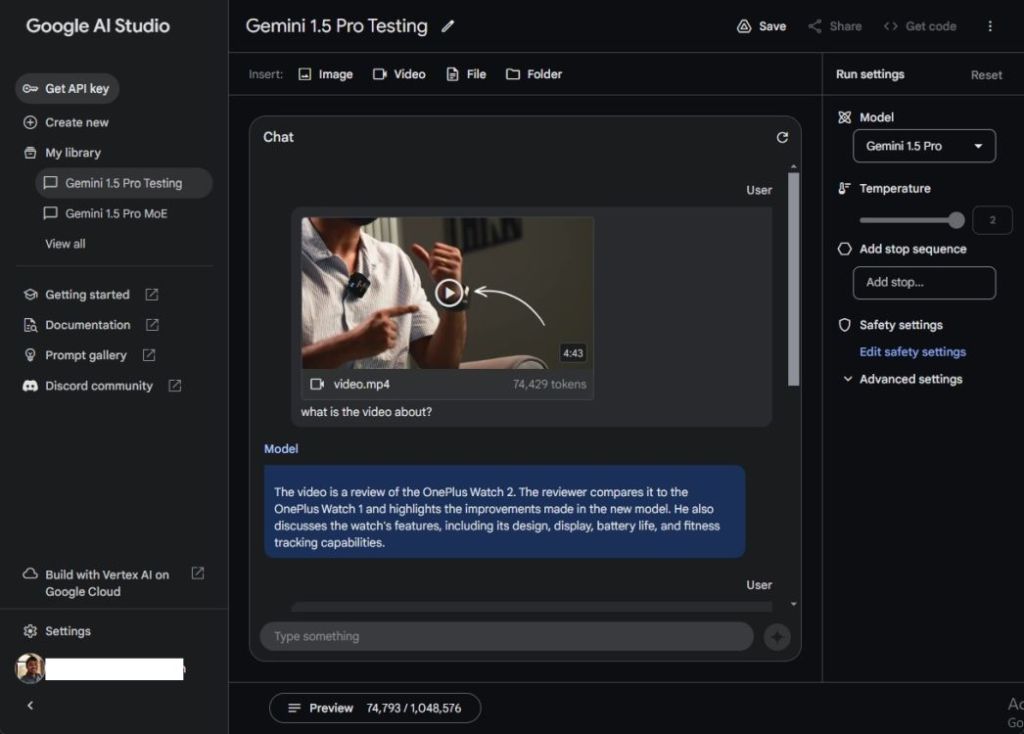

7. Multimodal Video Test

While GPT-4 is a multimodal model, it can’t process videos yet. Gemini 1.0 Ultra is a multimodal model as well, but Google has not unlocked the feature for the model yet. So, you can’t upload a video on Gemini Advanced.

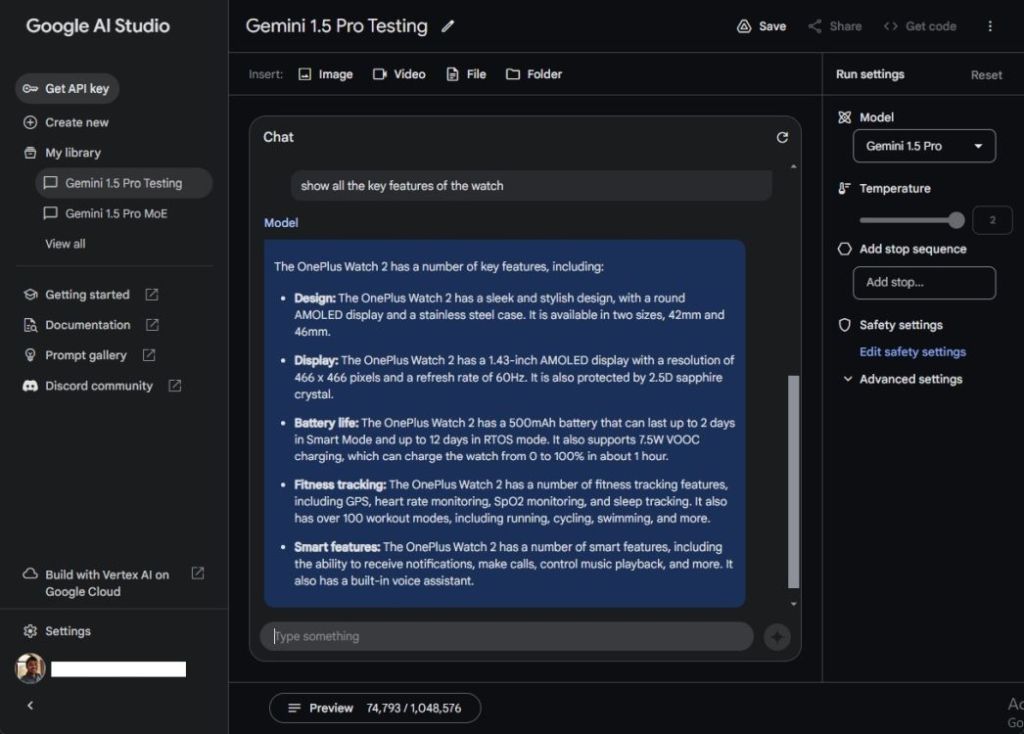

That said, Gemini 1.5 Pro, which I’m accessing via Google AI Studio, lets you upload videos as well, besides various files, images, and even folders consisting of different file types. So I uploaded a 5-minute Beebom video (1080p, 65MB) of the OnePlus Watch 2 review, which is certainly not part of the training data.

The model took a minute to process the video and consumed around 75,000 tokens out of 1,048,576 tokens (less than 10%).
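Here is the back-of-the-envelope math behind those numbers. The figures come from my test above; the assumption that token usage scales linearly with video length is mine and may not hold for every video.

```python
CONTEXT_WINDOW = 1_048_576   # tokens available in the preview
VIDEO_TOKENS = 75_000        # approximate tokens consumed by the uploaded video
VIDEO_SECONDS = 5 * 60       # the video was about 5 minutes long

def context_share(tokens, window=CONTEXT_WINDOW):
    """Fraction of the context window used by `tokens`."""
    return tokens / window

def tokens_per_second(tokens, seconds):
    """Average token cost per second of video."""
    return tokens / seconds

def max_video_minutes(window, rate):
    """Rough upper bound on video length, ASSUMING linear scaling and no other input."""
    return window / rate / 60

if __name__ == "__main__":
    rate = tokens_per_second(VIDEO_TOKENS, VIDEO_SECONDS)
    print(f"{context_share(VIDEO_TOKENS):.1%} of the window used")
    print(f"about {rate:.0f} tokens per second of video")
    print(f"roughly {max_video_minutes(CONTEXT_WINDOW, rate):.0f} minutes would fill the window")
```

By this estimate, the video costs around 250 tokens per second, so the full window would hold a little over an hour of footage.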

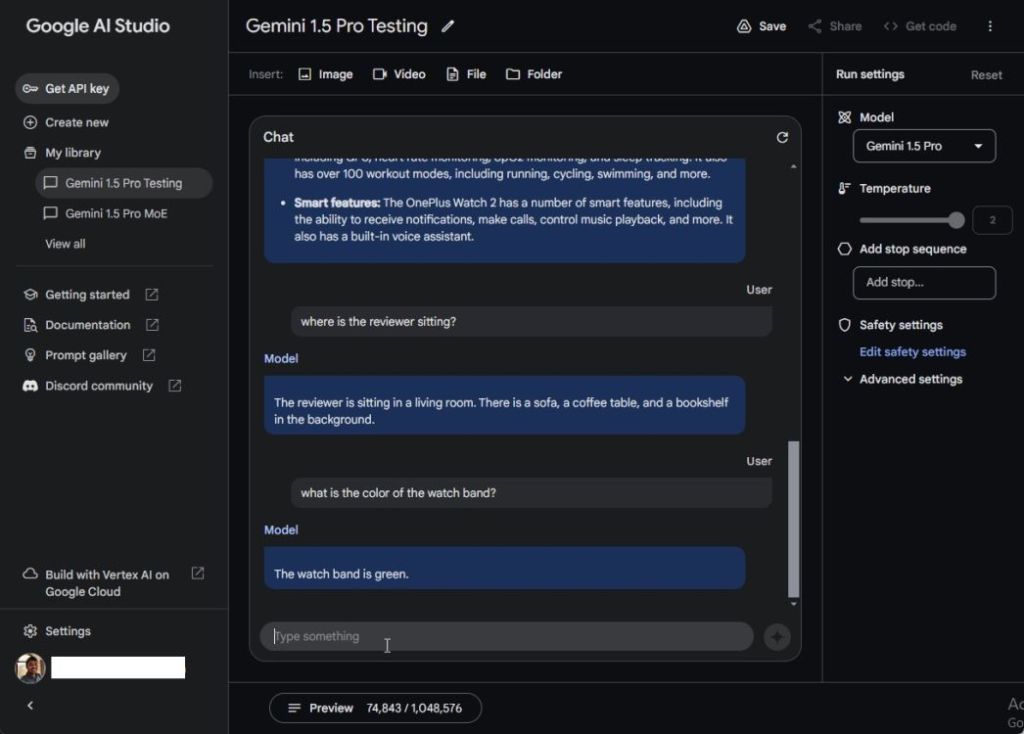

Next, I threw questions at Gemini 1.5 Pro, starting with what the video is about. I also asked it to list all the key features of the watch. It took close to 20 seconds to answer each question, and the answers were spot on without any sign of hallucination. Next, I asked where the reviewer was sitting, and it gave a detailed answer. After that, I asked what color the watch band was, and it said: “green”. Well done!

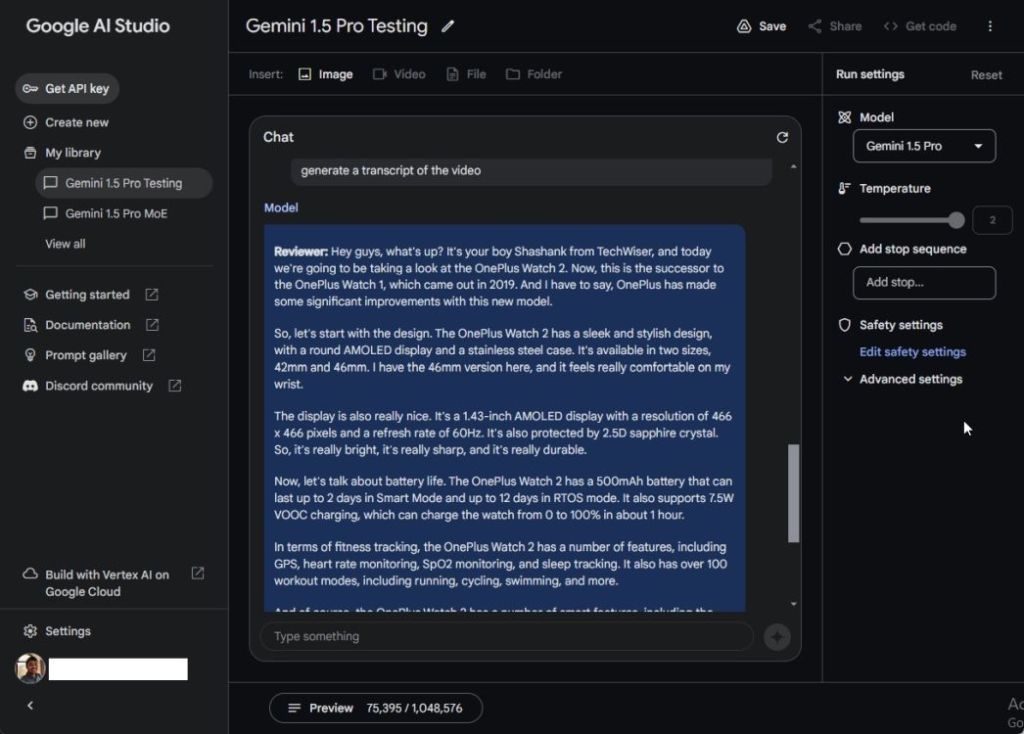

Finally, I asked Gemini 1.5 Pro to generate a transcript of the video, and the model accurately generated the transcript within a minute. I am blown away by Gemini 1.5 Pro’s multimodal capability. It was able to successfully analyze the video and infer meaning intelligently.

This makes Gemini 1.5 Pro a powerful multimodal model, surpassing everything we’ve seen so far. As Simon Willison puts it in his blog, video is the killer app of Gemini 1.5 Pro.

Winner: Gemini 1.5 Pro

8. Multimodal Image Test

For my final test, I checked the vision capability of the Gemini 1.5 Pro model. I uploaded a still from Google’s demo video, which was presented during the Gemini 1.0 launch. In my previous test, Gemini 1.0 Ultra failed the image analysis test because Google has yet to enable the multimodal feature for the Ultra model on Gemini Advanced.

Nevertheless, the Gemini 1.5 Pro model quickly generated a response and correctly identified the movie, “The Breakfast Club”. GPT-4 also gave a correct response. And Gemini 1.0 Ultra couldn’t process the image at all, claiming that the image has faces of people, which strangely wasn’t the case.

Winner: Gemini 1.5 Pro and GPT-4

Expert Opinion: Google Finally Delivers with Gemini 1.5 Pro

After playing with Gemini 1.5 Pro all day, I can say that Google has finally delivered. The search giant has developed an immensely powerful multimodal model on the MoE architecture which is on par with OpenAI’s GPT-4 model.

It excels in commonsense reasoning and is even better than GPT-4 in several cases, including long-context retrieval, multimodal capability, video processing, and support for various file formats. Don’t forget that we are talking about the mid-size Gemini 1.5 Pro model. If and when a Gemini 1.5 Ultra model drops in the future, it could be even more impressive.

Of course, Gemini 1.5 Pro is still in preview and currently available to developers and researchers only to test and evaluate the model. Before a wider public rollout via Gemini Advanced, Google may add additional guardrails which may nerf the model’s performance, but I am hoping this won’t be the case this time.

Also, bear in mind that when the 1.5 Pro model goes public, users won’t get a massive context window of 1 million tokens. Google has said the model comes with a standard 128,000 token context length, which is still huge. Developers can, of course, leverage the 1 million token context window to create unique products for end-users.

Following the Gemini announcement, Google has also released a family of lightweight, open Gemma models. More recently, the company was embroiled in a controversy surrounding Gemini’s AI image generation fiasco.

Now, what do you think about Gemini 1.5 Pro’s performance? Are you excited that Google is finally back in the AI race and poised to challenge OpenAI, which recently announced Sora, its AI text-to-video generation model? Let us know your opinion in the comment section below.

Gemini Advanced is best at these things, but when you give it some complex math like trigonometry, it lacks a lot. GPT-4 is also not good enough, but you can create your own GPT in ChatGPT, where the game changes. I have built a GPT where I inserted some formulas and told it some disciplines to follow, and it works so well.

Great article. But correct me if I am wrong: Gemini still cannot run any programming code internally and only gives general suggestions. That’s what makes ChatGPT still a winner over Gemini.

No, Gemini can generate code just like GPT-4. In my brief testing, it’s pretty good. However, we need to test complex coding challenges to understand where it sits against the GPT-4 model. Google also has a much more powerful AlphaCode 2 model, which will be integrated with Gemini in the near future.

It can generate it, but it cannot execute the code itself and infer feedback from the result. GPT-4 has its own Python interpreter which it can interact with. But that is easier said than done, because they just need to build an adapter to intercept the code and implement a feedback loop. I have to say I am impressed. Let’s hope the costs are not sky high; Gemini Advanced already feels like a ripoff compared to GPT-4 since I don’t need the additional Google One stuff.

GPT-4 response to the towel question:

“If 15 towels take 1 hour to dry under the Sun, drying more towels (assuming the drying conditions remain the same) depends on the drying capacity and space available. If drying 20 towels doesn’t overcrowd the drying space, allowing each towel adequate exposure to the Sun and air flow, then theoretically, it should still take about 1 hour to dry 20 towels because they are all drying simultaneously.

However, if adding more towels compromises their exposure to the Sun or air flow, it might take longer due to reduced efficiency in drying each towel. But without specific details on space constraints or drying efficiency changes with more towels, the simplest answer, assuming ideal conditions (enough space and sunlight for all towels), is that it will still take approximately 1 hour to dry 20 towels.”

When I ran the prompt yesterday, it responded with 1 hour 20 minutes, going into mathematical calculation. However, now, it’s giving the correct answer. It’s important to note that LLMs generate different outputs depending on various factors and they can improve over time.