- Nvidia has released Chat with RTX, a new tool that runs locally and works even without an internet connection.

- Make your own AI chatbot with this Nvidia app, feeding it data like YouTube videos, Word documents, and PDFs.

- An RTX 30 or RTX 40 Series GPU with at least 8GB of VRAM is required, along with 16GB of RAM.

If your PC is powered by a relatively new Nvidia graphics card, you can try out the company’s latest AI tool called Chat with RTX. It brings generative AI features to Windows PCs, relying on “Nvidia RTX acceleration,” among other things.

Chat with RTX is touted as “local, fast, custom generative AI” for Windows PCs. Because it runs on your own machine, user privacy should be better than with cloud options like Bing Chat, Google Bard, and ChatGPT. And if you’re wondering whether Nvidia’s Chat with RTX tool works offline, yes, it does!

There is a certain advantage to using a locally run AI chatbot like this on your PC as compared to cloud-based AI chatbots typically used by everyone today. Nvidia says “provided results are fast — and the user’s data stays on the device.”

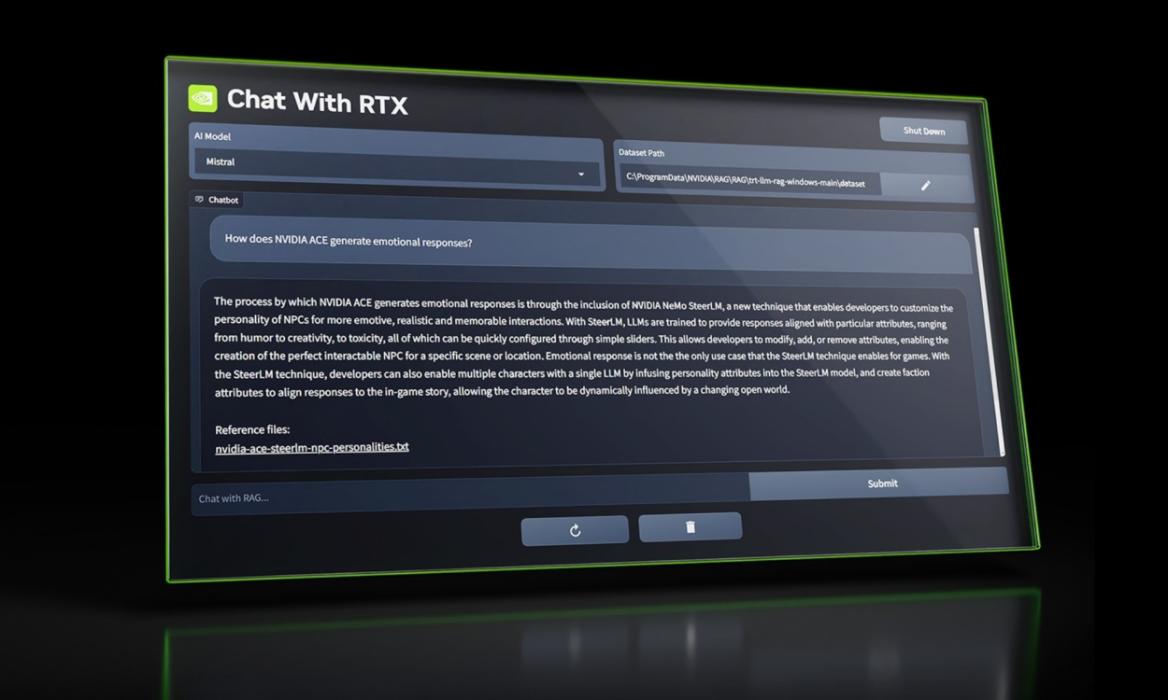

You can give it your own dataset by pointing it at local files on your PC. Various file formats are supported, including PDFs, plain text files, and Word documents. You can even give this AI chatbot knowledge from YouTube videos by adding the video link in the software’s settings!

Nvidia Chat with RTX relies on retrieval-augmented generation (RAG), a technique that improves the accuracy of LLMs by retrieving relevant passages from your data and supplying them to the model alongside your question. It also uses TensorRT-LLM software and your GPU hardware to run on your Windows 10 or 11 PC. After feeding Chat with RTX various documents, notes, and videos, you can interact with that information through a simple AI chatbot interface!
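To make the RAG idea a little more concrete, here is a minimal sketch of the retrieval step in Python. It is not how Chat with RTX is implemented: it assumes a toy word-count similarity instead of a real embedding model, and the function names (`retrieve`, `build_prompt`) are made up for illustration. The point is only to show that the chatbot first picks the most relevant piece of your data and then hands it to the language model together with the question.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase the text and split it into simple alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    # Cosine similarity between two word-count vectors (Counter objects).
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=1):
    # Rank documents by similarity to the question and keep the best matches.
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, documents, top_k=1):
    # Hypothetical prompt layout: retrieved context first, then the question.
    context = "\n".join(retrieve(question, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


notes = [
    "The meeting is on Friday at noon.",
    "The GPU needs 8GB of VRAM.",
]
print(build_prompt("How much VRAM does the GPU need?", notes))
```

In a real RAG system the prompt built here would be passed to an LLM such as Mistral or Llama 2, and the similarity search would typically run over learned embeddings rather than raw word counts.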

Chat with RTX: System Requirements & Where to Download

Since it is an AI chatbot that you run locally, the download is quite large at 35.1GB at the time of writing. Two large language models are included in the download: Mistral and Llama 2. You can download Chat with RTX from Nvidia’s website.

After the download is complete, you can extract the compressed archive and then proceed to install Chat with RTX. However, there are certain prerequisites that you need to fulfill.

Windows PCs powered by Nvidia GeForce RTX graphics cards can run the new Chat with RTX AI tool as long as they meet certain hardware requirements. Nvidia says this is a “demo app,” so there may be a more featureful version coming later. System requirements for the Chat with RTX AI tool are:

- 16GB RAM (or more)

- Nvidia RTX 30 series or RTX 40 Series graphics card (with 8GB+ VRAM capacity)

- GeForce Driver v535.11 or later

- Windows 10 or 11
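If you want to sanity-check a PC against the list above, the requirements can be written down as a small Python function. This is only a sketch: it does not detect your hardware, so you have to pass in the values yourself, and the function and constant names are hypothetical. It also assumes driver versions compare as dotted numbers (so 546.33 is newer than 535.11).

```python
import re

# Minimums taken from the requirements list above.
MIN_RAM_GB = 16
MIN_VRAM_GB = 8
MIN_DRIVER = (535, 11)
SUPPORTED_SERIES = {30, 40}
SUPPORTED_WINDOWS = {10, 11}


def gpu_series(gpu_name):
    # Return 30 for "RTX 3060", 40 for "RTX 4090", or None if no match.
    match = re.search(r"RTX\s*(\d{4})", gpu_name, re.IGNORECASE)
    if not match:
        return None
    return int(match.group(1)) // 1000 * 10


def parse_driver(version):
    # Turn "535.11" into (535, 11) so versions compare numerically.
    return tuple(int(part) for part in version.split("."))


def unmet_requirements(ram_gb, vram_gb, gpu_name, driver, windows_version):
    # Collect a human-readable message for each requirement that fails.
    problems = []
    if ram_gb < MIN_RAM_GB:
        problems.append("At least 16GB of RAM is required")
    if gpu_series(gpu_name) not in SUPPORTED_SERIES:
        problems.append("An RTX 30 or RTX 40 Series GPU is required")
    if vram_gb < MIN_VRAM_GB:
        problems.append("At least 8GB of VRAM is required")
    if parse_driver(driver) < MIN_DRIVER:
        problems.append("GeForce Driver 535.11 or later is required")
    if windows_version not in SUPPORTED_WINDOWS:
        problems.append("Windows 10 or 11 is required")
    return problems


# Example: a hand-entered description of one PC.
for line in unmet_requirements(16, 12, "GeForce RTX 4070", "546.33", 11) or ["All requirements met"]:
    print(line)
```

An empty list means every requirement in the list is satisfied; otherwise each entry names one thing to upgrade.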

What are your thoughts on Nvidia’s new AI chatbot? Have you tried it out yet? Let us know in the comments below! It is super appealing to run your own AI chatbot powered by the GeForce RTX graphics card, and we are going to be testing this tool in the coming days.