While facial recognition AI is already helping law enforcement authorities nab suspects in crowds, isolating individual audio signals in a noisy environment remains a real challenge, and in some cases is close to impossible. The main obstacle is the nature of sound itself: in a loud space with multiple audio sources, the sound waves overlap and mix into a single signal. However, Google has developed a new AI system that can separate an audio signal into its individual speech sources and isolate each speaker's voice from the background noise.

Although its goal is purely audio-related, Google’s AI-based sound isolation system uses an audio-visual speech separation technique, which lets it separate and amplify the voice of a single speaker while suppressing the background noise.

“We believe this capability can have a wide range of applications, from speech enhancement and recognition in videos, through video conferencing, to improved hearing aids, especially in situations where there are multiple people speaking,” Google wrote in its official research blog post describing where the system could be applied.

What makes Google’s system unique is that it analyses both the audio and the visual signals in a video, including the lip movements of each subject, to separate individual voices from their surroundings more accurately, especially when several people are speaking at once.

To train the model, Google collected over 100,000 high-quality lecture and talk videos from YouTube, amounting to over 2,000 hours of footage, all with minimal background noise, so that the system could learn the correlation between speech sounds and the corresponding lip movements.

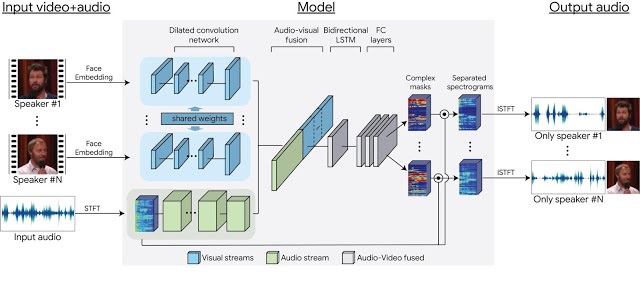

These clean videos were then mixed together, combining the faces and voices of different speakers with background noise, to produce synthetic “cocktail party” videos. The resulting dataset was used for “training a multi-stream convolutional neural network-based model to split the synthetic cocktail mixture into separate audio streams for each speaker in the video.”
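As a rough illustration of the mixing idea, here is a minimal sketch, assuming NumPy, of how one synthetic training example might be assembled: clean clips from two speakers and some background noise are summed into a single mixture, while the clean clips are kept as the targets the model learns to recover. The function name `make_cocktail_mixture`, the `noise_gain` value, the 16 kHz sample rate, and the sine-wave stand-ins for speech are all hypothetical, and the sketch leaves out the face video that the real dataset pairs with each audio track.

```python
import numpy as np

def make_cocktail_mixture(speech_a, speech_b, noise, noise_gain=0.3):
    """Sum two clean speech clips and scaled noise into one synthetic mixture.

    Hypothetical helper: returns the mixture plus the clean targets
    that a separation model would be trained to recover.
    """
    # Trim all signals to the shortest length so they can be added sample by sample.
    n = min(len(speech_a), len(speech_b), len(noise))
    a, b, z = speech_a[:n], speech_b[:n], noise[:n]
    mixture = a + b + noise_gain * z
    return mixture, (a, b)

rng = np.random.default_rng(0)
sr = 16000  # assumed sample rate in Hz
t = np.arange(sr) / sr
speech_a = 0.5 * np.sin(2 * np.pi * 220 * t)              # stand-in for speaker A
speech_b = 0.5 * np.sin(2 * np.pi * 330 * t[: sr // 2])   # shorter stand-in for speaker B
noise = rng.standard_normal(sr)                           # stand-in for background noise

mixture, (target_a, target_b) = make_cocktail_mixture(speech_a, speech_b, noise)
print(mixture.shape)  # (8000,)
```

Because the clean sources are known exactly, every mixture comes with perfect ground-truth targets, which is what makes synthetic mixing attractive for training.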

The system eventually learned to encode the visual and audio signals, fuse them, and produce a time-frequency mask for each speaker. Each mask is then multiplied by the spectrogram of the noisy input, and the result is converted back into a clean audio waveform for that speaker. In practice, this means one can simply select a speaker in a group of people and suppress everyone else’s voices to get clear audio from the selected subject.
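The masking step can be sketched in a few lines. This is a minimal illustration, assuming NumPy and SciPy's `scipy.signal.stft`/`istft`, not Google's implementation: an "ideal ratio mask" computed from the known clean sources stands in for the mask the neural network would predict, and the sample rate, window length, and sine-wave "speakers" are illustrative assumptions.

```python
import numpy as np
from scipy.signal import stft, istft

SR = 16000     # assumed sample rate (Hz)
NPERSEG = 512  # assumed STFT window length in samples

def ratio_mask(target_spec, other_spec, eps=1e-8):
    """Ideal ratio mask: the share of each time-frequency bin owned by the target.

    Stand-in for the per-speaker mask a trained network would predict.
    """
    t_mag = np.abs(target_spec)
    return t_mag / (t_mag + np.abs(other_spec) + eps)

def apply_mask(mixture, mask):
    """Multiply the noisy mixture's spectrogram by a mask and invert it to audio."""
    _, _, mix_spec = stft(mixture, fs=SR, nperseg=NPERSEG)
    _, separated = istft(mask * mix_spec, fs=SR, nperseg=NPERSEG)
    return separated[: len(mixture)]  # drop the padding added by the STFT

t = np.arange(SR) / SR
speaker = np.sin(2 * np.pi * 300 * t)        # the "selected" speaker
others = 0.8 * np.sin(2 * np.pi * 2000 * t)  # everyone else, plus noise

_, _, s_spec = stft(speaker, fs=SR, nperseg=NPERSEG)
_, _, o_spec = stft(others, fs=SR, nperseg=NPERSEG)
mask = ratio_mask(s_spec, o_spec)

clean = apply_mask(speaker + others, mask)
```

Since the STFT is linear, the mixture's spectrogram is the sum of the two sources' spectrograms, so a mask close to 1 where the selected speaker dominates and close to 0 elsewhere recovers that speaker's waveform. In the real system the hard part is predicting such a mask from the fused audio and visual features alone.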

That does sound spooky, considering how much of Google’s technology is already used by the military and intelligence agencies for surveillance. On the other hand, it also means that in the future your Google Home may be able to pick out commands spoken near it, even at a noisy party.