- Qualcomm AI Hub finally brings support for over 75 optimized AI models to run on Snapdragon platforms including smartphones and PCs.

- It can run a 7B AI model locally to generate text and images, perform image segmentation and speech recognition, and more. It also supports multimodal AI models.

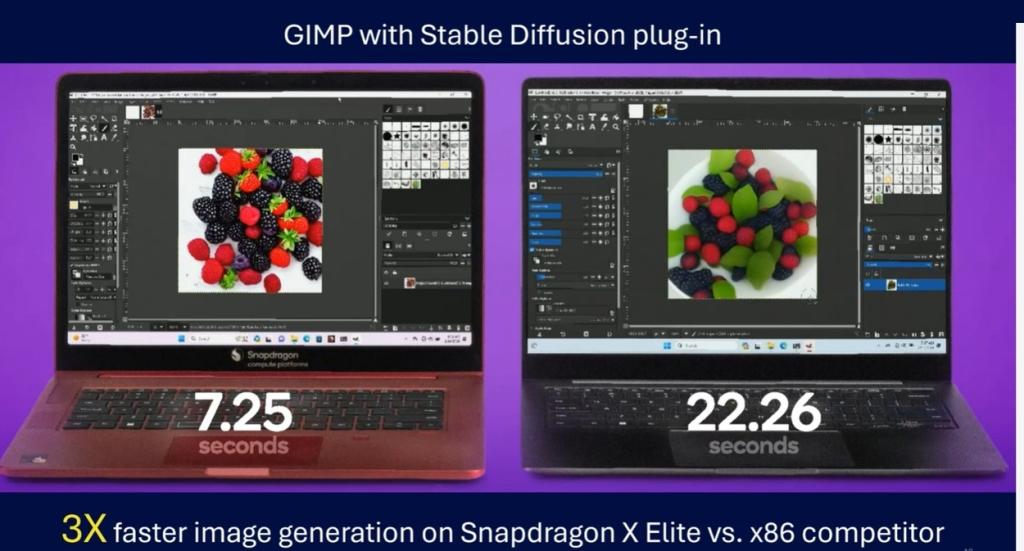

- Qualcomm says the upcoming Snapdragon X Elite chipset delivers 3x to 4x better AI performance than the latest x86-powered Intel Core Ultra chipsets.

At MWC 2024, Qualcomm finally launched its AI Hub to deliver on-device AI experiences across a range of devices. The American chip maker says its AI engine is powerful enough to offer intelligent computing on all platforms, from smartphones to PCs.

Qualcomm AI Hub has a library of fully optimized AI models you can download and run locally on your device. It also supports popular AI frameworks like Google’s TensorFlow and Meta AI’s PyTorch as part of the Qualcomm AI Stack.

Qualcomm AI Hub Has Optimized Over 75 AI Models

Qualcomm says its AI Hub has over 75 optimized AI models and delivers AI inferencing up to 4x faster than the competition. You can use these AI models for various tasks including image segmentation, image generation, text generation, low-light enhancement, super-resolution, speech recognition, and more. In addition, Qualcomm AI Hub can power multimodal AI models that can see, hear, and speak locally.

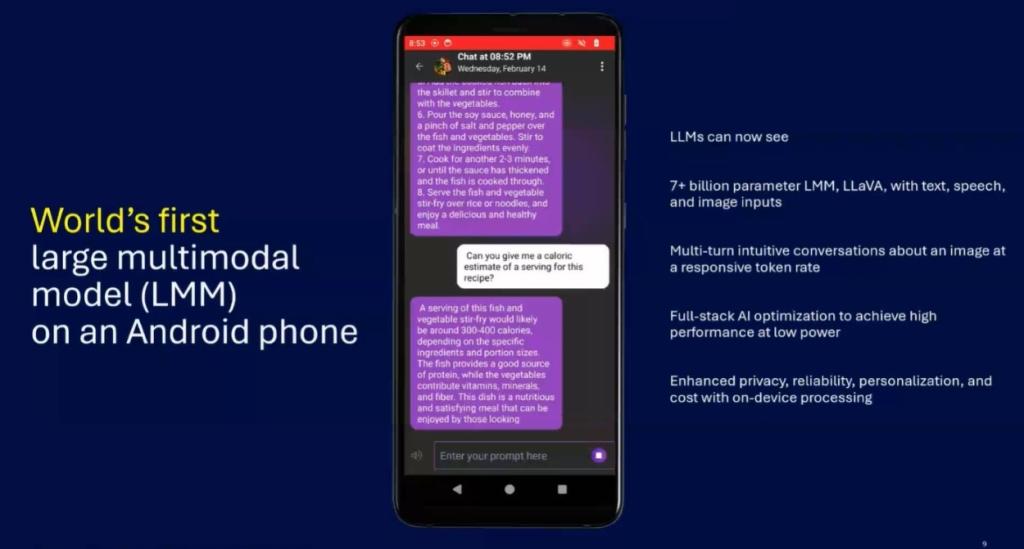

The chip giant said that it can run multimodal AI models with 7B+ parameters locally on Android devices. It can run models like LLaVA with text, speech, and image input support. The whole software stack has been optimized to deliver the best AI performance while consuming minimal power. Since everything is done locally, your privacy is preserved, and it also unlocks personalized AI experiences.

Even on Windows laptops running the upcoming Snapdragon X Elite chipset, you can speak to the model, and it can reason over the speech and generate output in no time. Qualcomm has also employed a technique called LoRA (Low-Rank Adaptation) with Stable Diffusion to generate AI images locally on the device. It can run the Stable Diffusion model with 1B+ parameters.
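For readers unfamiliar with LoRA, here is a minimal sketch of the general idea, not Qualcomm's implementation, which the company has not detailed here. LoRA leaves a large weight matrix W frozen and learns two small matrices B and A, so the effective weight becomes W + (alpha / rank) * B * A. The function names and the example sizes below are hypothetical and chosen only for illustration.

```python
# Illustrative LoRA sketch in plain Python (no external libraries).
# All names and sizes here are assumptions for demonstration only.

def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_out * d_in            # updating W directly
    lora = rank * (d_out + d_in)   # updating only B (d_out x rank) and A (rank x d_in)
    return full, lora

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def lora_effective_weight(w, b, a, alpha, rank):
    """Compute W + (alpha / rank) * (B @ A), the weight used at inference."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]

if __name__ == "__main__":
    full, lora = lora_param_counts(1024, 1024, 8)
    print(f"full update: {full} params, LoRA update: {lora} params")

    w = [[1, 0], [0, 1]]   # frozen base weight (2 x 2)
    b = [[1], [2]]         # low-rank factor B (2 x 1)
    a = [[3, 4]]           # low-rank factor A (1 x 2)
    print(lora_effective_weight(w, b, a, alpha=2, rank=1))
```

The appeal for on-device use is that the adapter is a small fraction of the base model's size, so a device can keep one base model in memory and swap in different small adapters.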

Qualcomm vs Intel: NPU Performance

To further prove Snapdragon X Elite’s on-device AI capability, Qualcomm ran an image generation test in GIMP using the Stable Diffusion plugin. The Snapdragon X Elite-powered laptop generated an AI image in just 7.25 seconds, whereas the latest x86-powered Intel Core Ultra processor took 22.26 seconds.

It’s important to note that Intel launched the Meteor Lake architecture (powering Core Ultra CPUs) with various compute tiles, in which the NPU tile (built on the Intel 4 node) is designed for on-device AI tasks. Despite that, the ARM-powered Snapdragon X Elite delivered about 3x better AI performance in this test, largely because its NPU alone can do 45 TOPS. With the CPU, GPU, and NPU combined, compute performance reaches up to 75 TOPS.
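As a quick sanity check on the "3x" figure, the ratio can be worked out from the two GIMP generation times Qualcomm reported (7.25 seconds and 22.26 seconds). The helper name below is hypothetical, and the calculation covers only this single test, not AI performance in general.

```python
# Speedup implied by the reported Stable Diffusion generation times.
# The timings come from Qualcomm's demo; the helper name is illustrative.

def speedup(baseline_seconds, candidate_seconds):
    """Return how many times faster the candidate is than the baseline."""
    return baseline_seconds / candidate_seconds

INTEL_CORE_ULTRA_SECONDS = 22.26
SNAPDRAGON_X_ELITE_SECONDS = 7.25

ratio = speedup(INTEL_CORE_ULTRA_SECONDS, SNAPDRAGON_X_ELITE_SECONDS)

if __name__ == "__main__":
    print(f"Snapdragon X Elite was {ratio:.2f}x faster in this test")
```

The result lands just above 3x, which matches the figure quoted for this benchmark.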

Finally, Qualcomm is also working to bring hybrid AI experiences, where AI processing tasks are distributed across local compute and cloud resources to offer better performance. You can check out Qualcomm AI Hub on Qualcomm’s website.

So what do you think about Qualcomm AI Hub? Will it spur novel on-device generative AI experiences? Let us know in the comment section below.

Qualcomm doesn’t actually allow people to use the NPU feature. I tried, and it told me my NPU was locked. So obviously it’s just something to get people to buy into it without actually releasing it.