- Google released Gemma, a family of open AI models, in two sizes. The 4-bit quantized 2B model weighs just 1.5GB, so you can run it locally on a low-resource computer.

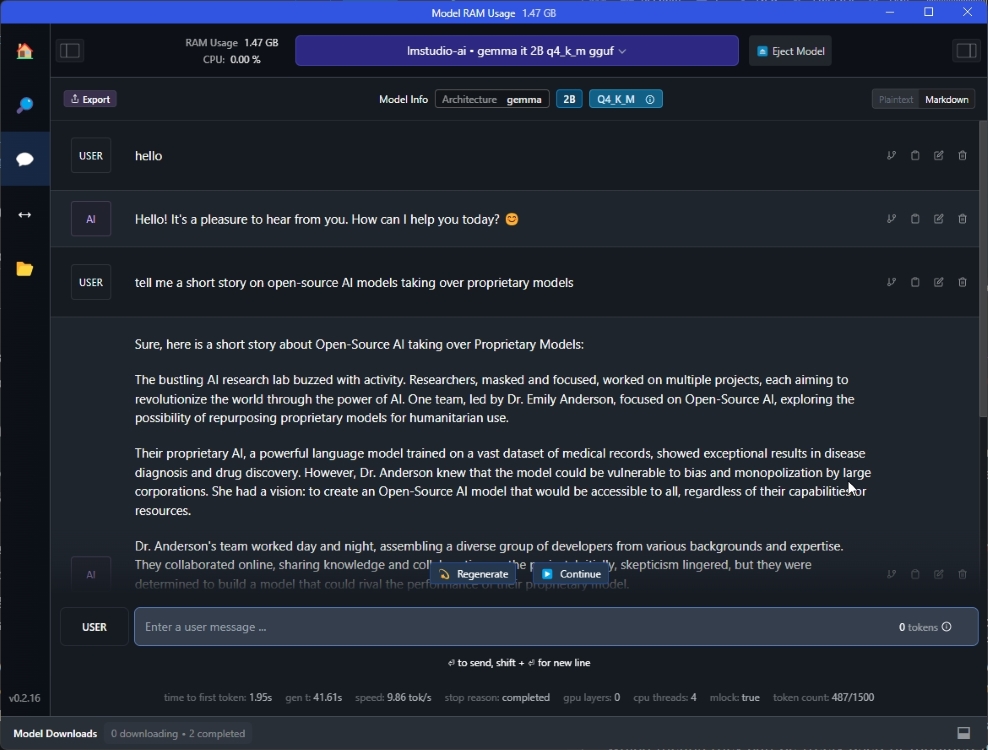

- The model consumes close to 1.4GB RAM and generates 10 tokens per second on a 10th-gen Intel i3-powered PC.

- You can use the model for creative tasks in English including text generation, summarization, and basic reasoning.

Google recently introduced Gemma, a family of open AI models, in two sizes: Gemma 2B and 7B. The models are decent at creative tasks in English such as text generation, summarization, and basic reasoning. Thanks to its small size, the 2B model can be downloaded once and then run locally on a low-resource computer without an internet connection. So here is our tutorial to download and run the Google Gemma AI model on Windows, macOS, and Linux.

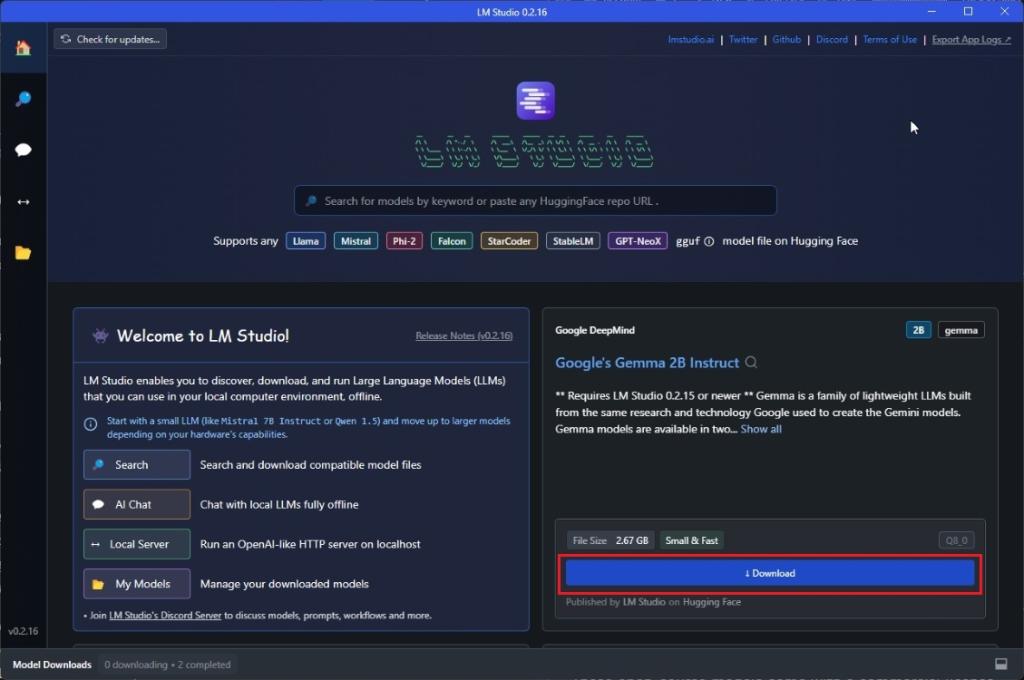

Download Google Gemma 2B Model

- Go ahead and download LM Studio (Free) on your computer. Next, launch the program.

- On the homepage, you will find the “Google’s Gemma 2B Instruct” model. You can also manually search for “Gemma”. Now, open it.

- Next, simply click on “Download”. It’s a 1.5GB file because the Gemma 2B model has been 4-bit quantized to shrink the model and reduce memory usage. If you have 8GB or more of RAM, you can instead download the 8-bit quantized model (2.67GB), which should give better output quality since less precision is lost to quantization.
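To see roughly where those file sizes come from, the sketch below multiplies the parameter count by the number of bits stored per weight. It is a back-of-the-envelope estimate, not anything LM Studio computes: it assumes Gemma 2B has about 2.5 billion parameters and uses illustrative bits-per-weight figures (4.5 and 8.5) for block-quantized formats that store one 16-bit scale for every 32 weights. Real files add metadata and may keep some tensors at higher precision, so actual downloads differ somewhat. The `pick_quantization` helper is a hypothetical name that simply encodes the 8GB RAM rule of thumb.

```python
# Back-of-the-envelope size estimate for a quantized model file:
#   size_in_bytes ~= parameters * bits_per_weight / 8
# Assumptions (not taken from LM Studio): ~2.5 billion parameters for Gemma 2B,
# and illustrative bits-per-weight values for block-quantized formats.

PARAMS_GEMMA_2B = 2.5e9  # approximate parameter count (assumption)

# Effective bits per weight, including one 16-bit scale per block of 32 weights.
BITS_PER_WEIGHT = {
    "4-bit": 4.5,  # 32 x 4-bit values + 16-bit scale = 144 bits / 32 weights
    "8-bit": 8.5,  # 32 x 8-bit values + 16-bit scale = 272 bits / 32 weights
}


def estimate_size_gb(params: float, bits_per_weight: float) -> float:
    """Return the approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def pick_quantization(ram_gb: float) -> str:
    """Hypothetical helper: 8-bit with 8GB+ of RAM, otherwise 4-bit."""
    return "8-bit" if ram_gb >= 8 else "4-bit"


if __name__ == "__main__":
    for name, bpw in BITS_PER_WEIGHT.items():
        print(f"{name}: ~{estimate_size_gb(PARAMS_GEMMA_2B, bpw):.2f} GB")
    print("With 8 GB of RAM, choose:", pick_quantization(8))
```

Running it prints about 1.41 GB for 4-bit and 2.66 GB for 8-bit, in the same ballpark as the 1.5GB and 2.67GB downloads; the 4-bit file LM Studio offers may use a format with slightly more bits per weight.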

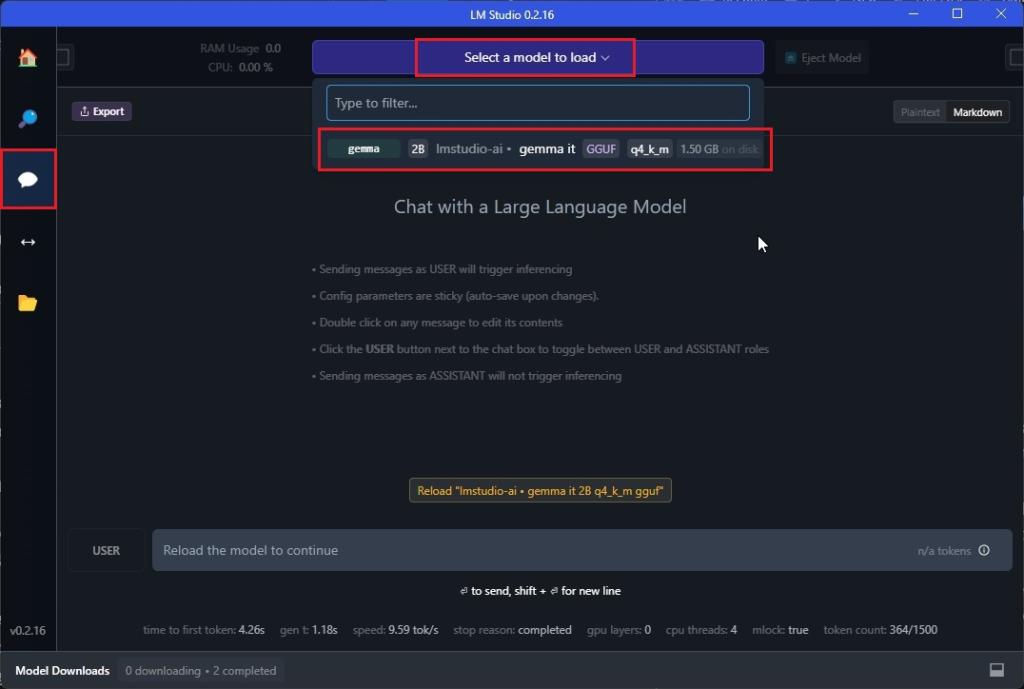

Run Google Gemma Offline Using LM Studio

- In LM Studio, open the “Chat” tab from the sidebar on the left.

- After that, click on “Select a model to load” and choose “gemma”. It will consume about 1.4GB RAM.

- Now, go ahead and ask your questions. In my experience, the tiny 2B Gemma model is good for creative tasks in English. However, it refuses to give a useful answer whenever you ask for its opinion on a subject (e.g. “Is C better than Rust?”).

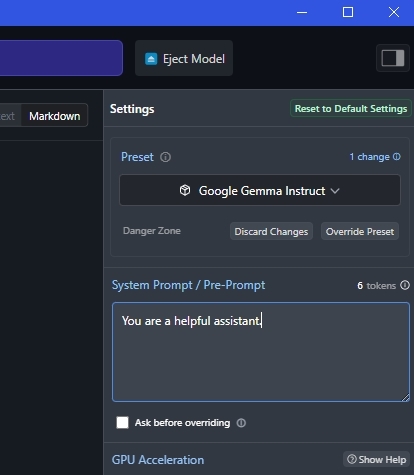

- You can also set a system prompt on the right to customize the behavior of the AI model.
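If you would rather script this, LM Studio can also run a local server that accepts OpenAI-style chat requests, where the system prompt is simply a message with the `system` role. The sketch below assumes that server is running on its default address (`http://localhost:1234`) with Gemma loaded; the model identifier `gemma-2b-it` and the helper names are hypothetical, so check the exact values LM Studio shows. It uses only Python's standard library.

```python
# Minimal sketch: send a system prompt plus a question to LM Studio's local,
# OpenAI-compatible server. Assumptions: the server is running on its default
# address, and "gemma-2b-it" is a hypothetical model identifier.
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed default; check LM Studio's server tab
MODEL = "gemma-2b-it"  # hypothetical; use the identifier LM Studio shows


def build_chat_request(system_prompt: str, question: str, model: str = MODEL) -> dict:
    """Build an OpenAI-style chat payload with a system and a user message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},  # customizes behavior
            {"role": "user", "content": question},
        ],
        "temperature": 0.7,
    }


def ask(system_prompt: str, question: str) -> str:
    """POST the payload to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(system_prompt, question)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generous timeout: a small model on a low-end CPU can take a while to answer.
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `ask("You are a concise assistant. Answer in two sentences.", "What is 4-bit quantization?")` should return the model's reply as a string. How the system message is fitted into Gemma's own prompt format is handled by LM Studio.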

So this is how you can download and run Google’s Gemma model on your Windows PC, Mac, or Linux computer. While I find Google’s open model quite bland, it might be useful for people who are looking for a simple AI model to run locally.

Anyway, that is all from us. If you are looking for a true local AI assistant, I would strongly recommend checking out Open Interpreter. It works like ChatGPT’s Code Interpreter and performs actual tasks on your computer. Finally, if you have any questions, let us know in the comment section below.