Apple has quietly removed details of its CSAM (Child Sexual Abuse Material) Detection feature from its website, hinting that it may have decided to abandon the feature completely after delaying it due to all the negative feedback it has received. However, that might not be the case.

Apple’s CSAM Detection Scrapped?

Apple’s Child Safety page no longer mentions the CSAM Detection feature. CSAM Detection, which has been a subject of controversy ever since it was announced in August, is designed to detect known child sexual abuse material in a user’s iCloud Photos by matching image hashes on the device, while aiming to maintain users’ privacy. However, the feature was widely criticized as a threat to people’s privacy and raised concerns about how easily it could be misused.

While Apple has removed references to CSAM Detection, it isn’t scrapping the feature, and its plans haven’t changed since September, as per a statement given to The Verge. That was when Apple announced that it would delay the rollout of the feature based on feedback from “customers, advocacy groups, researchers, and others.”

In addition, Apple hasn’t removed the supporting documents for CSAM Detection (covering how it works, along with FAQs), which further suggests that Apple plans to release the feature eventually. Still, we can expect it to take some time before the feature is made available to users.

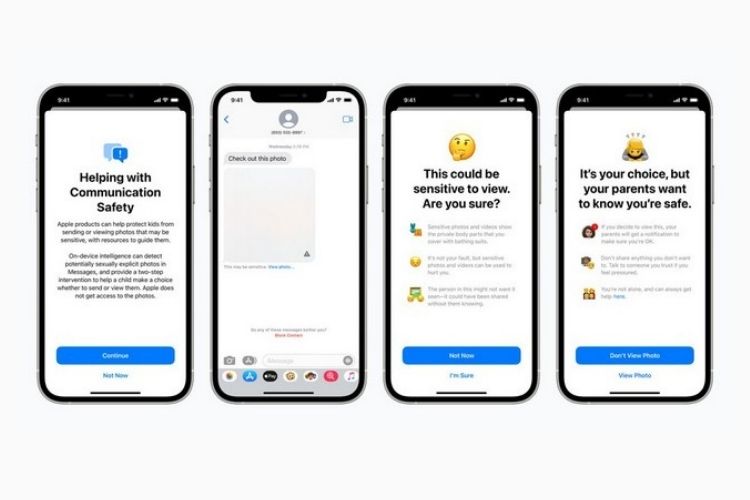

To recall, the feature was introduced alongside Communication Safety in Messages and expanded CSAM guidance in Siri, Search, and Spotlight. While the former aims to keep children from sending or receiving content containing nudity, the latter provides more information on the topic when users search for related terms. Both of these features are still listed on the website and have been rolled out as part of the latest iOS 15.2 update.

Now, it remains to be seen how and when Apple plans to make the CSAM Detection feature official. Since the feature hasn’t received a warm welcome, Apple will have to tread carefully when it is ready for an official release. We will keep you posted on this, so stay tuned for updates.