Apple has long positioned user privacy as a priority across its products and services. But now, to protect minors from “predators who use communication tools to recruit and exploit them”, the Cupertino giant has announced that it will scan photos stored on iPhones and in iCloud for child abuse imagery.

According to a Financial Times report (paywalled), the system is called neuralMatch. It will alert a team of human reviewers when it believes it has detected Child Sexual Abuse Material (CSAM), and those reviewers will contact law enforcement if the material can be verified. The system was reportedly trained using 200,000 images from the National Center for Missing and Exploited Children. It will scan and hash users' photos and compare the hashes against a database of known child sexual abuse images.
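To make the “hash and compare” step concrete, here is a minimal sketch that uses a simple perceptual hash (an 8x8 “average hash”) and a Hamming-distance check against a list of known hashes. This is an illustrative stand-in, not Apple's neuralMatch/NeuralHash: the function names, the 8x8 grayscale input format, and the `max_distance` tolerance are all hypothetical assumptions.

```python
from typing import Iterable, Sequence


def average_hash(pixels: Sequence[Sequence[int]]) -> int:
    """Hypothetical stand-in hash: 64-bit average hash of an 8x8 grayscale image (0-255)."""
    flat = [p for row in pixels for p in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grayscale image")
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        # Each bit records whether a pixel is brighter than the image's mean.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_known(image_hash: int, known_hashes: Iterable[int], max_distance: int = 5) -> bool:
    """True if the hash is within max_distance bits of any known hash (tolerance is an assumption)."""
    return any(hamming(image_hash, k) <= max_distance for k in known_hashes)


# Usage: a brightened copy of an image still matches, while an unrelated image does not.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brightened = [[min(p + 10, 255) for p in row] for row in original]
inverted = [[252 - p for p in row] for row in original]
database = [average_hash(original)]
print(matches_known(average_hash(brightened), database))  # True
print(matches_known(average_hash(inverted), database))    # False
```

A perceptual hash is used here rather than a cryptographic one because small edits such as brightening should not change the fingerprint, whereas a cryptographic hash would change completely.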

“According to people briefed on the plans, every photo uploaded to iCloud in the US will be given a ‘safety voucher,’ saying whether it is suspect or not. Once a certain number of photos are marked as suspect, Apple will enable all the suspect photos to be decrypted and, if apparently illegal, passed on to the relevant authorities,” said the Financial Times report.
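The threshold rule in the quote can be sketched as a simple per-account counter. Everything here is a hypothetical illustration: the `SafetyVoucher` and `Account` names and the `threshold` value are assumptions, since the report does not give the real number. The sketch also counts suspect flags in plain view, ignoring the cryptography that would keep individual vouchers unreadable until the threshold is crossed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SafetyVoucher:
    photo_id: str
    suspect: bool  # whether the uploaded photo matched a known image


@dataclass
class Account:
    threshold: int  # hypothetical value; the real threshold is not stated in the report
    vouchers: List[SafetyVoucher] = field(default_factory=list)

    def upload(self, photo_id: str, suspect: bool) -> None:
        # Every uploaded photo receives a voucher recording whether it is suspect.
        self.vouchers.append(SafetyVoucher(photo_id, suspect))

    def suspect_photos(self) -> List[str]:
        return [v.photo_id for v in self.vouchers if v.suspect]

    def ready_for_review(self) -> bool:
        # Suspect photos become reviewable only once their count reaches the threshold.
        return len(self.suspect_photos()) >= self.threshold


# Usage: two suspect photos stay below a threshold of 3; a third crosses it.
account = Account(threshold=3)
for i in range(5):
    account.upload(f"clean-{i}", suspect=False)
account.upload("s-1", suspect=True)
account.upload("s-2", suspect=True)
print(account.ready_for_review())  # False
account.upload("s-3", suspect=True)
print(account.ready_for_review())  # True
print(account.suspect_photos())    # ['s-1', 's-2', 's-3']
```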

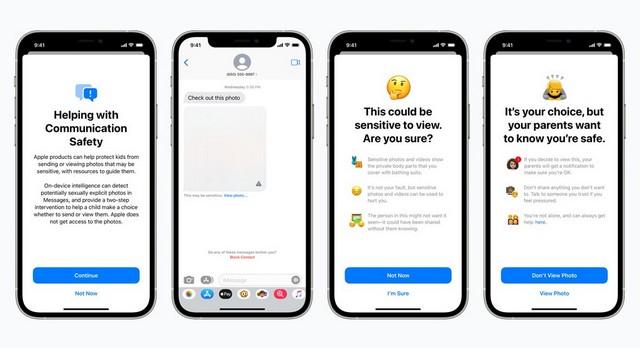

Following the report, Apple published an official post on its Newsroom explaining how the new tools work. The tools were developed in collaboration with child safety experts, and they will use on-device machine learning in the Messages app to warn children and their parents about sensitive and sexually explicit content.

Furthermore, the Cupertino giant said it will integrate “new technology” in iOS 15 and iPadOS 15 to detect known CSAM images stored in iCloud Photos. If the system finds a match and a manual review confirms it, Apple will disable the user's account and send a report to the National Center for Missing and Exploited Children (NCMEC). A user who believes their account was flagged by mistake can file an appeal to have it reinstated.

Apple is also expanding guidance in Siri and Search to help parents and children stay safe online and get help in unsafe situations. Siri and Search will also be updated to intervene when users search for CSAM-related topics.

As for availability, Apple says the new features will first roll out in the US with the upcoming iOS 15, iPadOS 15, watchOS 8, and macOS Monterey updates. The company has not said whether it will expand them to other regions.