There are several AI players in the market right now, including ChatGPT, Google Bard, Bing AI Chat, and many more. However, all of them require an internet connection to interact with the AI. What if you want to install a similar Large Language Model (LLM) on your computer and use it locally, as an AI chatbot that works privately and without internet connectivity? Well, with new GUI desktop apps like LM Studio and GPT4All, you can run a ChatGPT-like LLM offline on your computer with little effort. So on that note, let’s go ahead and learn how to use an LLM locally without an internet connection.

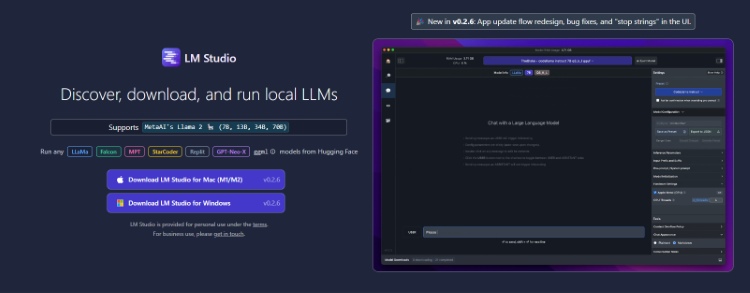

Run a Local LLM Using LM Studio on PC and Mac

1. First of all, go ahead and download LM Studio for your PC or Mac from its official website.

2. Next, run the setup file and LM Studio will open up.

3. Then, go to the “search” tab and find the LLM you want to install. You can find the best open-source AI models in our list. You can also explore more models on Hugging Face and the AlpacaEval leaderboard.

4. I am downloading the Vicuna model with 13B parameters. Depending on your computer’s resources, you can download even more capable models. You can also download coding-specific models like StarCoder and WizardCoder.

5. Once the model has finished downloading, move to the “Chat” tab in the left menu.

6. Here, click on “Select a model to load” and choose the model you have downloaded.

7. You can now start chatting with the AI model right away using your computer’s resources locally. All your chats are private and you can use LM Studio in offline mode as well.

8. Once you are done, you can click on “Eject Model”, which unloads the model from RAM.

9. You can also move to the “Models” tab and manage all your downloaded models. So this is how you can locally run a ChatGPT-like LLM on your computer.
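To get a rough idea of which model size fits your computer before downloading, you can estimate how much memory the model weights alone will need. The sketch below is only back-of-the-envelope arithmetic: the function name `estimate_weights_gb` is hypothetical, the bit widths (16, 8, and 4 bits per weight) are assumed example precisions rather than what any particular download uses, and real memory use will be higher because of the chat context and runtime overhead.

```python
def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in gigabytes (10^9 bytes).

    This ignores the context cache and runtime overhead, so treat the
    result as a lower bound, not as the total memory the app will use.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Assumed example precisions; check the model's download page for the real one.
    for bits in (16, 8, 4):
        size = estimate_weights_gb(13, bits)
        print(f"13B parameters at {bits} bits per weight: about {size:.1f} GB")
```

Under these assumptions, a 13B model needs roughly 26 GB of memory for its weights at 16 bits per weight, but about 6.5 GB at 4 bits per weight, which is why smaller or more compressed variants are the practical choice on modest hardware.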

Run a Local LLM on PC, Mac, and Linux Using GPT4All

GPT4All is another desktop GUI app that lets you run a ChatGPT-like LLM locally and privately on your computer. The best part about GPT4All is that it does not even require a dedicated GPU, and you can also point it at your own documents so the model can draw on them in its answers. Note that this indexes the documents for lookup on your machine rather than retraining the model. No API or coding is required. That’s awesome, right? So let’s go ahead and find out how to use GPT4All locally.

1. Go ahead and download GPT4All from its official website. It supports Windows, macOS, and Ubuntu.

2. Next, run the installer and it will download some additional packages during installation.

3. After that, download one of the models based on your computer’s resources. You must have at least 8 GB of RAM to use any of the AI models.

4. Now, you can simply start chatting. Because my computer was low on memory, I ran into performance issues and GPT4All stopped working midway. However, if you have a computer with beefy specs, it should work much better.
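Since low memory is the most likely cause of trouble here, it can help to check your computer’s RAM against the 8 GB minimum before downloading a model. The snippet below is a minimal sketch using only the Python standard library. The helper names `total_ram_bytes` and `meets_minimum` are hypothetical, and the RAM lookup relies on `os.sysconf`, which is not available on Windows, so it returns `None` there.

```python
import os
from typing import Optional

MIN_RAM_GB = 8  # minimum RAM stated in the guide above


def total_ram_bytes() -> Optional[int]:
    """Best-effort total physical RAM in bytes.

    Returns None where os.sysconf or these setting names are
    unavailable (for example, on Windows).
    """
    try:
        return os.sysconf("SC_PHYS_PAGES") * os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None


def meets_minimum(ram_bytes: int, minimum_gb: float = MIN_RAM_GB) -> bool:
    """True if ram_bytes is at least minimum_gb, counting 1 GB as 1024**3 bytes."""
    return ram_bytes >= minimum_gb * 1024**3


if __name__ == "__main__":
    ram = total_ram_bytes()
    if ram is None:
        print("Could not detect RAM here; check your system settings instead.")
    else:
        verdict = "meets" if meets_minimum(ram) else "is below"
        print(f"Detected {ram / 1024**3:.1f} GB of RAM, which {verdict} the 8 GB minimum.")
```

Keep in mind that a computer sold with 8 GB may report slightly less than that to the operating system, so a result just under the threshold does not necessarily mean the app will not run.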

Editor’s Note: Earlier, this guide included a step-by-step process to set up LLaMA and Alpaca on PCs offline, but that process was rather tedious. The tools we have suggested above make it much simpler.