Google Maps is arguably the most popular maps and navigation app, not just on Android but on iOS as well. It is the go-to app for getting directions and looking up nearby gas stations, restaurants, or hospitals. However, a Twitter user has stumbled upon a derogatory search query that calls Google’s search algorithm into question, especially because the results leave young children vulnerable.

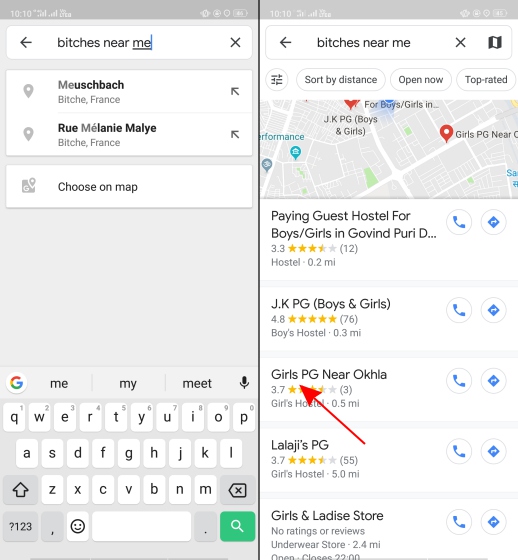

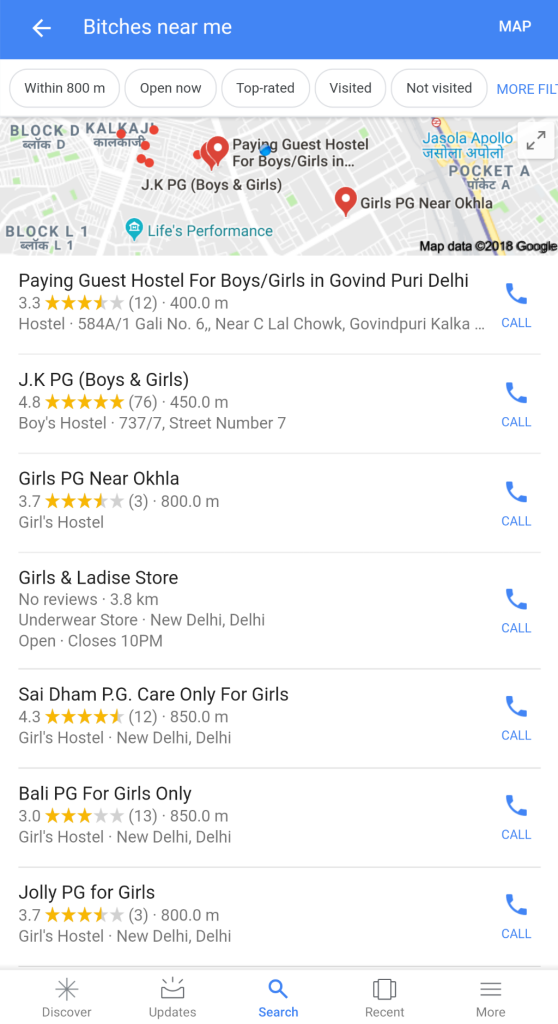

As reported by Twitter user @AHappyChipmunk, if you open Google Maps and search for “bitches near me,” the search result is horrifying. The platform picks up this derogatory slang (which we condemn) and shows girls’ schools, colleges, hostels, and paying guest accommodations, which gives you pause. In a country like India, where the derogatory N-word is used by ignorant sections of the population to show off, it is not hard to imagine people searching for terms like “bitches.”

Google, can you explain why this fuckin exists pic.twitter.com/w0eslFoVSz

— flower emoji avalakki 🥀🌿 (@Woolfingitdown) November 25, 2018

The tweet comes as an awful surprise, but seeing the logo of a troll Twitter account (@trollenku) made us skeptical, and we initially thought it was a joke. Only on running the same search query ourselves did we realize it is not just schools: Google’s algorithm returns every place near you that is related to girls, including girls-only rentals and other hostels.

The same is true for Google searches for that phrase:

One needs to understand that no single person at Google is responsible for this. Google’s algorithm seems to be correlating “bitches” with girls, which once again brings the bias of AI into question. After all, a machine will only learn what you teach it.

The search result shows the dark side of AI and how pop culture can condition people to associate certain terms with a particular gender. It also highlights how the people who create these search algorithms think. Google should be particularly wary after the mass employee walkout over sexual harassment at its offices earlier this month. The alleged “bro culture” at Google has also earned it a lawsuit or two. And this latest AI gaffe comes at a time when visibility is amplified thanks to the #MeToo movement in India.

Many people have tweeted at Google asking for more information about this algorithm goof-up. We will update this story once we get a response from the company.

Can I show the other side of AI?

Hold your home button and, once Google Assistant is active, ask “Sunny Leone that type videos.”

Rather than displaying what you asked, it searches for “Sunny Leone xxx videos.”

(It happened to me.)