Graphics in video games have come a long way, but gamers’ expectations have grown just as quickly. These days, gamers demand a level of visual fidelity that was unimaginable 10 years ago outside of dedicated 3D render farms. And yet even the best-looking games available today look as good as they do by using a series of rendering shortcuts that produce extremely convincing approximations of how we perceive the world around us. No matter how good or precise those approximations get, something is still lacking, and that something is true realism.

Well, the gaming industry is all set to get its biggest visual boost yet in the form of Ray Tracing.

What is Ray Tracing?

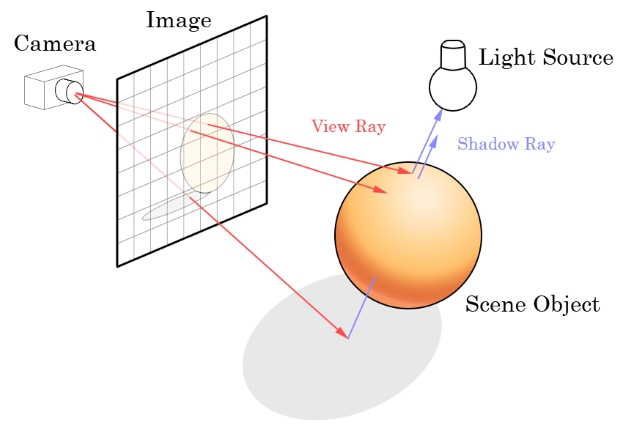

Ray Tracing is a method of rendering 3D scenes that mimics how we as humans perceive the world around us, or more specifically, how we perceive the light in it. The technique has been around as long as 3D rendering itself. It works by painstakingly tracing rays of light through a scene and calculating how each ray bounces off, passes through, and is occluded by the objects it meets. The biggest advantage of Ray Tracing is that, given enough time and computational power, the resulting scenes can be indistinguishable from reality.
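To make the idea concrete, here is a minimal sketch of a single traced ray in Python. It is only an illustration, not how any game engine or GPU implements the technique: the scene is assumed to contain nothing but spheres and one point light, and the function names (`hit_sphere`, `shade`) are made up for this example. One ray is fired from the camera, the nearest sphere it hits is found, and a second "shadow ray" checks whether anything occludes the light at that point.

```python
import math

# Tiny vector helpers on 3-tuples (hypothetical names, for illustration only).
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def hit_sphere(origin, direction, center, radius):
    """Distance along a normalized ray to the nearest hit, or None on a miss."""
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c          # discriminant of the ray/sphere quadratic
    if disc < 0:
        return None                 # the ray never touches the sphere
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-4:                # skip hits behind or exactly at the origin
            return t
    return None

def shade(origin, direction, spheres, light_pos):
    """Brightness in [0, 1] seen along one ray; 0.0 on a miss or in shadow."""
    nearest = None
    for center, radius in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center)
    if nearest is None:
        return 0.0
    t, center = nearest
    point = add(origin, scale(direction, t))
    to_center = sub(point, center)
    normal = scale(to_center, 1.0 / math.sqrt(dot(to_center, to_center)))
    to_light = sub(light_pos, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    light_dir = scale(to_light, 1.0 / light_dist)
    # Shadow ray: is any object between the hit point and the light?
    for c, r in spheres:
        s = hit_sphere(point, light_dir, c, r)
        if s is not None and s < light_dist:
            return 0.0
    # Simple diffuse term: brighter where the surface faces the light.
    return max(0.0, dot(normal, light_dir))

# One sphere in front of the camera, with the light behind the camera.
scene = [((0.0, 0.0, -3.0), 1.0)]
brightness = shade((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene, (0.0, 0.0, 5.0))
```

A full renderer repeats this for every pixel and follows further bounces for reflections and refraction, which is where the heavy computational cost comes from.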

How Will Ray Tracing Improve Graphics in Games?

The two leaders in graphics hardware, AMD and NVIDIA, both announced their respective Ray Tracing technologies and advancements at GDC 2018. During the show’s “State of Unreal” opening session, Epic Games, in collaboration with NVIDIA and ILMxLAB, gave the first public demonstration of real-time ray tracing in Unreal Engine. AMD, for its part, stated that it is collaborating with Microsoft to help define, refine and support the future of DirectX 12, including Ray Tracing.

During NVIDIA’s presentation, the three companies showed an experimental cinematic demo built with Unreal Engine 4 and featuring Star Wars characters from The Force Awakens and The Last Jedi. The demonstration is powered by NVIDIA’s RTX technology for Volta GPUs, available via Microsoft’s DirectX Raytracing (DXR) API. Furthermore, an iPad running ARKit was used as a virtual camera to draw focus to fine details in up-close views.

“Real-time ray tracing has been a dream of the graphics and visualization industry for years,” said Tony Tamasi, senior vice president of content and technology at NVIDIA. “With the use of NVIDIA RTX technology, Volta GPUs and the new DXR API from Microsoft, the teams have been able to develop something truly amazing, that shows that the era of real-time ray tracing is finally here.”

While AMD did not showcase a proof of concept of its progress, it has stated that it is also working with Microsoft. Both companies are expected to make Ray Tracing support through the DXR API available to developers sometime later this year.

With this level of graphical fidelity now at developers’ disposal, we can expect upcoming games to feature more realistic graphics, with a more accurate representation of lighting conditions and sharper visuals. While this does put a heavier load on GPUs, Ray Tracing certainly promises to bridge the gap between reality and virtual reality.