Remember when Prisma was the ultimate “AI” image editing app out there? Yeah, we’ve certainly come a long way since then. With the rise of prompt-based AI image generators such as DALL-E and Midjourney, creating art and deepfakes is now within reach of pretty much everyone.

But there are limitations, aren’t there? Once the novelty of asking Midjourney to imagine varied prompts and seeing what it throws out wears off, it all gets rather boring. Or at least it did for me.

Narcissistic Energy?

Look, I am an introvert, which means I don’t really like going out much. But you know what I do like? Having pictures of myself in places I would probably never go to; heck, even places I can’t go to.

Naturally, I wanted to ask AI tools to create images of me in different situations and places. However, I also didn’t want to upload images of myself to random websites in the hope that the results might be good, and that’s when I read about Dreambooth.

Let the Games Begin…

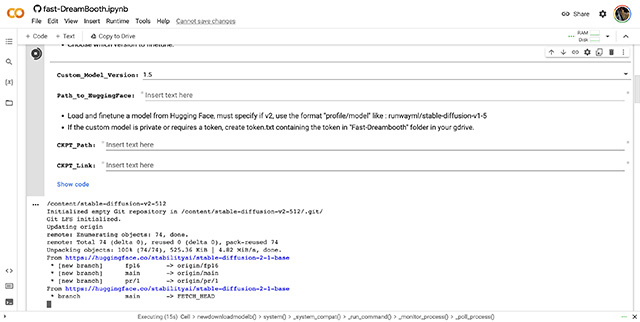

Turns out, really smart people have brought things like Stable Diffusion to the masses. What’s more, others have collaborated with them and made it possible for literally anyone with some patience to create their own Stable Diffusion models and run them, completely online.

So even though I have an M1 MacBook Air, which is by no means intended to be used as a training machine for a deep-learning image generation model, I can run a Google Colab notebook and do all of that on Google’s servers, for free!

All I really needed, then, were a couple of pictures of myself, and that’s it.
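The pictures do need a little preparation first: the author cropped them to 512×512 pixels, the resolution Stable Diffusion 1.x models were trained at. A minimal sketch of one way to do that, assuming you want the largest centered square from each photo, is below. The helper name `center_square_box` is hypothetical and is not taken from the fast-dreambooth notebook.

```python
def center_square_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest square centered in a
    width x height image.

    Hypothetical helper for illustration; the box layout matches what
    Pillow's Image.crop() expects.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    side = min(width, height)   # the square can be no larger than the short edge
    left = (width - side) // 2  # trim equally from the left and right
    top = (height - side) // 2  # trim equally from the top and bottom
    return (left, top, left + side, top + side)


if __name__ == "__main__":
    # A typical 4:3 phone photo in landscape orientation.
    print(center_square_box(4032, 3024))  # (504, 0, 3528, 3024)
```

With Pillow installed, the box could then be applied as `img.crop(box).resize((512, 512))` before uploading the pictures to the notebook.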

Training my AI Image Generator

Training your own image generator is not difficult at all. There are a number of guides available online if you need help, and it’s all basically very straightforward. You just need to open the Colab notebook, upload your pictures, and start training the model. All of which happens quite quickly.

Okay, to be fair, the text encoder training happens quite quickly, within 5 minutes. Training the UNet with the default parameters, on the other hand, takes a fairly long time: close to 15-20 minutes. Still, considering that we are actually training an AI model to recognise and draw my face, 20 minutes doesn’t sound like much.

There are a bunch of ways to customise how much you train your model, and from reading about a lot of people’s experiences online, I gathered that there’s no real “one-size-fits-all” strategy here. For basic use-cases, though, the default values seemed to work just fine for most people, so I stuck with those as well. Partly because I couldn’t really understand what most of the settings meant, and partly because I couldn’t be bothered to train multiple models with different parameters to see which produced the best outputs.

I was, after all, simply looking for a fun AI image generator that could make some half-decent images of me.

Exceeds Expectations

I am not an AI expert by any stretch of the imagination. Still, I figured that training a Stable Diffusion model on a Google Colab notebook with 8 JPEGs of myself cropped to 512×512 pixels would not really result in anything extraordinary.

How very wrong I was.

In my first attempt at using the model I trained, I started with a simple prompt that said “akshay”. The following is the image that was generated.

Not great, is it? But it’s also not that bad, right?

But then I started playing with some of the parameters available in the UI. There are multiple sampling methods, sampling steps, CFG scale, scripts, and a lot more. Time to go a little crazy experimenting with different prompts and setups for the model.
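Of those settings, CFG scale is the easiest to pin down. It stands for classifier-free guidance: in the standard formulation, at each sampling step the model predicts the noise once with your prompt and once without it, and the final prediction is pushed away from the prompt-less one by the CFG scale factor. The sketch below shows only that combination step, assuming plain lists of numbers in place of the real noise tensors; the function name `apply_cfg` is hypothetical.

```python
from typing import Sequence


def apply_cfg(uncond: Sequence[float], cond: Sequence[float], scale: float) -> list[float]:
    """Classifier-free guidance, element-wise: uncond + scale * (cond - uncond).

    `uncond` stands in for the noise prediction with an empty prompt and
    `cond` for the prediction with the user's prompt. Toy lists replace
    the real latent tensors (an assumption for illustration).
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0, -0.5]
    cond = [1.0, 1.0, 0.5]
    print(apply_cfg(uncond, cond, 1.0))  # [1.0, 1.0, 0.5]: scale 1 returns the prompted prediction
    print(apply_cfg(uncond, cond, 7.0))  # [7.0, 1.0, 6.5]: a commonly used value, amplifying the prompt's pull
```

Where the two predictions agree (the middle element), the scale has no effect; where they differ, a higher scale tends to make the image follow the prompt more literally, at the cost of variety.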

Clearly, these images aren’t perfect, and anyone who has seen me can probably tell that these are not “my” images. However, they are close enough, and I didn’t even train the model with any particular care.

If I were to follow the countless guides on Reddit and elsewhere on the internet that talk about the ways you can improve training and get better results from Dreambooth and Stable Diffusion, these images might have turned out even more realistic (and arguably, scarier).

This AI Image Generator is Scarily Good

See, I’m all for improvements in AI technology. As a tech journalist, I have followed the ever-changing and improving field of consumer-facing AI over the last couple of years, and for the most part, I’m deeply impressed and optimistic.

However, seeing something like Dreambooth in action makes me worry about the unethical ways in which AI and ML-based tools could be used, now that they are readily available to basically anyone with a computer and an internet connection.

There’s no question that there are a lot of bad actors in the world. While innocent use-cases of such easily accessible technology definitely exist, if there’s one thing I have learnt in my years of reporting on tech, it’s that putting a product into the hands of millions of people will undoubtedly result in a lot of undesired outcomes. At best, something unexpected; at worst, something outright disgusting.

The ability to create deepfake images of pretty much anyone, as long as you can source 5 to 10 pictures of their face, is incredibly dangerous in the wrong hands. Think misinformation, misrepresentation, and even revenge porn: deepfakes can be used in all of these problematic ways.

Safeguards? What Safeguards?

It’s not just Dreambooth either. In themselves, and used well, Dreambooth and Stable Diffusion are incredible tools that let us experience what AI can do. But from what I have experienced so far, there are no real safeguards on this technology. Sure, it won’t let you generate outright nudity in images, at least not by default. However, there are plenty of extensions that let you bypass that filter and create pretty much anything you can imagine, based on anyone’s identity.

Even without such extensions, you can easily get tools like this to create a wide range of potentially disturbing and disreputable imagery of people.

What’s more, with a decently powerful PC, one can train their own AI models without any safeguards whatsoever, on whatever training data they want, which means the resulting model can create images that are damning and harmful beyond imagination.

Deepfakes are nothing new. In fact, there is a vast trove of deepfake videos and media online. However, until recently, creating deepfakes was limited to a relatively small (although still large) number of people who sat at the intersection of “people with capable hardware” and “people with the technical know-how”.

Now, with access to free (limited-use) GPU compute units on Google Colab and the availability of tools like fast-dreambooth that let you train and use AI models on Google’s servers, that number of people will go up exponentially. It probably already has — that’s scary to me, and it should be to you as well.

What Can We Do?

That’s the question we should be asking ourselves at this point. Tools like DALL-E, Midjourney, and yes, Dreambooth and Stable Diffusion, are certainly impressive when used with common human decency. AI is improving by leaps and bounds — you can probably tell that by looking at the explosion of AI-related news in the past couple of months.

This is, then, a crucial point where we need to figure out ways to ensure AI is used ethically. I’m not sure I have the answer to how we go about doing that, but I do know that, having used the fast-dreambooth AI image generator and seen what it can do, I am scared of how good it is, without even trying too hard.

You fine-tuned a model. Stop the fear-mongering.

The Colab notebooks aren’t bad. I used a Stable Diffusion pipeline to make a video for a song I wrote.

I found it pretty challenging to work with, and I feel like I was really just finding images that were already in the dataset. Like, if the prompt was a celebrity to begin with, wow, it really drew that celebrity.

If I tried to specify something more intentional yet abstract, like red swirling skies over mountains, it did substantially worse and took many guesses at a suitable prompt. It did pretty well with plain abstract things, but those were kind of boring.

Fun to play with, though! Each individual image may not be all that original, but making a video out of them, now that is creative.

I have also tried Topaz, which is basically AI photo processing. It did pretty well, about as well as I could do with sharpening and whatnot, but in much less time. I hope they get that fully automated: I like looking at my photographs, but photo processing takes so much time that it really ruins the experience for me.
