Apple has announced a set of new accessibility features for iOS and iPadOS. With this update, the Cupertino giant is doubling down on its commitment to making “technology accessible for everyone.” The new enhancements, including Assistive Access for cognitive accessibility, Live Speech, and more, take advantage of the devices’ onboard hardware and machine learning capabilities. Read on to learn more.

New iPhone and iPad Accessibility Features

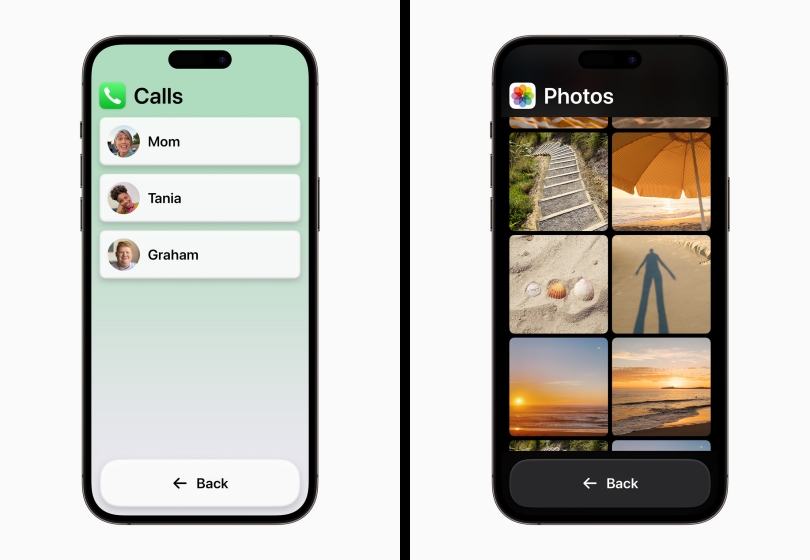

One of the newly announced features is Assistive Access, aimed at users with cognitive disabilities. People with conditions such as autism or Alzheimer’s often find it difficult to navigate the many elements on their smartphones. This feature declutters apps and OS elements so that only the core functionality remains.

Assistive Access will work with Phone, FaceTime, Camera, and other everyday apps. With it turned on, users see enlarged text, high-contrast buttons, and only the functions that matter. Apple says the design is built on feedback collected from people with cognitive disabilities and their trusted supporters.

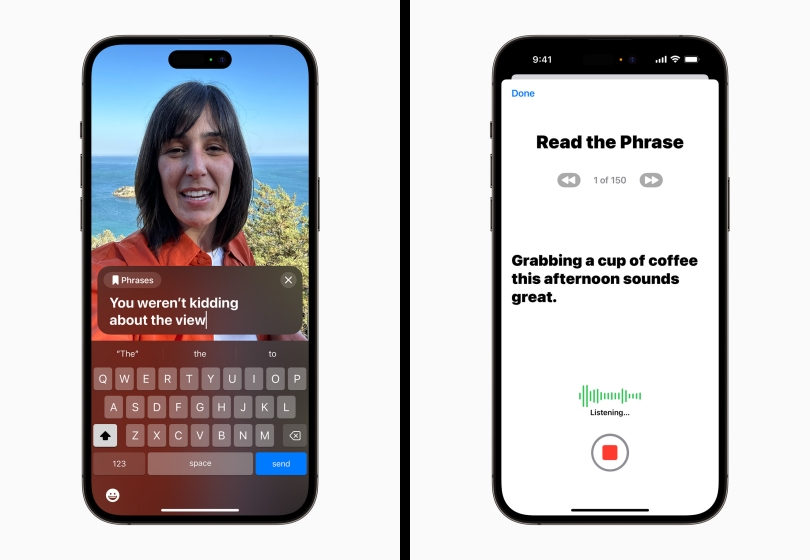

Two other new features, Live Speech and Personal Voice, aim to make technology accessible to people with speech impairments, including those caused by conditions like ALS. Live Speech lets users type out what they want to say and have their iPhone or iPad speak it aloud. It is useful both for in-person conversations and for phone and FaceTime calls.

Personal Voice goes a step further than Live Speech. It allows users in the early stages of losing their ability to speak to create a synthesized version of their own voice. Whenever they type a response, it is then played in a voice that sounds like theirs rather than a generic robotic one. The feature uses on-device machine learning and a set of pre-defined prompts, which the user reads aloud, to create the voice in a private and secure manner.

For users who have low vision or are losing their sight, Apple has introduced Point and Speak, part of Detection Mode in Magnifier. This feature reads aloud the text on physical objects the user points at. For example, as you move your finger across a physical keypad, the label on each button is spoken aloud. Apple achieves this by combining the on-device LiDAR sensor, the Camera app, and machine learning. Using Magnifier, users can get voice prompts for any text that the camera picks up.

Other announcements include improved support for Made for iPhone hearing devices for users with hearing impairments, Voice Control Guides that teach users tips and tricks for Voice Control, and more. Apple will start rolling out these accessibility features to iPhone and iPad users by the end of this year. So, what do you make of these new accessibility enhancements? Do you think they will benefit you in any way? Share your thoughts in the comments below.