Advances in personal voice assistants have enabled them to handle high-level tasks such as web searches and controlling IoT devices. Yet they are still far from perfect, because they cannot understand contextual commands like “What is this?” or “Turn that on”. A voice assistant cannot work out what the ambiguous “this” or “that” refers to unless you give it context. That could change soon. Researchers at the Human-Computer Interaction Institute at Carnegie Mellon University have developed new software that could significantly expand what voice assistants can do.

WorldGaze is a new technology developed by Sven Mayer, Gierad Laput and Chris Harrison, a team of researchers at Carnegie Mellon University. It gives voice assistants context based on what the user is looking at, which makes contextual commands much easier for them to understand.

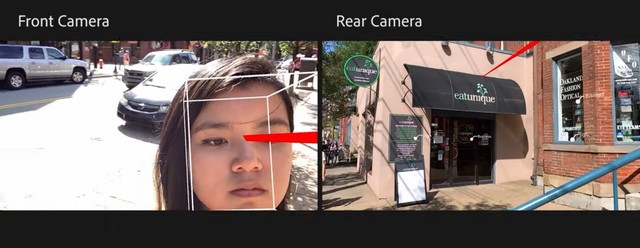

WorldGaze is software that uses a mobile device's front and rear cameras simultaneously to provide context to the voice assistant. The rear camera captures what the user is seeing, while the front camera tracks the user’s head in 3D. Together, the two cameras give the software a 200-degree field of view.

Works on the Streets

Imagine you are walking down a street and see a restaurant. You ask your voice assistant, “When does this open?” Don't expect an answer: the assistant has no way of knowing what “this” refers to. Now imagine walking down the same street with a WorldGaze-enabled smartphone in hand and asking the same question while looking directly at the restaurant. This time the software supplies the missing context, so the assistant can work out what “this” means. Because the front camera tracks your “head gaze” in 3D, the software knows what you are looking at at any given moment.
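The article does not describe how WorldGaze turns a head-gaze estimate into a specific target, so the following is only a minimal sketch of the general idea, under stated assumptions. It assumes the head pose from the front camera has already been converted into a gaze direction (yaw and pitch, in degrees) in the rear camera's frame. It also assumes a hypothetical object detector reports each object's angular position. The names `DetectedObject`, `gaze_target` and the 10-degree `max_angle_deg` threshold are illustrative inventions, not part of WorldGaze.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class DetectedObject:
    """An object seen by the rear camera (hypothetical detector output)."""
    label: str
    yaw_deg: float    # horizontal angle from the rear camera's optical axis
    pitch_deg: float  # vertical angle from the rear camera's optical axis


def direction(yaw_deg: float, pitch_deg: float) -> Tuple[float, float, float]:
    """Convert yaw/pitch angles to a unit vector (x right, y up, z forward)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )


def angle_between_deg(a: Tuple[float, ...], b: Tuple[float, ...]) -> float:
    """Angle in degrees between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))


def gaze_target(
    head_yaw_deg: float,
    head_pitch_deg: float,
    objects: Sequence[DetectedObject],
    max_angle_deg: float = 10.0,  # assumed tolerance for imprecise head gaze
) -> Optional[DetectedObject]:
    """Return the object closest to the head-gaze ray, or None if none is close enough."""
    gaze = direction(head_yaw_deg, head_pitch_deg)
    best, best_angle = None, max_angle_deg
    for obj in objects:
        angle = angle_between_deg(gaze, direction(obj.yaw_deg, obj.pitch_deg))
        if angle <= best_angle:
            best, best_angle = obj, angle
    return best


if __name__ == "__main__":
    scene = [
        DetectedObject("restaurant", yaw_deg=12.0, pitch_deg=2.0),
        DetectedObject("bus stop", yaw_deg=-25.0, pitch_deg=0.0),
    ]
    target = gaze_target(10.0, 0.0, scene)
    print(target.label if target else "no target")  # restaurant
```

Selecting by angular distance, rather than by which bounding box the gaze ray passes through, tolerates the imprecision of head gaze. When nothing falls inside the threshold, the function returns `None`, so the assistant can ask a follow-up question instead of guessing.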

Works in Shops and at Home

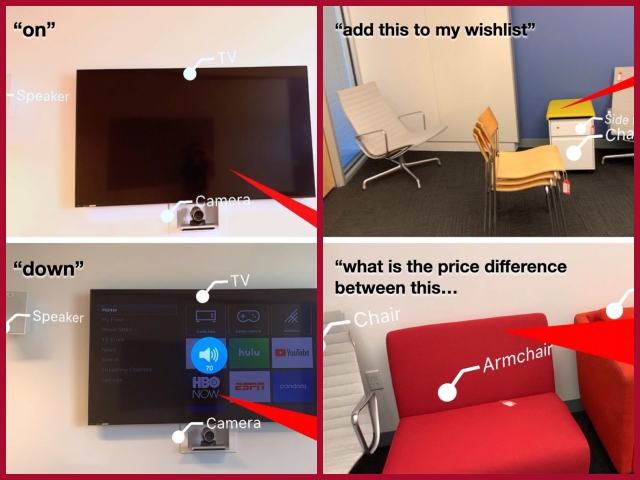

The same applies in retail stores, since WorldGaze also comes with AR integration. In a shop, you can look at an item and the software will identify it by placing AR labels beside the products. When you are looking at a chair or a table, you can say “Add this to my shopping list”, and the assistant on your phone will add that item to the list without any follow-up questions. Voice assistants would likewise be able to understand contextual commands for smart home devices. Point your smartphone at a smart TV, say “Turn that on” to Google Assistant, Siri or Alexa, and the assistant will turn on the TV.
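The article says only that WorldGaze provides context to the assistant, not how that context is passed along. One simple way to picture it is to rewrite the spoken command so the ambiguous word is replaced by the object being looked at. The sketch below is a hypothetical illustration of that idea. The function `resolve_deixis` and its plain word-substitution approach are assumptions, not WorldGaze's or any assistant's actual API.

```python
import re
from typing import Optional

# Matches "this" or "that" as whole words, in any letter case.
DEICTIC = re.compile(r"\b(this|that)\b", re.IGNORECASE)


def resolve_deixis(utterance: str, target_label: Optional[str]) -> str:
    """Replace the first 'this'/'that' with the gazed-at object (hypothetical helper)."""
    if target_label is None:
        # No gaze target: leave the command unchanged so the assistant can ask.
        return utterance
    # A function replacement avoids re.sub treating backslashes in the label as escapes.
    return DEICTIC.sub(lambda _m: f"the {target_label}", utterance, count=1)


if __name__ == "__main__":
    print(resolve_deixis("Add this to my shopping list", "chair"))
    print(resolve_deixis("Turn that on", "smart TV"))
    print(resolve_deixis("What is this?", None))
```

This naive substitution also rewrites non-pointing uses of “that” (for example, “Tell me that it's open”). A real system would more likely hand the target to the assistant as structured context alongside the original utterance.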

Tiring for the Arms… for Now

One of the biggest drawbacks of this technology is that the user must keep holding the smartphone. Otherwise, the cameras cannot see the user’s head or the scene in front of them. This is why the technology is still only a proof of concept. However, the researchers plan to bring the software to smart glasses in the future.

The team has also released a YouTube video demonstrating the technology, along with a full research paper describing their work.

Webhiggs Brings You the Latest Digital and Tech News Along With Science, Education and Health Updates. Read Globally Trending and Popular News. Visit our website: Webhiggs.