- Generative AI is an Artificial Intelligence system that can generate new content such as text, code, images, music, videos, audio, and more.

- Most leading Generative AI models today are Transformer-based. LLMs as well as image and video generation models all fall under Generative AI.

- Generative AI models are large-scale neural networks, and training them requires a massive amount of data.

The age of artificial intelligence is here, and Generative AI is playing a pivotal role in bringing unprecedented advancements to everyday technology. There are already several free AI tools that can help you generate incredible images, text, music, videos, and a lot more within a few seconds. But what exactly is Generative AI, and how is it fueling such rapid innovation? To learn more, follow our detailed explainer on Generative AI.

What is Generative AI?

As the name suggests, Generative AI is a type of AI technology that can generate new content based on the data it has been trained on. It can generate text, code, images, audio, videos, and synthetic data. Generative AI can produce a wide range of outputs based on user input, or what we call “prompts”. In essence, Generative AI is a subfield of machine learning in which a model learns the patterns in a dataset and uses them to create new data.

If a large language model (LLM) has been trained on a massive volume of text, it can produce meaningful human language. In general, the larger the training dataset, the better the output. If the dataset has also been cleaned prior to training, you are more likely to get a nuanced response.

Similarly, if you train a model on a large corpus of images with tags, captions, and lots of visual examples, the AI model can learn from these examples and perform image classification and generation. The underlying system that learns from examples in this way is called a neural network.

That said, there are different kinds of Generative AI models. These include Generative Adversarial Networks (GAN), Variational Autoencoders (VAE), Generative Pretrained Transformers (GPT), Autoregressive models, and more. We are going to briefly discuss these generative models below.

At present, GPT-style, Transformer-based models have become popular following the release of ChatGPT. Nearly all mainstream AI models, including GPT-5, Gemini 3 Pro, and Claude 4.5 Sonnet, are built on the Transformer architecture. So in this explainer, we are going to mainly focus on Generative AI and GPT (Generative Pretrained Transformer).

What Are the Different Types of Generative AI Models?

Amongst all the Generative AI models, GPT is favored by many, but let’s start with GAN (Generative Adversarial Network). In this architecture, two parallel networks are trained, of which one is used to generate content (called generator) and the other one evaluates the generated content (called discriminator).

Basically, the aim is to pit two neural networks against each other to produce results that mirror real data. GAN-based models have been mostly used for image-generation tasks.

Next up, we have the Variational Autoencoder (VAE), which works by encoding, learning, decoding, and generating content. For example, given an image of a dog, the encoder compresses it into a compact description of features such as color, size, and ears, and in doing so learns what kind of characteristics a dog has. The decoder then recreates a rough, simplified image from those key features. Finally, by varying the features, the model can generate new images with more variety and nuance.

Moving to Autoregressive models, these generate a sequence one piece at a time, predicting the next part based on everything generated so far. They are mostly used for generating text. GPT-style Transformers are autoregressive too, but earlier autoregressive models, such as those built on recurrent networks, lacked self-attention. Next, we have Normalizing Flows and Energy-based Models as well. But finally, we are going to talk about the popular Transformer-based models in detail below.

What Is a Generative Pretrained Transformer (GPT) Model?

Before the Transformer architecture arrived, Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs), along with GANs and VAEs built on top of them, were extensively used for Generative AI. In 2017, researchers working at Google released a seminal paper, “Attention Is All You Need” (Vaswani, Uszkoreit, et al., 2017), which advanced the field of Generative AI and made something like a large language model (LLM) possible.

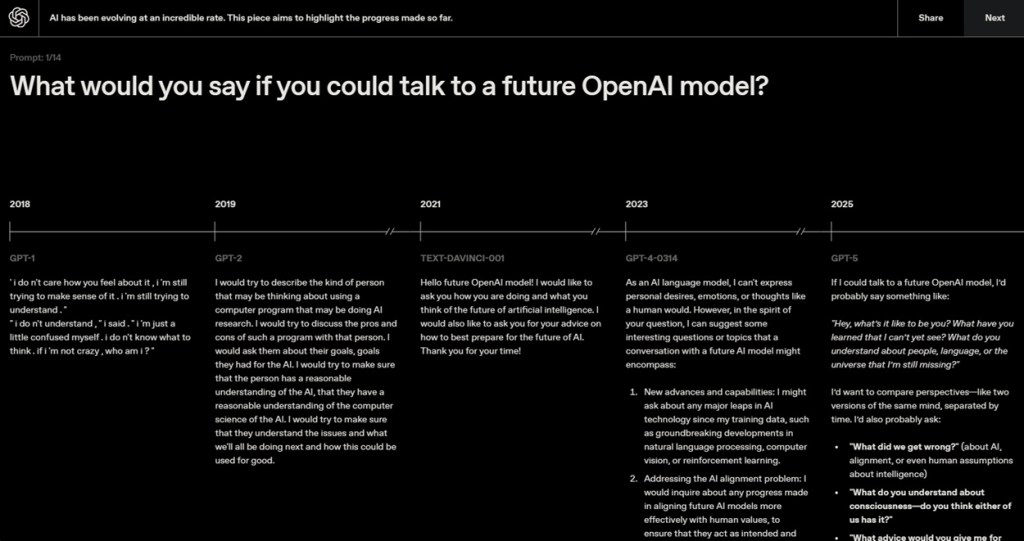

Google subsequently released the BERT model (Bidirectional Encoder Representations from Transformers) in 2018, implementing the Transformer architecture. In the same year, OpenAI released its first GPT model, GPT-1, also based on the Transformer architecture.

So what was the key ingredient in the Transformer architecture that made it a favorite for Generative AI? As the paper's title suggests, it built the entire architecture around self-attention, which earlier neural network architectures either lacked or used only as an add-on.

What this means is that a Transformer-based language model predicts the next word in a sentence by weighing every other word in the context, not just the neighboring ones. It assigns more weight to the words that matter most, which lets it understand the context and establish relationships between words.

Through this process, the Transformer develops a reasonable understanding of the language and uses this knowledge to predict the next word reliably. This whole process is called the Attention mechanism.
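To make the idea more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration only: the three tokens and their two-dimensional embeddings are made up, and the same vectors are reused as queries, keys, and values, whereas a real Transformer learns separate projections for each.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence.

    Each argument is a list of equal-length vectors, one per token.
    Returns (outputs, weights), where weights[i][j] is how much
    token i attends to token j.
    """
    dim = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this token's query with every token's key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        row = softmax(scores)
        weights.append(row)
        # Weighted average of all value vectors.
        out = [sum(w * v[d] for w, v in zip(row, values)) for d in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights

# Hypothetical 2-dimensional embeddings for a three-token sentence.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Assumption for simplicity: the embeddings serve as queries, keys, and values.
outputs, weights = self_attention(embeddings, embeddings, embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sentence, including itself. The weights in a row always add up to 1.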

That said, keep in mind that LLMs have been critically described as Stochastic Parrots (Bender, Gebru, et al., 2021) because, on this view, the model is simply stitching together words based on probabilistic decisions and patterns it has learned. It does not determine the next word based on logic and does not have any genuine understanding of the text.
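The "probabilistic decisions" mentioned above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the candidate words and their raw scores are invented, and the `temperature` parameter is a common sampling knob rather than anything described in this article.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for the word that follows "The sky is".
candidates = ["blue", "cloudy", "falling"]
scores = [3.0, 2.0, 0.5]

probabilities = softmax(scores)

# Pick the next word at random, weighted by probability.
rng = random.Random(0)  # fixed seed so the run is repeatable
next_word = rng.choices(candidates, weights=probabilities, k=1)[0]

print({w: round(p, 2) for w, p in zip(candidates, probabilities)})
print("sampled:", next_word)
```

The most likely word is usually chosen, but not always, which is why the same prompt can produce different answers on different runs.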

Coming to the “pretrained” term in GPT, it means that the model has already been trained on a massive amount of text data before it is fine-tuned or put to use on a specific task. Through pre-training, it learns sentence structure, grammar, patterns, facts, phrases, and more. This gives the model a good understanding of how language syntax works.

How Do Google and OpenAI Approach Generative AI?

Both Google and OpenAI are using Transformer-based models in Gemini and ChatGPT, respectively. They leverage the Transformer architecture to predict the next word in a sequence – from left to right. These are autoregressive models designed to generate coherent sentences, continuing the prediction until they have generated a complete response.
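The left-to-right loop described above can be sketched with a toy model. The example below is an assumption-laden stand-in: it uses simple word-pair counts from a made-up sentence instead of a Transformer, but the generation loop has the same shape, with each predicted word fed back in as context for the next prediction.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=8):
    """Generate left to right, feeding each prediction back in as context."""
    output = [start]
    while len(output) < max_words:
        options = follows.get(output[-1])
        if not options:  # no known continuation, so stop
            break
        # Greedy choice: take the most frequent next word.
        output.append(options.most_common(1)[0][0])
    return " ".join(output)

# Hypothetical one-sentence training corpus.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

A real LLM replaces the word-pair counts with a neural network that looks at the whole context, and it usually samples from the predicted probabilities rather than always taking the top choice.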

To offer Generative AI capabilities, OpenAI and Google use self-attention mechanisms and feed-forward neural networks to understand context and generate human-like text. Both companies have since come up with multimodal AI models, which are trained on text, images, video, audio, and code all at once.

Applications of Generative AI

Generative AI has wide-ranging applications, not just for text but also for image, video, and audio generation, and much more. AI chatbots like ChatGPT, Gemini, and Copilot leverage Generative AI. It can also be used for autocompletion, text summarization, virtual assistance, translation, and more. For music generation, we have seen examples like Google MusicLM and Suno.

Apart from that, image generators from Midjourney to Stable Diffusion use Generative AI to create realistic images from text descriptions. In video generation too, models like Google’s Veo 3.1 and OpenAI’s Sora 2 produce lifelike videos. Most current image and video models of this kind rely on diffusion-based techniques, often combined with Transformers, rather than Generative Adversarial Networks.

Further, Generative AI has applications in 3D model generation, where datasets such as DeepFashion and ShapeNet are commonly used for training and evaluation.

Not just that, Generative AI can be of huge help in drug discovery too. It can help design novel drug candidates for specific diseases. We have already seen models like AlphaFold 3, developed by Google DeepMind, which predicts the structure of proteins and other biomolecules to support drug discovery. Finally, Generative AI can be used for predictive modeling to forecast future events in finance and weather.

Limitations of Generative AI

While Generative AI has immense capabilities, it is not without failings. First off, it requires a large corpus of data to train a model. For many small startups, high-quality data might not be readily available. We have already seen companies such as Reddit, Stack Overflow, and Twitter closing access to their data or charging high fees for access.

Recently, The Internet Archive reported that its website had become inaccessible for an hour because some AI startup started hammering its website for training data.

Apart from that, Generative AI models have also been heavily criticized for bias. AI models trained on skewed data from the internet can overrepresent a section of the community. We have seen how AI photo generators mostly render images in lighter skin tones.

Then, there is the huge issue of deepfake video and image generation using Generative AI models. As stated earlier, Generative AI models do not understand the meaning or impact of their words and usually mimic output based on the data they have been trained on.

It’s highly likely that, despite best efforts at alignment, companies will have a hard time taming Generative AI’s limitations, including misinformation, deepfake generation, jailbreaking, and sophisticated phishing attempts that exploit its persuasive natural language capability.