While you may be familiar with RAM, the vital PC component that helps your computer run smoothly and not crash after opening more than four Chrome tabs, you may be wondering what DRAM is. Is it vastly different from RAM? The world of computers is full of jargon, and keeping up with the latest technologies (and their naming schemes) can be overwhelming. Fret not, for we are here to help! In this guide, let’s start by understanding what DRAM means and then look at the various types of DRAM.

What is DRAM?

DRAM, or Dynamic Random Access Memory, is a temporary memory bank for your computer where data is stored for quick, short-term access. When you perform any task on your PC, such as launching an application, program data is pulled from your storage device (SSD/HDD) and loaded into DRAM. Since DRAM is significantly faster than your storage devices (even SSDs), the CPU can read this data much more quickly, resulting in better performance. The speed and capacity of your DRAM help determine how fast applications can run and how efficiently your PC can multitask. Hence, faster and higher-capacity DRAM is generally beneficial.

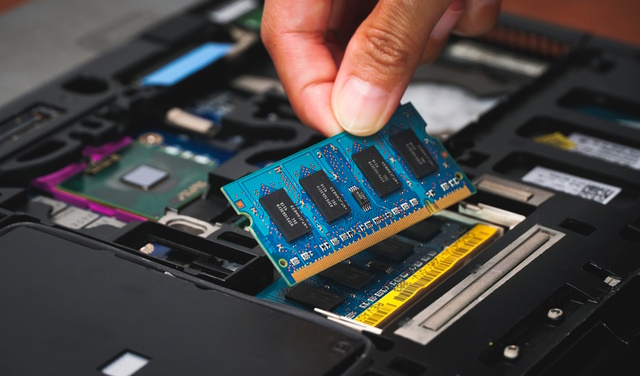

DRAM is the most common type of RAM we use today. The RAM DIMMs (dual in-line memory modules) or sticks that we install in our computers are, in fact, DRAM sticks. But what exactly makes DRAM dynamic? Let’s find out!

How Does DRAM Work?

By design, DRAM is volatile memory, which means it can only hold data for a short period. Each DRAM cell is built from one transistor and one capacitor, with the data stored as electrical charge in the capacitor. This charge slowly leaks away over time (partly through the transistor), and once too much of it is gone, the information stored in the cell is lost. Hence, each DRAM cell must be refreshed (read and recharged) periodically, typically at least once every 64 milliseconds, to hold onto its data. When DRAM loses access to power (such as when you turn off your PC), all data stored within it is lost too. This need for constant refreshing is what makes DRAM dynamic. Static memory, like SRAM (Static Random Access Memory), does not need to be refreshed.
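To make the refresh idea concrete, here is a toy Python model of a single cell, sketched under made-up assumptions: the stored charge leaks away exponentially with a hypothetical 200 ms time constant, and anything at or above half charge reads as a 1. Real retention times vary from cell to cell and with temperature; the 64 ms refresh interval used below is the commonly cited DRAM refresh window.

```python
import math


class ToyDramCell:
    """Toy model of one DRAM cell: a capacitor whose charge leaks away exponentially."""

    def __init__(self, leak_time_constant_ms: float, read_threshold: float = 0.5):
        self.tau = leak_time_constant_ms    # hypothetical leakage time constant
        self.threshold = read_threshold     # charge fraction at or above which we read a 1
        self.charge = 0.0

    def write(self, bit: int) -> None:
        self.charge = 1.0 if bit else 0.0

    def wait(self, ms: float) -> None:
        # Exponential decay: the capacitor loses a fixed fraction of its charge per unit time.
        self.charge *= math.exp(-ms / self.tau)

    def read(self) -> int:
        return 1 if self.charge >= self.threshold else 0

    def refresh(self) -> None:
        # A refresh senses the current value and writes it back at full strength.
        self.write(self.read())


def simulate(total_ms: float, refresh_interval_ms=None, tau_ms: float = 200.0) -> int:
    """Store a 1, let time pass in 1 ms steps, optionally refresh, and return what we read."""
    cell = ToyDramCell(tau_ms)
    cell.write(1)
    since_refresh = 0
    for _ in range(int(total_ms)):
        cell.wait(1)
        since_refresh += 1
        if refresh_interval_ms is not None and since_refresh >= refresh_interval_ms:
            cell.refresh()
            since_refresh = 0
    return cell.read()


print(simulate(1000, refresh_interval_ms=64))  # 1: refreshed in time, data survives
print(simulate(1000))                          # 0: never refreshed, the 1 has leaked away
```

With these made-up numbers, an unrefreshed cell drops below the read threshold after roughly 139 ms (200 × ln 2), while a cell refreshed every 64 ms keeps its 1 indefinitely.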

DRAM vs SRAM

There are two main classifications of primary memory — DRAM (Dynamic Random Access Memory) and SRAM (Static Random Access Memory). While we have learned what DRAM is and how it works, how does it compare to SRAM?

SRAM uses a six-transistor memory cell to store data, as opposed to the single transistor and capacitor pair used by DRAM. SRAM is an on-chip memory typically used as cache memory for CPUs. It’s considerably faster than DRAM and, since it needs no refreshing, more power efficient too. However, it is also significantly more expensive to produce and isn’t user-replaceable or upgradeable. DRAM, on the other hand, is often user-replaceable. Here are the key differences between DRAM and SRAM:

| DRAM | SRAM |
|---|---|
| Stores data in capacitors | Stores data in transistors (six-transistor cells) |
| Capacitors need constant refreshing to retain data | Doesn’t need refreshing, as it doesn’t use capacitors to store data |
| Slower than SRAM | Significantly faster than DRAM |
| Cheaper to manufacture | Very expensive |
| High density | Low density |
| Used as main memory | Used as cache memory for CPUs |
| Higher power consumption due to constant refreshing | Lower power consumption, as no refreshing is needed |

Types of DRAM

Now that you know how dynamic RAM works, let’s look at the five different types of DRAM:

ADRAM

Early DRAM modules operated asynchronously, that is, independently of the system clock. These are known as ADRAM (Asynchronous DRAM). Here, the memory would receive a request from the CPU to access certain information, process that request, and only then return the data. Thus, the memory could only handle requests one at a time, leading to delays.

SDRAM

SDRAM, or Synchronous DRAM, synchronizes its memory access with the system clock. Because the memory and the memory controller share a clock, the controller knows exactly when requested data will be ready, so it can send the next request while earlier ones are still being processed. The RAM and the CPU thus work in tandem, resulting in faster data transfer rates.
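The benefit of overlapping requests can be illustrated with a deliberately simplified cycle-count model in Python. The latency and request counts are hypothetical, and real memory timing involves many more parameters than this sketch captures.

```python
def async_total_cycles(num_requests: int, latency: int) -> int:
    """Asynchronous-style access: each request must complete before the next begins."""
    return num_requests * latency


def pipelined_total_cycles(num_requests: int, latency: int, issue_interval: int = 1) -> int:
    """Synchronous-style access: a new request is issued every `issue_interval` cycles,
    so requests overlap and only the last one waits out the full latency on its own."""
    if num_requests <= 0:
        return 0
    return (num_requests - 1) * issue_interval + latency


requests, latency = 8, 5  # hypothetical values
print("one at a time:", async_total_cycles(requests, latency))   # 40
print("pipelined:", pipelined_total_cycles(requests, latency))    # 12
```

The gap grows with the number of requests, since the pipelined total only adds one issue interval per extra request instead of a full latency.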

DDR SDRAM

Here, DDR stands for Double Data Rate, not Dance Dance Revolution, although it certainly gave users a reason to dance when it first launched back in 2000.

As you may have guessed from the name, Double Data Rate SDRAM is a faster version of SDRAM with almost twice the bandwidth. It transfers data on both edges of the clock signal (once when it rises and once when it falls), while standard SDRAM only transfers data on the rising edge.
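A small Python sketch shows the difference: it walks a sampled clock signal and counts how many transfers happen with single-edge versus both-edge signaling. The clean 0/1 waveform is an idealized assumption; real clock signals are analog.

```python
def count_transfers(clock_samples, double_data_rate):
    """Count data transfers in a sampled square-wave clock (0 = low, 1 = high).

    Single data rate transfers only on rising edges (0 -> 1);
    double data rate also transfers on falling edges (1 -> 0)."""
    transfers = 0
    for prev, curr in zip(clock_samples, clock_samples[1:]):
        rising = prev == 0 and curr == 1
        falling = prev == 1 and curr == 0
        if rising or (double_data_rate and falling):
            transfers += 1
    return transfers


clock = [0, 1] * 4 + [0]  # four full clock cycles
print(count_transfers(clock, double_data_rate=False))  # 4
print(count_transfers(clock, double_data_rate=True))   # 8
```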

DDR memory used a 2-bit prefetch buffer: each internal memory access fetches two bits per data line at once, which are then sent out on consecutive clock edges. This resulted in significantly faster data transfer rates. As the years progressed, we got newer generations of DDR SDRAM.

DDR2 SDRAM

DDR2 memory was introduced in 2003 and was twice as fast as DDR. While its internal memory core runs at the same clock speed as DDR’s, it doubles the prefetch buffer to 4 bits and runs its I/O bus at twice the internal clock, reaching data transfer rates of 533 to 800MT/s. DDR2 RAM can also be installed in pairs for a dual-channel configuration (that we gamers all know and love) for increased memory throughput.
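The relationship between internal clock, prefetch, and data rate boils down to a one-line calculation, sketched here in Python. This is a simplified rule of thumb that assumes a 200 MHz internal core clock for the example modules and ignores I/O clock details and timing overheads.

```python
def data_rate_mts(core_clock_mhz: float, prefetch: int) -> float:
    """Approximate data rate in MT/s: the memory core delivers `prefetch` bits per
    data line on every internal clock cycle, and the I/O bus streams them out."""
    return core_clock_mhz * prefetch


# Same 200 MHz internal core clock across three generations
for name, prefetch in [("DDR-400", 2), ("DDR2-800", 4), ("DDR3-1600", 8)]:
    print(name, data_rate_mts(200, prefetch))
```

Keeping the slow internal core clock fixed while doubling the prefetch is what lets each generation double its data rate without making the memory cells themselves any faster.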

DDR3 SDRAM

DDR3 first came around in 2007 and continued the trend of doubling the prefetch buffer (8-bit) and improving transfer speeds (800 to 2133MT/s). However, it had another trick up its sleeve: an approximately 40% reduction in power consumption. While DDR2 ran at 1.8 volts, DDR3 ran at anywhere between 1.35 and 1.5 volts. With better transfer speeds and lower power consumption, DDR3 became a terrific option for laptop memory.
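A back-of-the-envelope check in Python shows where a figure like 40% can come from, assuming the textbook approximation that dynamic power scales with the square of the supply voltage (P ∝ C·V²·f) at the same capacitance and frequency. Real module power also depends on clock speed, density, and I/O design, so treat this as a rough estimate only.

```python
def relative_dynamic_power(v_new: float, v_old: float) -> float:
    """Ratio of new to old dynamic power, assuming P ∝ C·V²·f with C and f unchanged."""
    return (v_new / v_old) ** 2


for v in (1.5, 1.35):
    saving = 1 - relative_dynamic_power(v, 1.8)
    print(f"DDR3 at {v} V vs DDR2 at 1.8 V: about {saving:.0%} less dynamic power")
```

Under this assumption, the savings come out to roughly 31% at 1.5 V and 44% at 1.35 V.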

DDR4 SDRAM

DDR4 launched seven years later with a lower operating voltage and significantly higher transfer rates than DDR3. It operates at 1.2 volts, with standard data rates ranging from 2133 to 3200MT/s (and beyond 5000MT/s with overclocked kits). DDR4 is the most common type of DRAM used in computers today, although DDR5 is now picking up pace.

DDR5 SDRAM

DDR5 is the most recent generation of DDR memory and was introduced in 2021. While its operating voltage has dropped only slightly (to 1.1 volts), its performance has jumped considerably: DDR5 offers up to roughly double the bandwidth of DDR4.

One of the best things about DDR5 is its channel efficiency. Most DDR4 modules had a single 64-bit channel, which meant you needed to install 2 separate modules in the appropriate RAM slots on your motherboard to take advantage of the dual-channel configuration. Check out our article on single channel vs dual channel RAM to learn about the performance benefits of dual-channel memory.

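DDR5 memory modules, on the other hand, split their 64-bit bus into two independent 32-bit subchannels, so a single stick can serve two memory requests at once. Keep in mind that the total bus width per stick is still 64 bits, so you still need two sticks for a true dual-channel setup.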
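Why aren’t narrower subchannels a downgrade? DDR5 also doubles the burst length (the number of back-to-back transfers per request) from 8 on DDR4 to 16, so a 32-bit subchannel still returns a full 64-byte chunk per request. The Python arithmetic below sketches that reasoning; the burst lengths are the standard DDR4/DDR5 values, and the 64-byte cache line is an assumption about a typical CPU.

```python
CACHE_LINE_BYTES = 64  # assumed: typical CPU cache line size


def bytes_per_burst(channel_width_bits: int, burst_length: int) -> int:
    """Bytes delivered by one burst: (bits per transfer / 8) * transfers per burst."""
    return channel_width_bits // 8 * burst_length


ddr4 = bytes_per_burst(channel_width_bits=64, burst_length=8)   # one 64-bit channel, BL8
ddr5 = bytes_per_burst(channel_width_bits=32, burst_length=16)  # one 32-bit subchannel, BL16
print(ddr4, ddr5, ddr4 == ddr5 == CACHE_LINE_BYTES)            # 64 64 True
```

Because each subchannel fills a whole cache line on its own, the two subchannels can work on unrelated requests in parallel instead of both serving the same one.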

DDR5 also changes how voltage regulation is handled. For previous generations of DRAM, the motherboard was responsible for voltage regulation. However, DDR5 modules have an onboard power management IC.

| | SDRAM | DDR | DDR2 | DDR3 | DDR4 | DDR5 |
|---|---|---|---|---|---|---|
| Prefetch Buffer | 1-Bit | 2-Bit | 4-Bit | 8-Bit | 8-Bit | 16-Bit |
| Peak Bandwidth per Module (GB/s) | 0.8 – 1.3 | 2.1 – 3.2 | 4.2 – 6.4 | 6.4 – 17.0 | 17 – 25.6 | 25.6 – 51.2 |
| Data Rate (MT/s) | 100 – 166 | 266 – 400 | 533 – 800 | 800 – 2133 | 2133 – 3200 (5100+ overclocked) | 3200 – 6400 |
| Voltage (V) | 3.3 | 2.5 – 2.6 | 1.8 | 1.35 – 1.5 | 1.2 | 1.1 |
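The bandwidth row follows directly from the data rate: a standard module moves 64 bits (8 bytes) per transfer, so peak bandwidth is the data rate multiplied by 8 bytes. The Python helper below is a sketch assuming a 64-bit bus per module and decimal gigabytes; these are theoretical peaks, not measured throughput. The `channels` parameter shows why a dual-channel setup doubles the peak.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int = 64, channels: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes):
    transfers per second * bytes per transfer * number of channels."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


for label, rate in [("SDRAM PC100", 100), ("DDR-400", 400), ("DDR4-3200", 3200), ("DDR5-6400", 6400)]:
    print(f"{label}: {peak_bandwidth_gbs(rate):.1f} GB/s")

print(f"DDR4-3200, dual channel: {peak_bandwidth_gbs(3200, channels=2):.1f} GB/s")
```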

ECC Memory

ECC stands for error-correcting code, and this type of memory contains extra bits compared to standard memory modules. For example, a standard DDR4 module has a 64-bit channel, whereas a DDR4 ECC module has a 72-bit channel. The extra 8 bits store an error-correcting code computed from the data. But why do we need ECC in the first place? Do errors occur randomly and regularly?

Memory errors are rare, but they do happen, usually because of interference. Electrical or magnetic interference, or even cosmic rays and other naturally occurring background radiation, can cause a single bit of DRAM to spontaneously flip to the opposite state.

Each byte is made of 8 bits. Take 00100100, for instance. If interference causes one of these bits to flip, we might end up with 00100101 instead. If these bits represent text, the change will result in garbled or corrupted data. ECC checks data for such errors as it is read and corrects them.
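Python can demonstrate the flip described above. Reading the byte as an ASCII character is our own assumption, chosen to show how a single flipped bit turns one character into another.

```python
original = 0b00100100
flipped = original ^ 0b00000001  # XOR with a one-bit mask toggles exactly that bit

print(f"{original:08b} -> {chr(original)!r}")  # 00100100 -> '$'
print(f"{flipped:08b} -> {chr(flipped)!r}")    # 00100101 -> '%'
```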

The extra bits on an ECC RAM module store an error-correcting code that is calculated when data is written to memory. When the same data is read back, a new code is calculated, and the two are compared to determine whether any bits have flipped. If a single bit has flipped, ECC can pinpoint and correct it, preventing data corruption. Standard ECC memory can also detect (though not correct) two flipped bits in the same word.
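The compare-and-correct idea can be sketched in Python with a classic Hamming(7,4) code, which protects 4 data bits with 3 check bits and can correct any single flipped bit. This is a simplified stand-in, not what a memory controller literally runs: ECC DIMMs apply the same principle at a larger scale (8 check bits per 64 data bits, plus double-error detection) in hardware. The function names are our own. The "syndrome" computed on read is effectively the difference between the stored check bits and freshly recalculated ones.

```python
DATA_POSITIONS = (3, 5, 6, 7)   # 1-indexed codeword positions that hold data bits
PARITY_POSITIONS = (1, 2, 4)    # powers of two hold the check bits


def syndrome(word):
    """XOR together the (1-indexed) positions of all 1 bits. 0 means no error detected;
    for a single-bit error, the result equals the position of the flipped bit."""
    s = 0
    for pos, bit in enumerate(word, start=1):
        if bit:
            s ^= pos
    return s


def encode(data_bits):
    """Encode 4 data bits into a 7-bit Hamming(7,4) codeword (list index 0 = position 1)."""
    if len(data_bits) != 4:
        raise ValueError("expected exactly 4 data bits")
    word = [0] * 7
    for pos, bit in zip(DATA_POSITIONS, data_bits):
        word[pos - 1] = bit
    s = syndrome(word)
    for p in PARITY_POSITIONS:
        if s & p:               # set check bits so the whole codeword's syndrome becomes 0
            word[p - 1] = 1
    return word


def decode(word):
    """Return (corrected data bits, position of the corrected bit or 0 if none)."""
    word = list(word)
    s = syndrome(word)
    if s:
        word[s - 1] ^= 1        # flip the faulty bit back
    return [word[p - 1] for p in DATA_POSITIONS], s


stored = encode([1, 0, 1, 1])
corrupted = list(stored)
corrupted[4] ^= 1               # simulate a flip at position 5
print(decode(corrupted))        # ([1, 0, 1, 1], 5)
```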

ECC memory is super valuable to businesses handling massive amounts of data, such as cloud service providers and financial institutions. Think about it: if a cloud service like iCloud or Google Drive fell victim to data corruption on their servers, your precious photos and documents could be damaged or lost. We can’t have that now, can we? ECC memory is the way to go for servers and workstations.

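Note: While ECC was an optional feature for DDR4 RAM, all DDR5 chips include on-die ECC. On-die ECC only corrects errors inside the memory chip itself, though, so it is not a full substitute for ECC modules, which also protect data on its way between the memory and the CPU.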

Rambus DRAM

RDRAM was introduced back in the mid-1990s by Rambus, Inc., as an alternative to DDR SDRAM. It featured a synchronous memory interface like SDRAM and faster data transfer rates (266 to 800 MT/s). RDRAM was mainly used for video games and GPUs, and even Intel jumped on board the RDRAM train for a short period until they phased it out in 2001. It was succeeded by XDR (Extreme Data Rate) memory by Rambus, which was used in various consumer devices, including Sony’s PlayStation 3 console. XDR was then superseded by XDR2, but it failed to take off as the DDR standard became more widely adopted.

DRAM in SSDs: What’s the Use?

Unlike mechanical hard drives, SSDs do not store data on a spinning platter. Instead, SSDs write data directly to flash memory cells, known as NAND flash. Because each flash cell can only be written and erased a limited number of times, the SSD periodically moves data from one cell to another so that no single cell wears out prematurely (a technique known as wear leveling). While that’s essential for the drive’s longevity and reliability, how does the drive know where any piece of data is stored if it keeps moving around?

SSDs keep a mapping table that tracks where each block of your data is physically stored. On a DRAM SSD, this map is stored on a dedicated DRAM chip, which works like a super-fast cache. When you open a file, the SSD’s controller can look up its location in DRAM and find it quickly.

However, on DRAM-less SSDs, the data map is stored on the NAND flash, which is much slower than DRAM. It will still be faster than a mechanical hard drive any day but slightly slower than a DRAM SSD.
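A heavily simplified Python sketch of such a mapping table (part of what SSD firmware calls the flash translation layer, or FTL) is shown below. The `ToyFTL` class is hypothetical, and real firmware deals with pages, blocks, and garbage collection in far more detail. The sketch only shows two ideas from this section: writes go to a fresh, least-worn location, and a lookup table records where each logical block currently lives. On a DRAM SSD, that table would sit in DRAM; on a DRAM-less SSD, it would have to be read from slower NAND.

```python
class ToyFTL:
    """Toy flash translation layer: maps logical block addresses (LBAs) to physical blocks."""

    def __init__(self, num_physical_blocks: int):
        self.mapping = {}                        # LBA -> physical block (the "data map")
        self.erase_counts = [0] * num_physical_blocks
        self.free = set(range(num_physical_blocks))
        self.storage = {}                        # physical block -> stored data

    def write(self, lba: int, data: bytes) -> None:
        if not self.free:
            raise RuntimeError("no free physical blocks")
        # Wear leveling: pick the free block erased the fewest times (lowest index breaks ties).
        target = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(target)
        self.storage[target] = data
        old = self.mapping.get(lba)
        self.mapping[lba] = target
        if old is not None:
            # Flash can't be overwritten in place: the stale block is erased and reused later.
            del self.storage[old]
            self.erase_counts[old] += 1
            self.free.add(old)

    def read(self, lba: int) -> bytes:
        return self.storage[self.mapping[lba]]


ftl = ToyFTL(num_physical_blocks=4)
ftl.write(0, b"v1")
ftl.write(0, b"v2")      # same logical block, new physical location
print(ftl.read(0), ftl.mapping[0], ftl.erase_counts)  # b'v2' 1 [1, 0, 0, 0]
```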

DRAM: Advantages & Disadvantages

| Advantages | Disadvantages |
|---|---|
| Simple design: each cell needs only one transistor and one capacitor | Higher power consumption than SRAM, due to constant refreshing |
| Affordability: DRAM is cheaper to produce than most other types of RAM, including SRAM | Volatility: DRAM loses all stored data once power is disconnected |
| Density: DRAM can store more data in the same chip area than most other types of RAM, including SRAM | Data needs to be constantly refreshed |

DRAM in a Nutshell

We have discussed DRAM at length in this article, explaining not only how it works but also how it has evolved over the decades. To recap, DRAM (Dynamic Random Access Memory) is a type of volatile RAM, which means it loses all stored data once power is cut. We covered five types of DRAM, and within DDR SDRAM, DDR5 is the latest generation to pick up pace. We recommend having at least 8GB of DRAM in your PC to keep it running smoothly and stutter-free. However, if you are a heavy gamer or power user, 16GB of RAM would suit you better. If you want to upgrade your RAM but aren’t sure if your PC has an available RAM slot, go through our article on how to check available RAM slots in Windows 11.