- Returning to its roots, OpenAI has released two new open-weight AI models called gpt-oss-120b and gpt-oss-20b.

- The larger gpt-oss-120b model is comparable to o4-mini, and the smaller gpt-oss-20b is closer to o3-mini in performance.

- Both AI models are released under the Apache 2.0 license and can be downloaded from Hugging Face, Ollama, LM Studio, and more.

In a major shift, OpenAI has released two open-weight AI models called gpt-oss-120b and gpt-oss-20b, its first since the release of GPT-2 in 2019. OpenAI claims that its new open-weight AI models deliver state-of-the-art performance in the open-source arena, outperforming similarly sized models. Both are MoE (Mixture of Experts) reasoning models, with 5.1B and 3.6B active parameters, respectively.
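To put the active-parameter figures in perspective, here is a rough sketch of how small a share of each model is used at a time. The total sizes (120B and 20B) are nominal values read off the model names, which is an assumption; the exact parameter counts are not given here.

```python
# Nominal totals are taken from the model names (an assumption, not exact counts).
# Active-parameter figures are the ones quoted in the article.
MODELS = {
    "gpt-oss-120b": {"total_b": 120.0, "active_b": 5.1},
    "gpt-oss-20b": {"total_b": 20.0, "active_b": 3.6},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the model's parameters that are active, as a value in 0..1."""
    return active_b / total_b


for name, sizes in MODELS.items():
    share = active_fraction(sizes["total_b"], sizes["active_b"])
    print(f"{name}: about {share * 100:.2f}% of parameters active")
```

Under these nominal sizes, only a small slice of the larger model is active at once, which is the main reason an MoE model can be cheaper to run than its total size suggests.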

The gpt-oss-120b AI model achieves performance along the lines of o4-mini, and the smaller gpt-oss-20b model is comparable to o3-mini. In its blog post, OpenAI writes, “They were trained using a mix of reinforcement learning and techniques informed by OpenAI’s most advanced internal models, including o3 and other frontier systems.”

Users and developers can run the gpt-oss-120b AI model locally on a single GPU with 80GB of VRAM, while the smaller gpt-oss-20b model can run on laptops and smartphones with just 16GB of memory. In addition, both models have a context length of 128k tokens.
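A back-of-the-envelope estimate shows why these memory figures are plausible. The sketch below assumes nominal sizes of 120B and 20B parameters and compares 16-bit weights with roughly 4-bit quantized weights. Both bit widths are illustrative assumptions, and the estimate ignores the KV cache and other runtime overhead.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate storage for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bits = params_billion * 1e9 * bits_per_param
    return total_bits / 8 / 1e9


# Nominal parameter counts (assumed from the model names) at two bit widths.
for name, params_b in [("gpt-oss-120b", 120.0), ("gpt-oss-20b", 20.0)]:
    for bits in (16, 4):
        gb = weight_memory_gb(params_b, bits)
        print(f"{name} at {bits}-bit: ~{gb:.0f} GB of weights")
```

At 16 bits per weight, neither model would fit in the quoted memory budgets, whereas at around 4 bits per weight the estimates fall under 80GB and 16GB, leaving some headroom for the context.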

OpenAI says these models were trained on a mostly English dataset and excel at STEM, coding, and general knowledge. What is interesting is that both open-weight models support agentic workflows, including tool use such as web search and Python code execution. This means you can use these models locally to complete tasks on your computer. The model itself runs without an internet connection, although tools such as web search still need one.

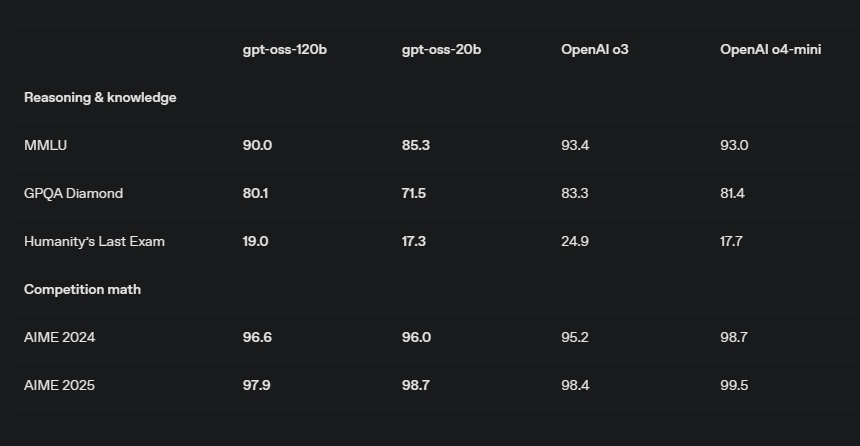

Talking about benchmarks, the larger gpt-oss-120b model nearly matches OpenAI’s flagship o3 model on Codeforces. In the challenging Humanity’s Last Exam benchmark, gpt-oss-120b scores 19% and o3 achieves 24.9% with tool access. Next, in GPQA Diamond, gpt-oss-120b scores 80.1% while o3 scores 83.3%.

Looking at the benchmarks, OpenAI’s open-weight models do look powerful. It will be interesting to see how well gpt-oss-120b and gpt-oss-20b perform against Chinese open-weight AI models such as Qwen and DeepSeek. Both models are released under the Apache 2.0 license and can be downloaded from Hugging Face.

If you want to try the smaller gpt-oss-20b AI model on your PC, you can install Ollama and run `ollama run gpt-oss:20b` to get started right away. These models are also available in LM Studio.
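Once the model has been pulled, you can also query it from a script through Ollama's local HTTP API. The following is a minimal sketch, assuming Ollama is running on its default address (`http://localhost:11434`) and that the `gpt-oss:20b` tag is installed. The helper names `build_generate_request` and `generate` are made up for this example.

```python
import json
import urllib.request

OLLAMA_HOST = "http://localhost:11434"  # Ollama's default local address (assumed)


def build_generate_request(prompt: str, model: str = "gpt-oss:20b",
                           host: str = OLLAMA_HOST) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "gpt-oss:20b") -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With the Ollama server running, calling `generate("Explain Mixture of Experts in one sentence.")` should return the model's reply as a string. Setting `stream` to `False` keeps the sketch simple by asking for a single JSON response instead of a stream of chunks.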