At Google I/O 2023, the search giant finally unveiled PaLM 2, its latest general-purpose large language model. PaLM 2 is the bedrock on which multiple Google products are now being built, including Google Generative AI Search, Duet AI in Google Docs and Gmail, Google Bard, and more. But what exactly is the Google PaLM 2 AI model? Is it better than GPT-4? Does it support plugins? To answer all your questions, go through our detailed explainer on the PaLM 2 AI model released by Google.

What is Google’s PaLM 2 AI Model?

PaLM 2 is the latest Large Language Model (LLM) released by Google that is highly capable in advanced reasoning, coding, and mathematics. It’s also multilingual and supports more than 100 languages. PaLM 2 is a successor to the earlier Pathways Language Model (PaLM) launched in 2022.

The first version of PaLM had 540 billion parameters, making it one of the largest LLMs around. However, in 2023, Google came up with PaLM 2, which is much smaller in size, yet faster and more efficient than the competition.

In PaLM 2’s 92-page technical report, Google has not mentioned the parameter count, but according to a TechCrunch report, one of the PaLM 2 models has only 14.7 billion parameters, which is far fewer than PaLM 1 and other competing models. Some researchers on Twitter say that the largest PaLM 2 model likely has around 100 billion parameters, which is still much lower than the competition.

To give you an idea, OpenAI’s GPT-4 model is said to have 1 trillion parameters, which is just mind-blowing. If these unconfirmed figures are right, GPT-4 is at least 10 times larger than PaLM 2.
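To put those numbers side by side, here is a quick back-of-the-envelope sketch. It uses only the parameter counts quoted above, and apart from the original PaLM figure they are press reports or rumors, so treat the ratios as rough. The dictionary keys and the `size_ratio` helper are our own names, made up for illustration.

```python
# Parameter counts as quoted in this article. The PaLM 2 and GPT-4 numbers
# are unconfirmed reports, not official figures.
REPORTED_PARAMS = {
    "palm_1": 540_000_000_000,            # original PaLM (2022)
    "palm_2_reported": 14_700_000_000,    # one PaLM 2 model, per TechCrunch
    "palm_2_largest": 100_000_000_000,    # largest PaLM 2, Twitter estimate
    "gpt_4_rumored": 1_000_000_000_000,   # GPT-4, rumored
}


def size_ratio(bigger: str, smaller: str) -> float:
    """Return how many times more parameters `bigger` has than `smaller`."""
    return REPORTED_PARAMS[bigger] / REPORTED_PARAMS[smaller]


if __name__ == "__main__":
    pairs = [
        ("gpt_4_rumored", "palm_2_largest"),
        ("gpt_4_rumored", "palm_2_reported"),
        ("palm_1", "palm_2_reported"),
    ]
    for bigger, smaller in pairs:
        print(f"{bigger} vs {smaller}: {size_ratio(bigger, smaller):.1f}x")
```

The first pair is where the "10 times larger" comparison comes from. Against the reported 14.7-billion-parameter model, the gap would be far wider.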

How Did Google Make PaLM 2 Smaller?

In the official blog, Google says that bigger is not always better and research creativity is the key to making great models. Here, by “research creativity,” Google is likely referring to Reinforcement Learning from Human Feedback (RLHF), compute-optimal scaling, and other novel techniques.

Google has not disclosed what research creativity it’s employing in PaLM 2, but it looks like the company might be using LoRA (Low-Rank Adaptation), instruction tuning, and quality datasets to get better results despite using a relatively smaller model.

Overall, PaLM 2 is an LLM that’s faster, relatively smaller, and more cost-efficient because it has fewer parameters to serve. At the same time, it brings capabilities such as common sense reasoning, better logic interpretation, advanced mathematics, multilingual conversation, coding mastery, and more. Those were the basics of the PaLM 2 model. Now let’s go ahead and learn about its features in detail.

What are the Highlight Features of PaLM 2?

As mentioned above, PaLM 2 is faster, highly efficient, and has a lower serving cost. Apart from that, it brings several advanced capabilities. To begin with, PaLM 2 is very good at common sense reasoning. Google, in fact, says that PaLM 2’s reasoning capabilities are competitive with GPT-4. In the WinoGrande commonsense test, PaLM 2 scored 90.2 whereas GPT-4 achieved 87.5. In the ARC-C test, GPT-4 scored a notch higher at 96.3 whereas PaLM 2 scored 95.1. In other reasoning tests, including DROP, StrategyQA, CSQA, and a few others, PaLM 2 outperforms GPT-4.

Not just that, due to its multilingual ability, PaLM 2 can understand idioms, poems, nuanced texts, and even riddles in other languages. It goes beyond the literal meaning of words and understands the ambiguous and figurative meaning behind them. This is because PaLM 2 has been pre-trained on parallel multilingual texts covering many languages. In addition, the corpus of high-quality multilingual data makes PaLM 2 even more powerful. As a result, translation and other such applications work far better on PaLM 2.

Next, we come to its coding capabilities. Google says that PaLM 2 has also been trained on a large corpus of quality, publicly available source code datasets. As a result, it supports more than 20 programming languages, which include Python, JavaScript, C, C++, and even older languages like Prolog, Fortran, and Verilog. It can also generate code, offer context-aware suggestions, translate code from one language to another, add functions with just a comment, and more.

What Can the PaLM 2 Model Do?

First of all, PaLM 2 has been built to be adaptable to different use cases. Google announced that PaLM 2 will come in four sizes: Gecko, Otter, Bison, and Unicorn, with Gecko being the smallest and Unicorn being the largest.

Gecko is so lightweight that it can run even on smartphones while being completely offline. It can process 20 tokens per second on a flagship phone, which is around 16 words per second. That’s awesome, right? Imagine the kind of AI-powered on-device applications you can run on your smartphone without requiring an active internet connection or beefy specs.
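The jump from 20 tokens to roughly 16 words per second implies a ratio of about 0.8 words per token. Here is a small sketch of that arithmetic. The ratio is our assumption, inferred from the two figures above. Real tokenizers vary with the language and the text, and the function names are ours.

```python
# Assumed average ratio, inferred from "20 tokens/s is around 16 words/s".
# This is not an official Google figure.
WORDS_PER_TOKEN = 0.8


def words_per_second(tokens_per_second: float,
                     words_per_token: float = WORDS_PER_TOKEN) -> float:
    """Convert a token throughput into an approximate word throughput."""
    return tokens_per_second * words_per_token


def seconds_to_write(word_count: int, tokens_per_second: float,
                     words_per_token: float = WORDS_PER_TOKEN) -> float:
    """Estimate how long a reply of `word_count` words takes to generate."""
    return word_count / words_per_second(tokens_per_second, words_per_token)


if __name__ == "__main__":
    rate = words_per_second(20)
    print(f"About {rate:.0f} words per second")
    print(f"A 160-word reply takes about {seconds_to_write(160, 20):.0f} seconds")
```

Under that assumption, a 160-word on-device reply would take around 10 seconds, which is slow next to a cloud model but perfectly usable offline.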

Apart from that, PaLM 2 can be fine-tuned to make a domain-specific model right away. Google has already created Med-PaLM 2, a medical-specific LLM fine-tuned on PaLM 2 that achieved “Expert” level competency on U.S. Medical Licensing Exam-style questions. It achieved an accuracy of 85.4% in the USMLE test, even higher than GPT-4 (84%). That said, do bear in mind that GPT-4 is a general-purpose LLM and not fine-tuned for medical knowledge.

Moving ahead, Google has added multimodal capability to Med-PaLM 2. It can analyze images like X-rays and mammograms and come up with conclusions, in line with expert clinicians. That’s pretty remarkable as it can bring much-needed medical access to remote areas around the world. Besides that, Google has developed Sec-PaLM, a specialized version of PaLM 2 for cybersecurity analysis that can quickly detect malicious threats.

PaLM 2-Powered Google Products

These are all different use cases of PaLM 2 in different spheres and industries. As for individual consumers, you can experience PaLM 2 in action through Google Bard, Google Generative AI Search, and Duet AI in Gmail, Google Docs, and Google Sheets. Google recently moved Bard, its interactive AI chatbot, to PaLM 2 and opened up access to more than 180 countries. You can follow our article and learn how to use Google Bard right now.

As for using PaLM 2 in Gmail, Google Docs, and Sheets (Google is calling it Duet AI for Google Workspace), you need to join the waitlist to take advantage of the AI-powered features. Finally, for developers, Google has released the PaLM API which is based on the PaLM 2 model. You can sign up right now to use the PaLM API in your products. It can generate more than 75 tokens per second and has a context window of 8,000 tokens.
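If you are planning to build on the API, the two numbers above already tell you a fair bit. Below is a rough planning sketch, not PaLM API code: it makes no network calls, estimates tokens from a plain word count instead of a real tokenizer, and assumes the prompt and the response share the 8,000-token window, which may not match how the API actually counts them. All names are hypothetical.

```python
import math

CONTEXT_WINDOW_TOKENS = 8_000   # context window quoted above
MIN_TOKENS_PER_SECOND = 75      # "more than 75 tokens per second"
TOKENS_PER_WORD = 1.25          # assumption: about 0.8 words per token


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count."""
    return math.ceil(len(text.split()) * TOKENS_PER_WORD)


def fits_in_context(prompt_tokens: int, max_output_tokens: int,
                    window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Check that prompt plus response stay inside the (assumed shared) window."""
    return prompt_tokens + max_output_tokens <= window


def max_generation_seconds(output_tokens: int,
                           tokens_per_second: float = MIN_TOKENS_PER_SECOND) -> float:
    """Upper bound on generation time if the API sustains at least this rate."""
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    prompt = "Summarize the key features of PaLM 2 in three bullet points."
    prompt_tokens = estimate_tokens(prompt)
    print(f"Estimated prompt tokens: {prompt_tokens}")
    print(f"Fits with a 1,000-token reply: {fits_in_context(prompt_tokens, 1_000)}")
    print(f"A 750-token reply takes at most {max_generation_seconds(750):.0f} seconds")
```

For production use, you would replace the word-count estimate with the token counts reported by the API itself.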

PaLM 2 vs GPT-4: How Do the AI Models Compare?

Before comparing the capabilities, one thing is clear — PaLM 2 is fast. I mean, it’s fast at responding to queries, even complex reasoning questions. Not just that, it offers three drafts at once, in case you are not satisfied with the default response. Thus, from an efficiency and computing standpoint, Google is a step or two ahead of OpenAI. Read about all the new features of Google Bard AI here.

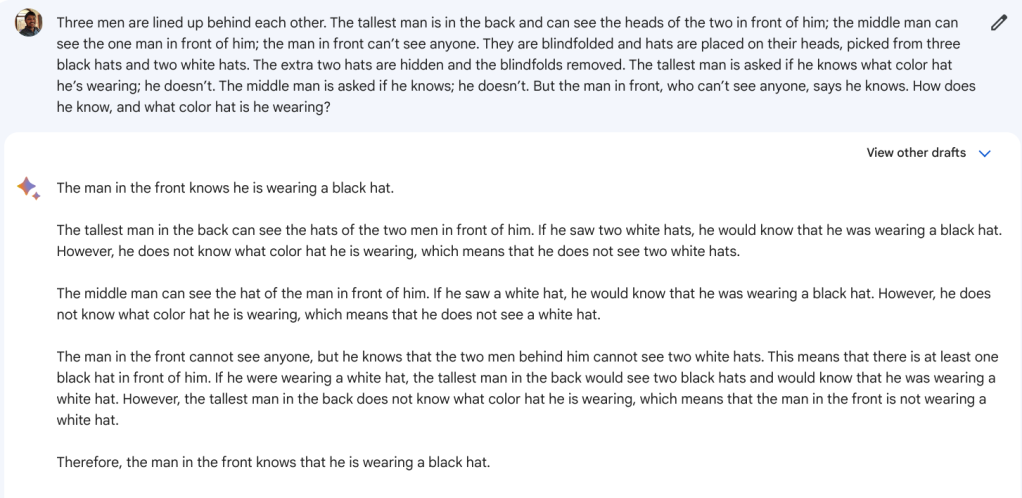

As far as capabilities are concerned, we tested the reasoning skills of both models, and PaLM 2-powered Google Bard truly shines in such tests. Out of 3 reasoning questions, Bard answered all 3 correctly, whereas ChatGPT-4 got only 1 right. In one instance, Bard’s reasoning was wrong (it seemed to hallucinate), but it somehow gave the right answer.

Apart from that, for coding tasks, I asked Bard to find a bug in the code I provided, but it gave a lengthy response to fix the issues, which turned out to be entirely wrong. However, ChatGPT-4 instantly identified the coding syntax, spotted the error, and fixed the code without further prompting.

I also asked both models to implement Dijkstra’s algorithm in Python, and both generated error-free code. I ran both implementations and neither threw any errors. That said, ChatGPT-4 generates clean code with some examples whereas Bard only implements the barebones function.
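For reference, here is a minimal sketch of the kind of function the task calls for. This is our own illustration using Python’s standard `heapq` module, not the output of either chatbot, and the sample graph is made up.

```python
import heapq


def dijkstra(graph, source):
    """Return the shortest distance from `source` to every reachable node.

    `graph` maps each node to a list of (neighbor, weight) pairs.
    Weights must be non-negative for Dijkstra's algorithm to be correct.
    """
    dist = {source: 0}
    heap = [(0, source)]  # min-heap of (distance so far, node)
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter path was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist


if __name__ == "__main__":
    sample = {
        "A": [("B", 1), ("C", 4)],
        "B": [("C", 2), ("D", 5)],
        "C": [("D", 1)],
        "D": [],
    }
    print(dijkstra(sample, "A"))  # {'A': 0, 'B': 1, 'C': 3, 'D': 4}
```

A barebones answer stops at the function itself. The usage example at the bottom is the sort of extra that made ChatGPT-4’s response feel more complete.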

Limitations of Google PaLM 2

Now coming to limitations, we already know that ChatGPT plugins are powerful and can enhance GPT-4’s capabilities by miles. With just the Code Interpreter plugin, users are able to do so much more with ChatGPT. Google has also announced “Tools” similar to plugins, but they are not live yet and third-party support seems lackluster at present. Meanwhile, developer support for OpenAI is huge.

Next, GPT-4 is a multimodal model meaning it can analyze both texts and images. Multimodality has a number of interesting use cases. You can ask ChatGPT to study a graph, table, medical report, medical imaging, and more. Yes, the feature has not been added to ChatGPT yet, but we have seen an early demo and it seemed very impressive. On the other hand, PaLM 2 is not a multimodal model as it only deals with texts.

The search giant has fine-tuned PaLM 2 to create Med-PaLM 2 which is indeed multimodal, but it’s not open for public use and is limited to the medical domain only. Google says that the next-generation model called Gemini will be multimodal with groundbreaking features, but it’s still being trained and is months away from release. Google has promised to bring Lens support to Bard, but it’s not the same as an AI-powered visual model.

Finally, in comparison to GPT-4, Google Bard hallucinates a lot (in one example, Bard claimed that the PaLM AI model was created by OpenAI). It makes up information on the fly and confidently responds with false information. GPT-3 and GPT-3.5 also had a similar problem, but OpenAI has managed to reduce hallucination by 40% with the release of GPT-4. Google needs to address the same hallucination problem “boldly and responsibly.”

Conclusion: PaLM 2 or GPT-4?

In summation, Google’s PaLM 2 AI model has improved in some areas such as advanced reasoning, translation, multilingual capabilities, maths, and coding. Moreover, it has the added benefit of running a smaller model with fast performance and low serving costs. However, to reach feature parity with GPT-4, Google needs to add multimodality and third-party tools (plugins), address the hallucination issue, and make its AI models as developer-friendly as possible.