- Google has introduced a highly optimized Gemini Robotics On-Device model that can run locally on robotic devices.

- Across benchmarks, the On-Device model performs nearly on par with the state-of-the-art Gemini Robotics model.

- Google is offering access to the On-Device model via the Gemini Robotics SDK.

Following the March release of Gemini Robotics, Google DeepMind’s state-of-the-art vision-language-action (VLA) model, the company has now introduced Gemini Robotics On-Device. As the name suggests, it’s a VLA model that runs locally on robotic devices, so it doesn’t need an internet connection to reach a model hosted in the cloud.

The Gemini Robotics On-Device model has been efficiently trained and shows “strong general-purpose dexterity and task generalization.” It can be used with bi-arm robots and fine-tuned for new tasks. Because it runs locally, its low-latency inference supports rapid experimentation with dexterous manipulation.

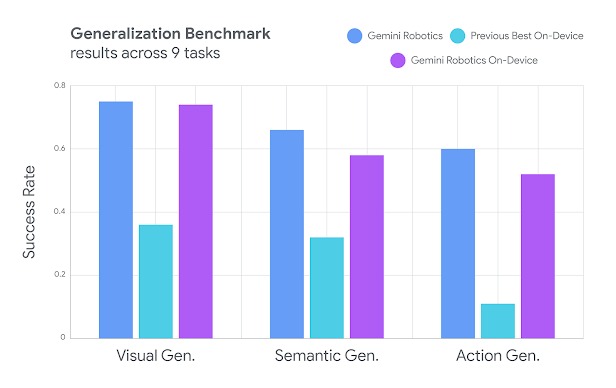

It can perform highly dexterous tasks like unzipping bags or folding clothes. The On-Device model shows strong visual, semantic, and behavioral generalization across a wide range of tasks. In fact, in Google’s own Generalization Benchmark, the Gemini Robotics On-Device model performs nearly on par with its cloud counterpart, Gemini Robotics.

Moreover, in both instruction following and fast adaptation to new tasks, the On-Device model achieves performance comparable to the cloud model. This shows that Google DeepMind has trained both a frontier AI model and a highly optimized local model for robotics. If you are interested in testing the model, you can sign up for Google’s trusted tester program and access the Gemini Robotics SDK.