- Artificial Intelligence hallucinations are false, misleading, or fabricated information generated by AI systems.

- AI hallucinations often occur when the AI system doesn't have complete information on a topic, and smaller AI models tend to hallucinate more.

- Researchers say that, to reduce hallucinations, AI models should be taught to say "I don't know" when they are uncertain.

In early 2024, Air Canada had to pay a customer because its AI chatbot “hallucinated” and invented a policy that didn’t exist. The chatbot told the customer he could claim a bereavement discount after booking his ticket, but the airline’s actual policy did not allow that. It became one of the best-known cases of an AI hallucination with real consequences, costing the airline about CA$812 in damages and fees. But what exactly are AI hallucinations, and why do they occur? To understand this problem, let’s dive in.

What are Artificial Intelligence Hallucinations?

Artificial Intelligence hallucinations occur when an AI system generates false, misleading, or entirely fabricated information with full confidence. It’s a challenging problem in the AI field, where chatbots confidently present incorrect responses as verified facts. What makes AI hallucinations dangerous is that they sound plausible and convincing.

Note that AI hallucinations are not grammar errors or typos. The output looks factual on the surface and is grammatically sound. In fact, a recent study found that AI models use more confident language when they hallucinate than when they provide factual information.

Artificial Intelligence hallucinations are also not limited to text. They occur in AI-generated images and videos too, for example as extra fingers or distorted human features. In all of these cases, the AI system makes up details that don’t exist.

Why Do AI Chatbots Hallucinate?

To understand why AI chatbots hallucinate, we must understand how large language models (LLMs) work. Unlike humans, LLMs lack a genuine understanding of words and of the world. They are trained on vast amounts of text, from which they learn statistical patterns. Using these learned probabilities, an LLM predicts which word (more precisely, which token) is most likely to come next in a sequence.
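To make next-word prediction concrete, here is a toy sketch in Python. It is a simple bigram model, far cruder than a real LLM (which uses a neural network over tokens rather than raw word counts), and the three-sentence corpus with made-up telescope names is purely a hypothetical assumption for illustration. The model only counts which word tends to follow which and greedily picks the most frequent continuation; nothing in it checks whether the resulting sentence is true.

```python
from collections import Counter, defaultdict

# Hypothetical training corpus with made-up telescope names (illustration only).
CORPUS = [
    "the zeta telescope took sharp images of galaxies",
    "the orion telescope took the first images of a planet",
    "the lyra telescope took the first images of a planet",
]


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Convert the follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def generate(counts, prompt, max_new_words=10):
    """Greedily append the most probable next word; nothing checks the facts."""
    words = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in training
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(next_word_probabilities(model, "took"))
    print(generate(model, "the zeta telescope took"))
```

With this corpus, `generate(model, "the zeta telescope took")` returns "the zeta telescope took the first images of a planet". The training text only says the zeta telescope took sharp images of galaxies, but "took the first images" is the more frequent pattern in the data, so the model produces a fluent, confident, and false statement.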

So when a topic is poorly covered in the training data, or when the AI model is small and can store less knowledge, the chance of hallucination is high. Basically, when the AI model doesn’t have accurate information about a specific topic, it still tries to guess the answer instead of admitting uncertainty, and those guesses are often wrong.

Moreover, much of this training text is sourced from the internet. If that text contains false information or bias, the AI chatbot learns to mimic it without understanding it. That is why data cleaning is an important way to reduce hallucinations.

OpenAI recently published a paper arguing that language models hallucinate because, first, they are trained to predict the next word, not to distinguish truth from falsehood. Second, they are rewarded for guessing an answer instead of saying “I don’t know” when facts are uncertain.

So, during post-training, AI models shouldn’t be punished for saying “I am not sure” or “I don’t know.” Instead, they should be rewarded for it when they are genuinely uncertain. That would change how models behave under uncertainty and reduce hallucinations.
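The incentive problem can be illustrated with some simple arithmetic. The Python sketch below assumes a hypothetical grading scheme (not the exact one from the OpenAI paper): a correct answer scores 1 point, a wrong answer scores 0 minus an optional `wrong_penalty`, and “I don’t know” scores an optional `idk_reward`. It computes the expected score of guessing versus abstaining for a model that has a `p_correct` chance of being right.

```python
def expected_score(p_correct, answer, wrong_penalty=0.0, idk_reward=0.0):
    """Expected score on one question under a hypothetical grading scheme.

    p_correct: the model's chance of being right if it answers.
    answer: True to give an answer, False to say "I don't know".
    wrong_penalty: points subtracted for a wrong answer (assumed scheme).
    idk_reward: points awarded for abstaining (assumed scheme).
    """
    if not answer:
        return idk_reward
    # Right with probability p_correct (+1), wrong otherwise (-wrong_penalty).
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def best_policy(p_correct, wrong_penalty=0.0, idk_reward=0.0):
    """Return whichever behavior maximizes the expected score."""
    guess = expected_score(p_correct, True, wrong_penalty, idk_reward)
    abstain = expected_score(p_correct, False, wrong_penalty, idk_reward)
    return "guess" if guess > abstain else "I don't know"


if __name__ == "__main__":
    for p in (0.2, 0.5, 0.9):
        print(
            f"p_correct={p}: accuracy-only -> {best_policy(p)}, "
            f"penalized -> {best_policy(p, wrong_penalty=1.0)}, "
            f"IDK rewarded -> {best_policy(p, idk_reward=0.3)}"
        )
```

Under plain accuracy grading (both extras set to 0), guessing always scores at least as well as abstaining, so a model optimized for that score learns to guess. With `wrong_penalty=1.0`, a 20%-confident guess has an expected score of 0.2 - 0.8 = -0.6, so saying “I don’t know” wins; in general, with `idk_reward` at 0, answering only pays off when `p_correct > wrong_penalty / (1 + wrong_penalty)`.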

Which AI Models Have the Lowest Hallucination Rates?

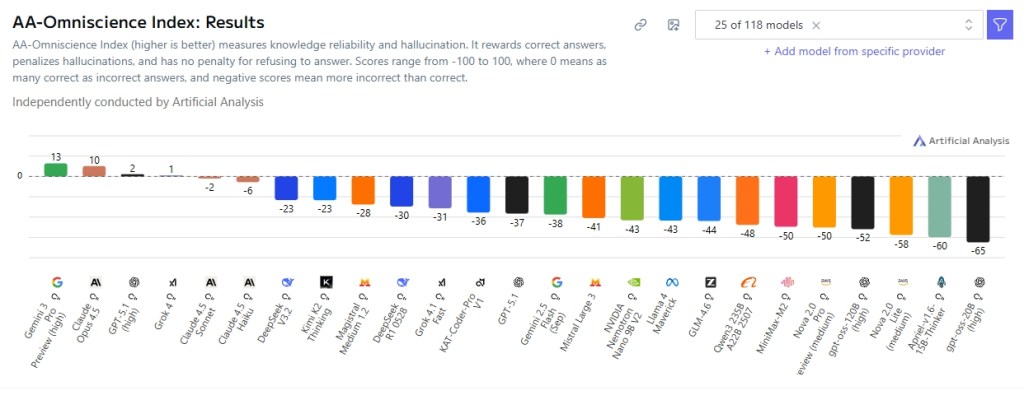

Artificial Analysis recently introduced the Omniscience Index, a benchmark that measures knowledge and hallucination across different domains. In this benchmark, Google’s Gemini 3 Pro, Anthropic’s Claude Opus 4.5, and OpenAI’s GPT-5.1 High showed lower hallucination rates. So if you are looking for an AI model that hallucinates less, you can try one of these chatbots.

Real-World Consequences of Artificial Intelligence Hallucinations

Since the launch of ChatGPT in late 2022, Artificial Intelligence hallucinations have caused serious damage around the world. In 2023, a lawyer used ChatGPT to draft court filings without realizing that most of the cited cases were fake. ChatGPT had made up non-existent court cases with realistic-sounding names.

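In 2023, Google’s first AI chatbot, Bard, gave an incorrect answer in a promotional video, claiming that the James Webb Space Telescope had taken the very first images of a planet outside our solar system. In reality, the first image of such a planet was captured in 2004 by the European Southern Observatory’s Very Large Telescope. After this mistake, or “hallucination,” was spotted, shares of Google’s parent company Alphabet fell sharply, wiping out about $100 billion in market value.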

In 2025, the Chicago Sun-Times published an AI-generated summer reading list in which 10 of the 15 titles turned out to be fake. Later, the newspaper removed the online edition of the article. And just recently, Deloitte provided the Australian government with a report worth A$440,000, which was later found to have been produced using AI and to contain multiple fabricated citations and errors.