- AnythingLLM is a program that lets you chat with your documents locally. It works even on budget computers.

- Unlike command-line solutions, AnythingLLM has a clean, easy-to-use graphical interface.

- You can feed it PDFs, CSVs, TXT files, audio files, spreadsheets, and a variety of other file formats.

To run a local LLM, you have LM Studio, but it doesn’t support ingesting local documents. There is also GPT4All, but I find it much heavier to use, and PrivateGPT has a command-line interface, which is not suitable for average users. This is where AnythingLLM comes in: it offers a slick graphical user interface that lets you feed in documents locally and chat with your files, even on consumer-grade computers. I have used it extensively and found AnythingLLM much better than the other solutions. Here is how you can use it.

Note:

AnythingLLM runs on budget computers as well, using both the CPU and the GPU. I tested it on a 10th-gen Intel i3 processor with a low-end Nvidia GT 730 GPU. That said, token generation will be slow on such hardware. If you have a more powerful computer, it will generate output much faster.

Download and Set Up AnythingLLM

- Go ahead and download AnythingLLM from here. It’s freely available for Windows, macOS, and Linux.

- Next, run the setup file and it will install AnythingLLM. This process may take some time.

- After that, click on “Get started” and scroll down to choose an LLM. I have selected “Mistral 7B”. You can download even smaller models from the list below.

- Next, choose “AnythingLLM Embedder” as it requires no manual setup.

- After that, select “LanceDB” which is a local vector database.

- Finally, review your selections and fill out the information on the next page. You can also skip the survey.

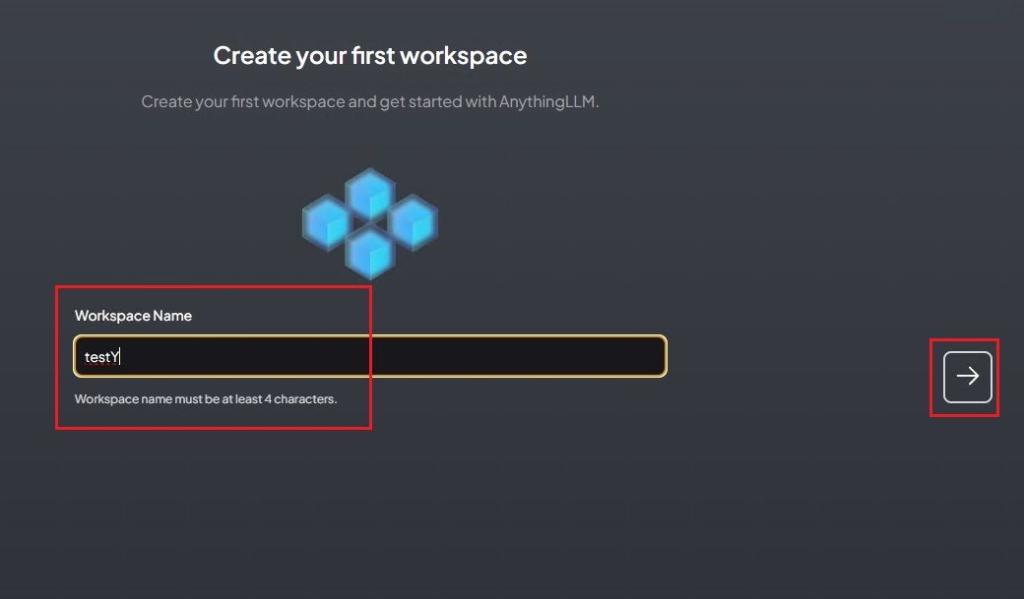

- Now, set a name for your workspace.

- You are almost done. You can see that Mistral 7B is being downloaded in the background. Once the LLM is downloaded, move to the next step.

Upload Your Documents and Chat Locally

- First of all, click on “Upload a document”.

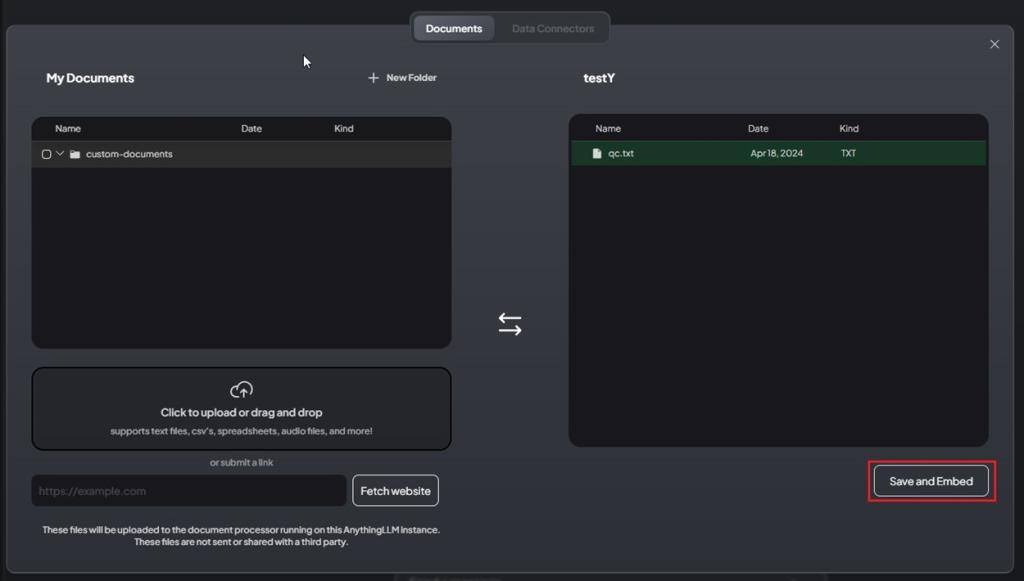

- Now, click to upload your files or drag and drop them. You can upload many file formats, including PDF, TXT, CSV, and audio files.

- I have uploaded a TXT file. Now, select the uploaded file and click on “Move to Workspace”.

- Next, click on “Save and Embed”. After that, close the window.

- You can now start chatting with your documents locally. As you can see, I asked a question about the contents of the TXT file, and it gave a correct reply citing the text file.

- I asked a few more questions, and it responded accurately.

- The best part is that you can also add a website URL, and it will fetch the content from the website. You can then chat with the LLM about that content.
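
If you are curious what “Save and Embed” and the chat step do under the hood, here is a rough conceptual sketch in Python. It is not AnythingLLM's actual code: the word-count "embedding", the in-memory list standing in for the vector database, and all function names (`chunk_text`, `embed`, `build_index`, `retrieve`, `build_prompt`) are assumptions made up for illustration. A real embedder produces dense numeric vectors, and a real vector database such as LanceDB stores them on disk.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: lowercase word counts (hypothetical stand-in for a real embedder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents):
    """Roughly the 'Save and Embed' step: store (source, chunk, vector) rows."""
    index = []
    for name, text in documents.items():
        for chunk in chunk_text(text):
            index.append((name, chunk, embed(chunk)))
    return index


def retrieve(index, question, k=1):
    """Return the k stored chunks most similar to the question."""
    q = embed(question)
    return sorted(index, key=lambda row: cosine(q, row[2]), reverse=True)[:k]


def build_prompt(question, hits):
    """Assemble the text that would be handed to the local LLM, with source names."""
    context = "\n".join(f"[{name}] {chunk}" for name, chunk, _ in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Made-up sample documents, for illustration only.
docs = {
    "notes.txt": "The quarterly report is due on Friday. Send it to the finance team.",
    "recipe.txt": "Boil the pasta for nine minutes, then add the tomato sauce.",
}
index = build_index(docs)
question = "How long should I boil the pasta?"
hits = retrieve(index, question)
print(build_prompt(question, hits))
```

The idea is that the LLM never reads your whole document collection at once. Only the chunks that best match your question are placed in the prompt, which is also how the reply can cite the file it came from.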

So this is how you can ingest your documents and files locally and chat with the LLM securely. There is no need to upload your private documents to cloud servers that have sketchy privacy policies. Nvidia has launched a similar program called Chat with RTX, but it only works with high-end Nvidia GPUs. AnythingLLM brings local inference even to consumer-grade computers, taking advantage of both CPU and GPU on any silicon.